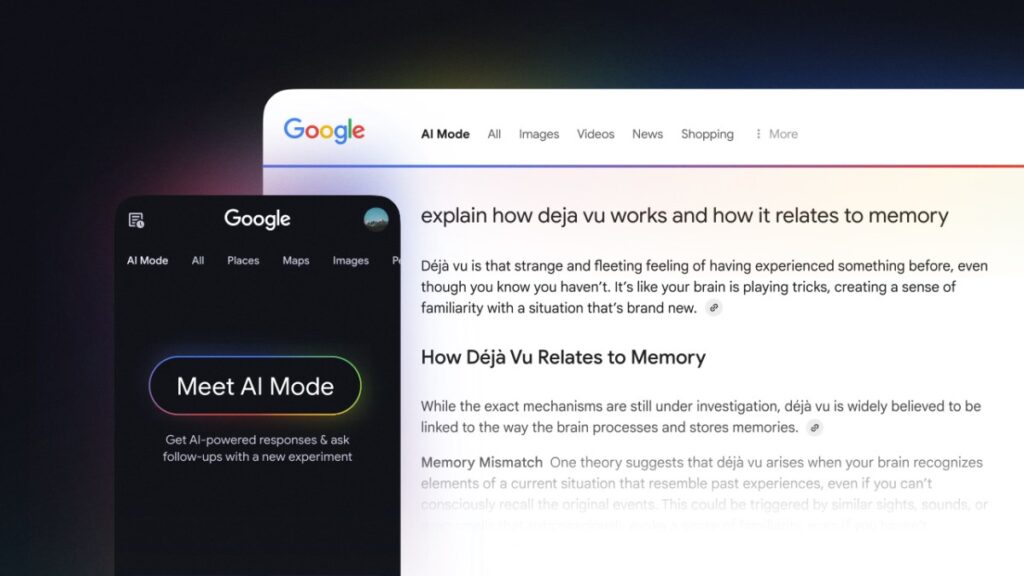

Google is launching a new experimental “AI Mode” feature in Search that looks to take on popular services such as Perplexity AI and OpenAI’s ChatGPT search. The tech giant announced on Wednesday that the new mode is designed to let users ask complex, multipart questions and follow-ups.

AI Mode is rolling out to Google One AI Premium subscribers starting this week and can be accessed via Search Labs, Google’s experimental arm.

The feature uses a custom version of Gemini 2.0 and is especially useful for questions that require further exploration and comparison, thanks to its advanced reasoning, thinking, and multimodal capabilities.

For example, you could ask, “What is the difference between the sleep tracking features of smart rings, smartwatches, and tracking mats?”

AI Mode can provide a detailed comparison of what each product offers, along with links to the articles it draws the information from. You can then ask a follow-up question, such as “What happens to your heart rate during deep sleep?”, to continue the search.

Google says that in the past, it would have taken multiple queries to compare detailed options or explore a new concept through traditional search.

AI Mode can access web content, and it can also tap into real-time sources such as the Knowledge Graph, information about the real world, and shopping data for billions of products.

“What we’re finding in testing is that people are asking questions that are about twice as long as traditional search queries. They’re also asking follow-up questions about a quarter of the time,” Google Search’s vice president of product, Robby Stein, told TechCrunch in an interview. “And so they’re really getting to these tougher questions, ones that need more back-and-forth, and there’s an expanded opportunity to do more with Google Search, and that’s what’s really exciting to us.”

Stein said that since Google rolled out AI Overviews, a feature that displays a snapshot of information at the top of the results page, the company has heard that users want a way to get this type of AI-powered answer for more of their searches.

AI Mode works using a “query fan-out” technique, which issues multiple related searches simultaneously across multiple data sources and then combines the results into an easy-to-understand response.
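Google has not published how its query fan-out is implemented, so the following is only a minimal Python sketch of the general idea: split a question into related sub-queries, run them concurrently against several sources, and merge the results. Everything in it is a hypothetical stand-in, including the source names, the placeholder documents in `SOURCES`, and the helpers `expand_query`, `search`, `fan_out`, and `answer`.

```python
import asyncio

# Hypothetical stand-ins for data sources. The strings are placeholder
# documents invented for this sketch, not real search results.
SOURCES = {
    "web": ["smart rings: sleep tracking article",
            "smartwatches: sleep tracking article"],
    "knowledge_graph": ["tracking mat: product category entry"],
    "shopping": ["smart rings: product listings",
                 "smartwatches: product listings"],
}

def expand_query(question):
    """Hypothetical expansion step: one sub-query per product named in the question."""
    products = ["smart rings", "smartwatches", "tracking mat"]
    return [p for p in products if p in question.lower()]

async def search(source, sub_query):
    """Simulate one asynchronous lookup of a sub-query against a single source."""
    await asyncio.sleep(0)  # placeholder for real network latency
    return [(source, doc) for doc in SOURCES[source] if sub_query in doc]

async def fan_out(question):
    """Run every (sub-query, source) search concurrently, then merge the hits."""
    sub_queries = expand_query(question)
    tasks = [search(src, q) for q in sub_queries for src in SOURCES]
    results = await asyncio.gather(*tasks)  # results keep the order of tasks
    merged = []
    for hits in results:
        for hit in hits:
            if hit not in merged:  # drop duplicates while preserving order
                merged.append(hit)
    return merged

def answer(question):
    """Synchronous entry point that returns the merged (source, document) pairs."""
    return asyncio.run(fan_out(question))
```

Calling `answer(...)` with the sleep-tracking question from earlier in the article returns a merged list of `(source, document)` pairs drawn from all three toy sources. A production system would presumably add ranking and a model-written summary on top of this merge step, which the sketch leaves out.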

“The model has learned to really prioritize factuality and to back up what it says with information that can be verified. That’s very important, and it pays special attention to areas that are very sensitive,” Stein said. “So, this could be health, as an example, and where it’s not confident, it might actually respond with just a list of web links and web URLs. It will do its best to be as useful as possible given the context of the information available and how confident it can be in the reply. This doesn’t mean it will never make mistakes. As with every new, cutting-edge AI technology that’s released, it is very likely to make mistakes.”

As this is an early experiment, Google notes that it will continue to refine the user experience and expand functionality. For example, the company plans to make the experience more visual and to surface information from a variety of sources, including user-generated content. Google is teaching the model to determine when to add a hyperlink to a response (such as for booking tickets) and when to prioritize an image or video (such as for how-to queries).

Google One AI Premium subscribers can access AI Mode by opting in to Search Labs, then entering a question in the Search bar and tapping the “AI Mode” tab. Alternatively, they can navigate directly to google.com/aimode to access the feature. On mobile, they can open the Google app and tap the “AI Mode” icon under the search bar on the home screen.

As part of today’s announcement, Google also shared that it has launched Gemini 2.0 for AI Overviews in the U.S. The company says AI Overviews will now be able to help with more difficult questions, starting with coding, advanced mathematics, and multimodal queries. Additionally, Google announced that users no longer need to sign in to access AI Overviews, and that the feature is now rolling out to teenagers as well.
