On Wednesday, Google introduced a new experimental Search feature called AI Mode. Built on a custom version of the Gemini 2.0 model, it aims to change the way people interact with Search by making it more conversational and multimodal. With AI Overviews already reaching more than 1 billion users, AI Mode goes further by handling complex questions in a more dynamic way.

In a post on X, Alphabet CEO Sundar Pichai said that AI Overviews are one of the most popular features in Search and will keep improving as the underlying models progress. In the US, Gemini 2.0 now improves responses to coding, advanced math and multimodal queries, making the experience stronger than before.

“Today, we are also introducing the latest Labs experiment for Search: AI Mode. Get AI responses using Gemini 2.0’s advanced reasoning, thinking, and multimodal capabilities + new ways to explore more of the web,” Pichai said.

Starting today, AI Mode is rolling out to Google One AI Premium subscribers, who can opt in through Labs. Like AI Overviews, AI Mode will continue to improve with time and user feedback.

AI Mode is rolling out to Google One AI Premium subscribers starting today, and you can opt in through Labs. And, like AI Overviews, AI Mode will get better with time and feedback. Get more details here: https://t.co/k9qfhh83hi

– Sundar Pichai (@sundarpichai) March 5, 2025

What is Google AI Mode Search?

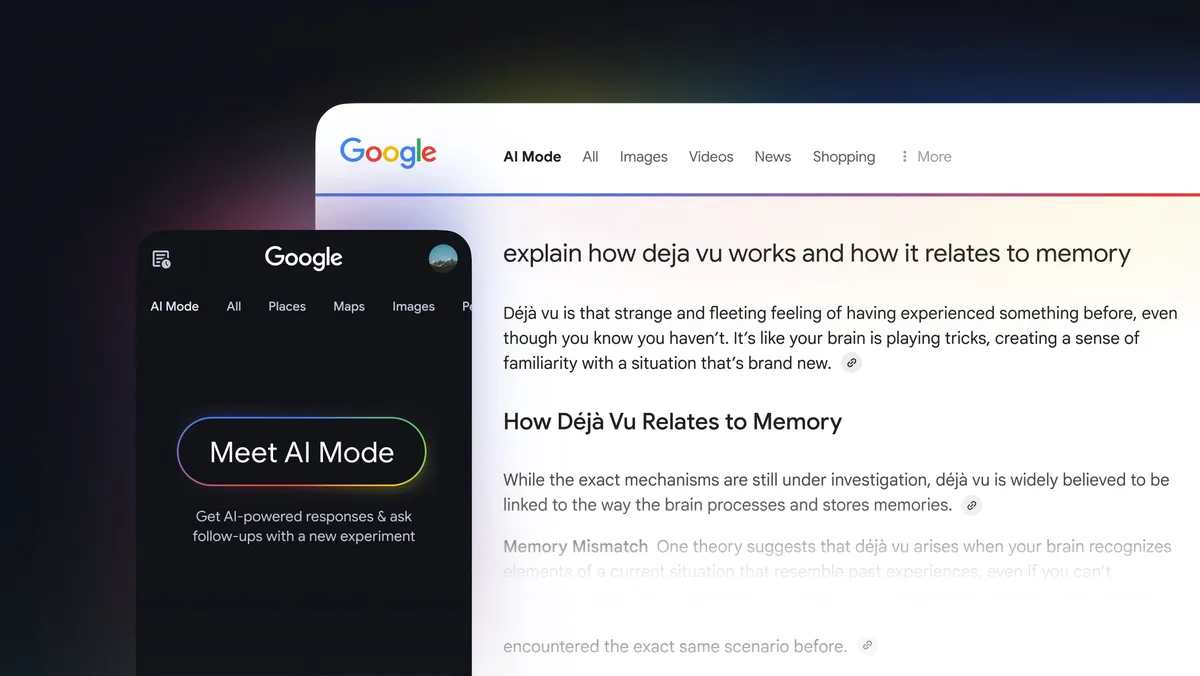

AI Mode is the latest step in Google’s generative AI efforts, designed to answer detailed, multipart questions that standard search often struggles with. Rather than only displaying a list of links or a summary, it generates a full-page response based on the query. It is integrated directly into Google Search, allowing users to ask follow-up questions, dig deeper into topics, and get a combination of real-time web data and advanced reasoning.

For example, if someone asks, “What is the best time for outdoor photography in Boston this week?”, AI Mode can analyze real-time weather data, suggest the best day and time (such as the golden hours), and provide additional links for details. To work out where and how to look, the AI breaks the question down into smaller searches, pieces together the findings, and presents a clear answer.

The key technique behind this is “query fan-out”: the AI runs multiple searches in parallel across different sources and brings the results together in one response. AI Mode goes beyond AI Overviews by offering improved reasoning, multimodal features (such as images and audio), and a chat-like interface that supports ongoing conversations.
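The fan-out idea can be illustrated with a small sketch. This is not Google's implementation, whose details the article does not describe; it only shows the general pattern of issuing several sub-queries to several sources in parallel and merging the results. The functions `search_weather`, `search_web` and `fan_out` are hypothetical stand-ins invented for illustration, and the sub-queries are written by hand rather than produced by a model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

SearchFn = Callable[[str], list[str]]


# Hypothetical stand-ins for real search backends; each returns canned text.
def search_weather(query: str) -> list[str]:
    return [f"[weather] forecast matching '{query}'"]


def search_web(query: str) -> list[str]:
    return [f"[web] page matching '{query}'"]


def fan_out(sub_queries: list[str], sources: list[SearchFn]) -> list[str]:
    """Run every (sub-query, source) pair in parallel and merge the results."""
    tasks = [(source, query) for query in sub_queries for source in sources]
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        # pool.map yields results in task order, so the merged list is stable.
        batches = pool.map(lambda task: task[0](task[1]), tasks)
        return [snippet for batch in batches for snippet in batch]


if __name__ == "__main__":
    # Hand-written sub-queries for the Boston photography example.
    sub_queries = ["Boston weather this week", "golden hour times Boston"]
    for snippet in fan_out(sub_queries, [search_weather, search_web]):
        print(snippet)
```

In a real system the merged snippets would then be passed to a model that writes the final answer; here they are simply printed.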

How it works and who can access it

AI Mode sits inside Google Search like any other filter. Users enter a query and tap the “AI Mode” button next to existing options such as Images and Videos. It can also be reached via the “deeper” shortcut in AI Overviews. Once activated, the response appears in a conversational format, with the original query at the top and a field for follow-up questions at the bottom.

Google is rolling this out first to Google One AI Premium subscribers in the US, who pay $19.99 per month. They can opt in through Search Labs, where Google tests experimental features. The initial focus is on people who frequently look for AI-driven answers, especially those who already add “AI” to their queries. Access is currently limited; Google has not set a timeline but plans to expand it over time.

Why AI Mode is Important

This move reflects a change in the way people search for information. Traditional search is good at returning raw material such as links, snippets and ads, but users often have to piece things together themselves. With the rise of AI-based assistants such as ChatGPT and Perplexity, Google has felt pressure to evolve. AI Mode is its response, combining access to web data and sources such as the Knowledge Graph and Shopping Graph with Gemini 2.0’s ability to process information and reason through complex queries.

AI Overviews, which launched widely in 2024, already serve more than 1 billion users. With Gemini 2.0 now strengthening these overviews, starting with coding, advanced math and multimodal queries, Google reports seeing more engagement: people are asking more specific questions and spending more time searching. AI Mode builds on this by meeting deeper research needs, such as comparing sleep-tracking devices or understanding abstract concepts.

Challenges and future possibilities

As with any AI-powered tool, AI Mode has its challenges. Google acknowledges that early-stage AI can misinterpret intent or introduce bias, even when it aims for objectivity. During this testing phase, the company plans to fine-tune the feature based on user feedback. Planned updates include richer visuals (such as images and videos), improved formatting, and new ways to link to web content.

The long-term vision is ambitious. AI Mode is meant to be more than a tool for surfacing links; it could turn Search into an active assistant. Future updates may handle more complex tasks, such as planning trips, troubleshooting technical issues, and summarizing research, all while keeping the information fresh and reliable.

What’s next?

Google’s launch of AI Mode marks an important shift in its approach to AI-powered search. As testing continues with Google One AI Premium subscribers, the company will refine the experience based on user behavior and feedback. Those interested in joining the waitlist can sign up on the Google Search Labs page.

This feature represents a major evolution in the way people find information. AI Mode points to a future where getting answers feels more conversational, whether someone is searching casually or relying on AI for deeper research.
