Earlier today, Google announced Gemma 3, a set of open source AI models that run on a single GPU or TPU and are designed to make AI development faster and more accessible. That stands in contrast to rivals like DeepSeek’s R1, which burns through dozens of Nvidia chips. It is a bold statement that advanced AI doesn’t necessarily need a silicon army to compete.

But this isn’t just Google flexing. It is the latest move in a growing rebellion that has been brewing for months. Imagine a silicon empire whose king sat unchallenged on a golden throne, until the rebels began forging their own crowns. That is the shift now under way in the world of AI hardware.

Longtime GPU titan Nvidia faces challenges from tech giants like Google, OpenAI and Meta. Is Nvidia’s reign under threat? Let’s break it down.

OpenAI sparks the fire in February

The first strike landed earlier this year. On February 5, 2025, OpenAI revealed that it is nearing completion of its first custom AI chip, with the aim of reducing its dependence on Nvidia. This was not a side hustle. It was a clear strategy to power next-generation models without relying on Nvidia hardware. For a company with huge AI ambitions, this was a big move. If OpenAI doesn’t need Nvidia, who does?

“OpenAI is planning to reduce its reliance on Nvidia by creating its own AI chip. The company will complete its first custom chip design in the coming months and send it to Taiwan Semiconductor Manufacturing Co (TSMC) for production.”

Meta enters the battle on March 11

Just yesterday, Meta jumped into the fight. The social media giant has begun testing its first in-house AI chip, designed for both training and inference, to cut the sky-high costs associated with Nvidia’s GPUs. Meta’s goal? Complete control over its AI operations without handing billions to external suppliers. This is a calculated move that is part of a larger shift: hyperscalers breaking free of Nvidia’s orbit. For Meta, this chip is the ticket.

Google turns up the heat today

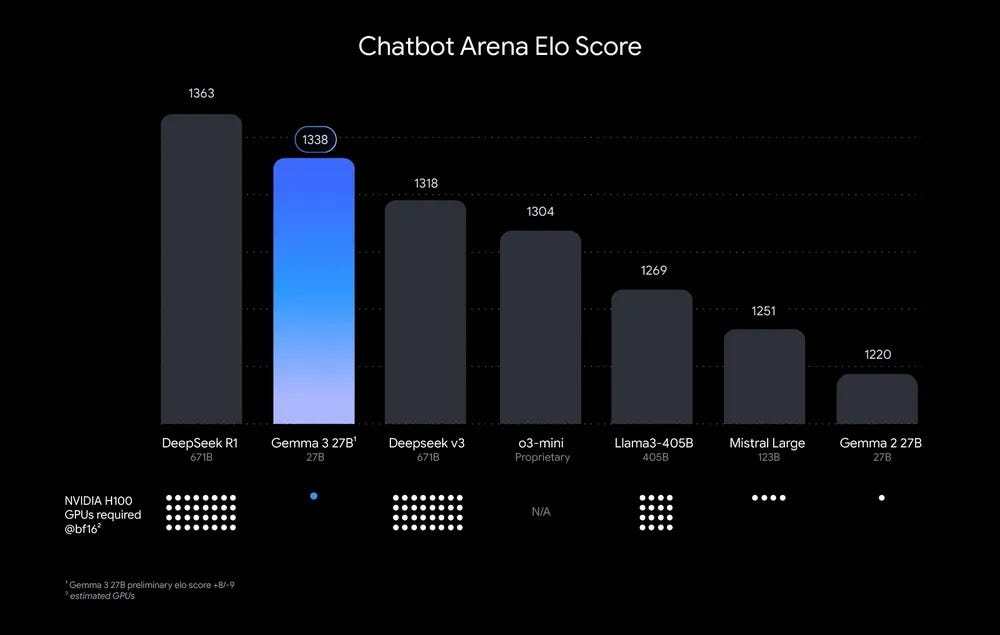

Today, Google dropped Gemma 3, a clear shot across Nvidia’s bow. DeepSeek’s R1 reportedly requires 34 Nvidia H100s, and Meta’s Llama 3 also needs multiple GPUs, but Gemma 3 can run on a single H100 or TPU. With model sizes ranging from 1 billion to 27 billion parameters, it offers a 128k context window and support for over 140 languages.

Early tests suggest that it outperforms rivals such as Llama-405B and OpenAI’s o3-mini. Google isn’t just showing off. It is making a point: advanced AI doesn’t have to cost billions of dollars in GPU spending. Gemma 3 is faster, cheaper and more accessible for businesses.

GPU Demand Dilemma

Gemma 3 is not just a technical milestone; it is a warning sign for Nvidia’s business model. Reports indicate that DeepSeek’s models have historically relied on dozens of GPUs.

However, Google’s Gemma 3 achieves similar results with just one chip. This leap in efficiency points to a future in which AI strength is not measured by GPU count. OpenAI’s and Meta’s custom chips are part of this shift, but Google’s move puts it at the forefront.

If newer models need fewer GPUs to match or outperform older ones, demand for Nvidia’s hardware could drop. Enterprises, startups and cloud providers could opt for leaner setups, putting pressure on Nvidia’s dominance. The threat is not only that rivals are building alternative chips, but also that fewer chips are needed in the first place.

Ripple Effect: Amazon, Startups, and Leaner Models

This shift is not limited to the tech giants. Amazon is quietly moving forward with its Trainium chips, supported by its investment in Anthropic, an AI company that already uses Google’s TPUs for most of its inference. Startups like Cerebras have also stepped up, with specialized chips that power models such as Perplexity’s Sonar and Mistral’s chatbot. The trend is toward efficiency rather than brute force, which threatens Nvidia’s premium margins. If Google can run Gemma 3 on one chip, why should others pay for a fleet of Nvidia hardware?

Lessons from the past: Cisco’s warning

History echoes here. Cisco ruled the networking world in the 1990s, with its routers and switches forming the backbone of the internet. In the 2000s, however, competitors like Juniper and Arista introduced cheaper, more flexible solutions that eroded Cisco’s advantage. Cisco adapted, but its grip on the market weakened as buyers came to favor cost and customization over a one-size-fits-all empire. Nvidia’s dominance feels similar. It may seem unshakable, but custom chips and efficient models could be Nvidia’s Juniper moment.

Nvidia’s throne is shaking

The market is sensing the change. Nvidia’s shares have fallen 15% this year, though today saw a 6% bounce. That slide contrasts with big spending plans like Project Stargate’s multi-billion-dollar data center buildout. All eyes will be on Nvidia’s GTC conference next week, where CEO Jensen Huang is expected to defend Nvidia’s position. Huang argues that its chips still lead in training and inference and that its ecosystem is unmatched. But the economics are changing, and the rise of custom silicon is real. Nvidia’s premium pricing model could be at risk.

Is this a turning point for AI hardware?

This is about more than Nvidia’s battle. It offers a glimpse into the future of AI hardware. As the industry moves from training to inference, efficiency is becoming the priority. OpenAI’s custom chip (due later this year), Meta’s ongoing testing, and Google’s Gemma 3 launch all point to a shift toward independence from Nvidia. Anthropic is already leaning on Google’s TPUs, and companies like Cerebras are carving out a position of their own. The challengers are gaining momentum.

Conclusion

Nvidia isn’t finished yet. Its ecosystem is still vast, and Huang is not wrong about its versatility. But the throne is wobbling. Is Nvidia in trouble? Not today, but the crown is up for grabs, and the tech giants are forging their own paths. The real question is whether Nvidia can hold the line, or whether this is the beginning of a new era of AI hardware.

The writing is on the wall for Nvidia, and we’re not the only ones reading it. CNBC is examining what Google’s efficient Gemma 3 model means for Nvidia’s future.
