Midjourney, one of the most popular AI image generation startups, announced on Wednesday the release of its much-anticipated AI video generation model V1.

V1 is an image-to-video model: users upload an image, or take one generated by one of Midjourney’s other models, and V1 produces a set of four five-second videos based on it. Like Midjourney’s image models, V1 is available only through Discord, and at launch it is available only on the web.

With the launch of V1, Midjourney will compete with AI video generation models from other companies, including OpenAI’s Sora, Runway’s Gen 4, Adobe’s Firefly, and Google’s Veo 3. While many companies have focused on developing controllable AI video models for use in commercial settings, Midjourney has always stood out for distinctive AI image models aimed at creative types.

The company says it has larger goals for its AI video models than generating B-roll for Hollywood films or commercials for the advertising industry. In a blog post, Midjourney CEO David Holz said the AI video model is the company’s next step toward its ultimate destination: AI models “capable of real-time open-world simulations.”

After AI video models, Midjourney says it plans to develop AI models for producing 3D renderings, as well as real-time AI models.

The launch of Midjourney’s V1 model comes just a week after the startup was sued by two of Hollywood’s most infamous film studios, Disney and Universal. The suit alleges that images created by Midjourney’s AI image models depict the studios’ copyrighted characters, such as Homer Simpson and Darth Vader.

Hollywood studios are struggling to confront the growing popularity of AI image and video generation models such as those developed by Midjourney. There is growing fear that these AI tools could replace or devalue the work of creatives in their respective fields, and some media companies claim that these products are trained on their copyrighted works.

Midjourney has tried to pitch itself as different from other AI image and video startups, as more focused on creativity than on immediate commercial applications, but the startup cannot escape these accusations.

To start, Midjourney says it will charge eight times more for a video generation than for a typical image generation. This means subscribers will use up their monthly allotted generations much faster when creating videos than when creating images.
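To make the eightfold price difference concrete, here is a minimal sketch of how quickly a monthly allowance would run out. Only the multiplier of eight comes from Midjourney's announcement. The budget of 200 image generations is a hypothetical figure chosen for illustration, not a number from any Midjourney plan.

```python
# Multiplier reported in the article: one video generation costs as much
# as eight typical image generations.
VIDEO_COST_MULTIPLIER = 8


def video_generations_affordable(image_generation_budget: int) -> int:
    """Return how many video generations fit in a budget that is
    expressed in image generations."""
    if image_generation_budget < 0:
        raise ValueError("budget must be non-negative")
    return image_generation_budget // VIDEO_COST_MULTIPLIER


# Hypothetical monthly allowance, for illustration only.
HYPOTHETICAL_IMAGE_BUDGET = 200

print(video_generations_affordable(HYPOTHETICAL_IMAGE_BUDGET))  # 25
```

Under that assumed budget, a subscriber who could make 200 images in a month would get only 25 video generations.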

At launch, the cheapest way to try out V1 is to subscribe to Midjourney’s $10-per-month Basic plan. Subscribers to Midjourney’s $60-per-month Pro plan and $120-per-month Mega plan will get unlimited video generations in the company’s slower “Relax” mode. Over the next month, Midjourney says it will reevaluate the pricing of its video model.

V1 comes with several custom settings that allow users to control the output of the video model.

Users can select an automatic animation setting that makes an image move randomly, or a manual setting that lets them describe in text the specific animation they want to add to the video. Users can also adjust the amount of camera and subject movement by selecting “Low Motion” or “High Motion” in settings.

Videos generated with V1 are only five seconds long, but users can choose to extend them by four seconds at a time, up to four times. This means a V1 video can run as long as 21 seconds.
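The 21-second ceiling follows from simple arithmetic if each extension adds four seconds to the five-second base clip and a clip can be extended at most four times. The cap of four extensions is an assumption here, chosen because it is the value that yields the stated maximum. A short sketch:

```python
BASE_SECONDS = 5        # length of an initial V1 clip, per the article
EXTENSION_SECONDS = 4   # seconds added by each extension
MAX_EXTENSIONS = 4      # assumed cap; it reproduces the 21-second maximum


def clip_length(extensions: int) -> int:
    """Return the clip length in seconds after a number of extensions."""
    if not 0 <= extensions <= MAX_EXTENSIONS:
        raise ValueError(f"extensions must be between 0 and {MAX_EXTENSIONS}")
    return BASE_SECONDS + EXTENSION_SECONDS * extensions


# Every reachable clip length, from no extensions to the maximum.
lengths = [clip_length(n) for n in range(MAX_EXTENSIONS + 1)]
print(lengths)  # [5, 9, 13, 17, 21]
```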

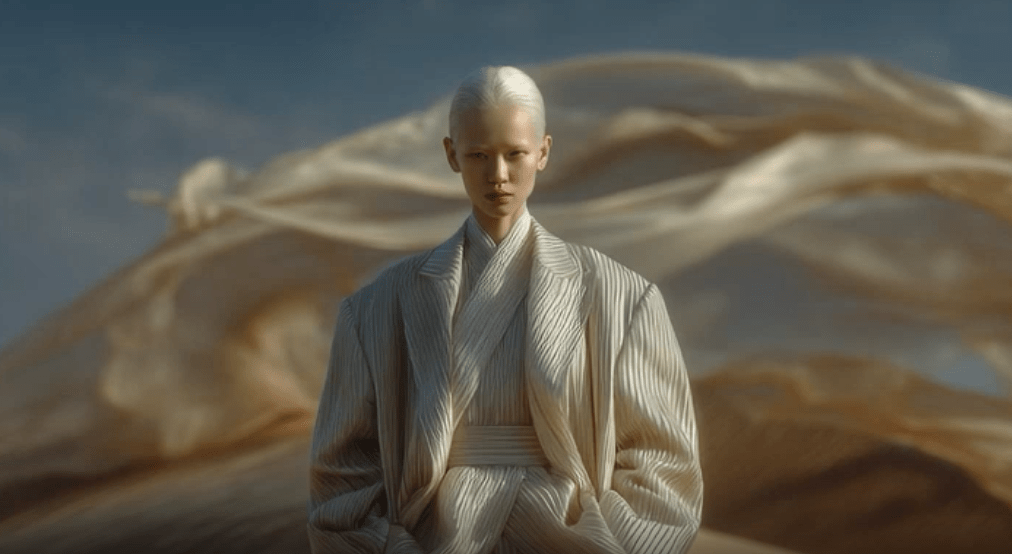

Much like the output of Midjourney’s AI image models, early demos of V1’s videos look somewhat otherworldly rather than hyperrealistic. The initial response to V1 has been positive, but it is still unclear how well it matches up against the other major AI video models, which have been on the market for months or years.