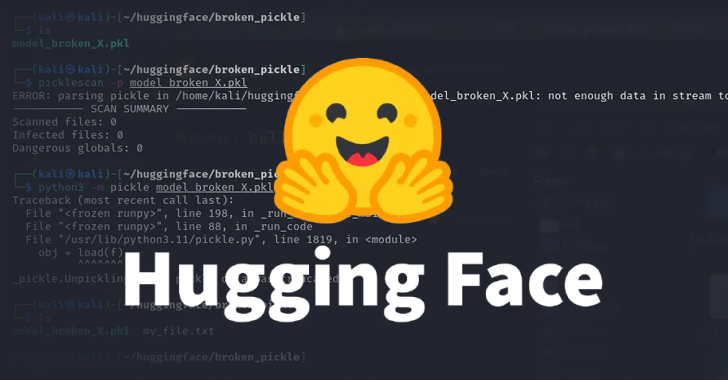

Cybersecurity researchers have discovered two malicious machine learning (ML) models on Hugging Face that use the unusual technique of “broken” pickle files to avoid detection.

“The pickle files extracted from the mentioned PyTorch archives revealed malicious Python content at the beginning of the file,” ReversingLabs researcher Karlo Zanki said in a report shared with The Hacker News. “In both cases, the malicious payload was a typical platform-aware reverse shell that connects to a hard-coded IP address.”

The approach has been dubbed nullifAI because it involves clear-cut attempts to sidestep the existing safeguards put in place to identify malicious models. The Hugging Face repositories are listed below:

GLOCKR1/BALLR7 WHO-R-U0000/00000000

The models are believed to be more of a proof of concept (PoC) than an active supply chain attack scenario.

The pickle serialization format, commonly used to distribute ML models, has repeatedly been found to be a security risk, because it offers ways to execute arbitrary code as soon as a file is loaded and deserialized.
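As a minimal sketch of why deserialization implies code execution, the snippet below uses pickle's `__reduce__` hook with a harmless built-in (`len`) standing in for a payload. The class name `Innocuous` is hypothetical and is not taken from the reported models.

```python
import pickle


class Innocuous:
    """Hypothetical class whose pickled form is 'call len("abc")'."""

    def __reduce__(self):
        # pickle stores a callable plus its arguments; the unpickler
        # invokes that callable while loading. A harmless built-in is
        # used here in place of anything dangerous.
        return (len, ("abc",))


blob = pickle.dumps(Innocuous())

# Loading does not rebuild an Innocuous instance. It calls len("abc")
# and hands back the return value.
result = pickle.loads(blob)
print(result)  # 3
```

Any importable callable could take the place of `len`, which is why pickle files from untrusted sources should not be loaded.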

The two models detected by the cybersecurity company are stored in the PyTorch format, which is nothing more than a compressed pickle file. PyTorch uses the ZIP format for compression by default, but the identified models were found to be compressed using the 7z format.

As a result, the models were able to fly under the radar and avoid being flagged as malicious by Picklescan, the open-source tool used to detect suspicious pickle files.
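To illustrate the container mismatch, here is a rough sketch of telling the two formats apart by their leading magic bytes instead of assuming ZIP. The helper `sniff_container` and the placeholder member name are assumptions made for illustration. This is not how Picklescan or PyTorch is implemented.

```python
import io
import zipfile

# Well-known file signatures: ZIP local file header or empty archive,
# and the six-byte 7z signature.
ZIP_MAGICS = (b"PK\x03\x04", b"PK\x05\x06")
SEVENZ_MAGIC = b"7z\xbc\xaf\x27\x1c"


def sniff_container(data: bytes) -> str:
    """Hypothetical helper: classify an archive by its first bytes."""
    if data.startswith(ZIP_MAGICS):
        return "zip"
    if data.startswith(SEVENZ_MAGIC):
        return "7z"
    return "unknown"


# Build a tiny ZIP in memory; the member name is only a placeholder.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("model/data.pkl", b"placeholder")

zip_kind = sniff_container(buffer.getvalue())
sevenz_kind = sniff_container(SEVENZ_MAGIC + b"\x00\x04")
print(zip_kind, sevenz_kind)
```

A scanner that only knows how to open ZIP archives would presumably never reach the pickle stream inside a 7z container, so a check along these lines could at least mark such files for closer inspection.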

“An interesting thing about this pickle file is that the object serialization (the purpose of the pickle file) breaks shortly after the malicious payload is executed, resulting in the failure of the object’s decompilation,” Zanki said.

Further analysis revealed that such broken pickle files can still be partially deserialized because of a discrepancy between how Picklescan handles them and how deserialization actually works. The open-source utility has since been updated to fix this bug.
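One way to picture a scanner that tolerates broken streams is sketched below: it walks the opcodes with the standard library's `pickletools.genops` and keeps whatever imports it saw before the stream became invalid. The `scan_imports` helper, the `Stub` class and the benign `print` payload are assumptions for illustration. This is not Picklescan's actual code.

```python
import pickle
import pickletools


class Stub:
    """Hypothetical object whose pickle references the built-in print."""

    def __reduce__(self):
        return (print, ("benign stand-in for a payload",))


# Protocol 3 emits a plain GLOBAL opcode and no framing. Serializing
# does not call print; only loading would.
stream = pickle.dumps(Stub(), protocol=3)
broken = stream[:-1] + b"\xff"  # swap the final STOP for an invalid opcode


def scan_imports(data):
    """Collect GLOBAL imports seen before the stream ends or breaks."""
    imports, intact = [], True
    try:
        for opcode, arg, _pos in pickletools.genops(data):
            # Only the GLOBAL opcode is handled in this sketch; newer
            # protocols use STACK_GLOBAL, which needs stack tracking.
            if opcode.name == "GLOBAL":
                imports.append(arg)
    except Exception:
        # Keep what was found instead of discarding the whole scan.
        intact = False
    return imports, intact


imports, intact = scan_imports(broken)
print(imports, intact)
```

The design choice is to treat a parse error as extra information, not as a reason to give up: imports found before the break are still reported, together with a flag saying the stream was damaged.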

“The explanation for this behavior is that object deserialization is performed on pickle files sequentially,” Zanki pointed out.

“Pickle opcodes are executed as they are encountered, until all opcodes have been executed or a broken instruction is encountered. In the case of the discovered models, because the malicious payload is inserted at the beginning of the pickle stream, execution of the model would not be detected as unsafe by Hugging Face’s existing security scanning tools.”
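The sequential behavior Zanki describes can be reproduced with a harmless stand-in. In this sketch, a `print` call plays the role of the payload, and the final opcode is replaced with an invalid byte so that loading fails only after the call has run. The `Early` class and the message text are assumptions for illustration.

```python
import contextlib
import io
import pickle


class Early:
    """Hypothetical object whose pickle calls print when loaded."""

    def __reduce__(self):
        return (print, ("payload ran",))


# Protocol 3 avoids framing, so the stream is consumed opcode by opcode.
stream = pickle.dumps(Early(), protocol=3)
broken = stream[:-1] + b"\xff"  # replace the closing STOP opcode

captured = io.StringIO()
error = None
with contextlib.redirect_stdout(captured):
    try:
        pickle.loads(broken)
    except Exception as exc:
        # Loading fails at the invalid byte, but only after the earlier
        # opcodes (including the call to print) have been executed.
        error = exc

output = captured.getvalue()
print(repr(output), type(error).__name__)
```

The load raises an error, yet the captured output already contains the message, which is the gap a scanner leaves open if it treats a failed parse as the end of its analysis.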
