AI is changing everything, from how we code to how we sell to how we secure. But while most conversations focus on what AI can do, this one focuses on what AI can break.

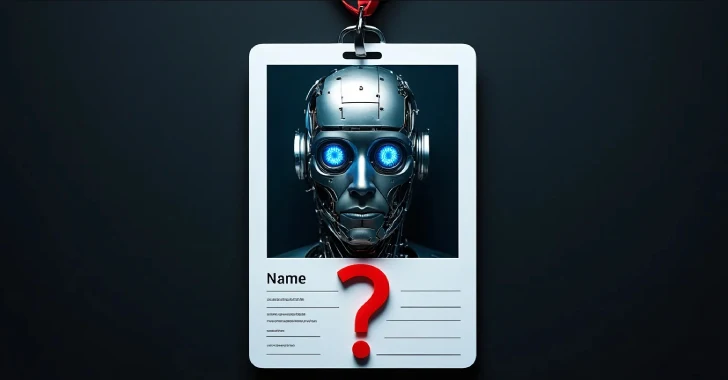

Behind every AI agent, chatbot, or automation script lies a growing number of non-human identities, such as API keys, service accounts, and OAuth tokens.

And here’s the problem:

🔐 They're invisible

🧠 They're powerful

🚨 They're unsecured

Traditional identity security protects users. With AI, we have quietly handed control to software that acts as those users, often with broad access, few guardrails, and no oversight.

This isn't theoretical. Attackers are already exploiting these identities to move laterally across cloud infrastructure and to deploy malware through automation pipelines.

Once compromised, these identities can quietly unlock critical systems. You don't get a second chance to fix what you can't see.

If you're building AI tools, deploying LLMs, or integrating automation into your SaaS stack, you're already relying on non-human identities (NHIs). And chances are, they aren't secured. Traditional IAM tools weren't built for this. You need a new strategy, fast.

This upcoming webinar, “Revealing and Securing the Invisible Identities Behind AI Agents,” led by Jonathan Sander, Field CTO at Astrix Security, isn't another “AI hype” talk. It's a wake-up call and a roadmap.

What you'll learn (and actually use):

- How AI agents create invisible identities
- Real-world attack stories that never made the news
- Why traditional IAM tools can't protect these identities
- Simple, scalable ways to see these identities

Most organizations don't realize how much exposure they have until it's too late.


This session is essential for security leaders, CTOs, DevOps leads, and AI teams who can’t afford silent failures.

The faster you recognize the risk, the faster you can fix it.

Seats are limited, and attackers aren't waiting. Save your spot now.
