Just a month after rolling out the Qwen2.5-Omni-7B model, Chinese technology giant Alibaba has returned with Qwen3, a new family of large language models that has already become a hot topic in China’s thriving open-source scene.

In a blog post on Tuesday, Alibaba described Qwen3 as a major leap in reasoning, instruction following, tool use, and multilingual performance. The lineup includes eight models in a variety of sizes and architectures, giving developers plenty of room to experiment, especially on edge devices such as smartphones.

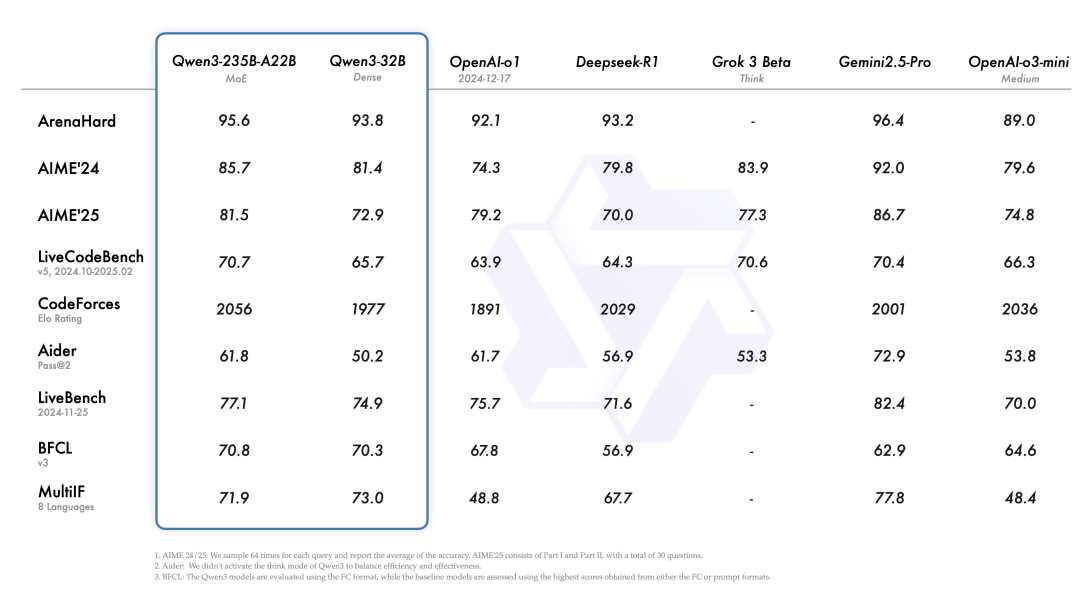

“Introducing Qwen3! We release and open-weight Qwen3, our latest large language models, including 2 MoE models and 6 dense models, ranging from 0.6B to 235B. Our flagship model, Qwen3-235B-A22B, achieves competitive results in benchmark evaluations of coding, math, general capabilities, etc., when compared to other top-tier models such as DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro,” Alibaba said in a post on X.

– Qwen (@alibaba_qwen) April 28, 2025

Alibaba’s Qwen3-235B-A22B outperforms larger models in AI benchmarks

According to Alibaba, the new Qwen3 lineup includes eight models, six dense and two mixture-of-experts (MoE), ranging from 0.6 billion to 235 billion parameters.

The highlight is Qwen3-235B-A22B, the flagship model, which Alibaba claims is on par with top-tier models such as DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro across benchmarks in coding, mathematics, and general reasoning.

What’s noteworthy is how well the smaller models punch above their weight. Qwen3-30B-A3B, a compact MoE, beats QwQ-32B, which uses 10 times as many active parameters. Even the lightweight Qwen3-4B matches the performance of the much larger Qwen2.5-72B-Instruct.
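To make the "10 times as many active parameters" comparison concrete, here is a rough back-of-the-envelope sketch. It assumes the nominal sizes implied by the model names (an "A3B" or "A22B" suffix read as roughly 3 or 22 billion parameters active per token, and a dense model such as QwQ-32B using all of its parameters). The exact counts are not given in this article, so treat the figures as approximations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSize:
    """Nominal sizes read off the model names (assumed, in billions)."""
    name: str
    total_b: float   # total parameters
    active_b: float  # parameters used per token

    @property
    def active_fraction(self) -> float:
        # Share of the model's weights that take part in each forward pass.
        return self.active_b / self.total_b


# An MoE model activates only a slice of its weights; a dense model uses all.
qwen3_small_moe = ModelSize("Qwen3-30B-A3B", total_b=30, active_b=3)
qwq_dense = ModelSize("QwQ-32B", total_b=32, active_b=32)
qwen3_flagship = ModelSize("Qwen3-235B-A22B", total_b=235, active_b=22)


def active_ratio(bigger: ModelSize, smaller: ModelSize) -> float:
    """How many times more active parameters `bigger` uses than `smaller`."""
    return bigger.active_b / smaller.active_b


if __name__ == "__main__":
    ratio = active_ratio(qwq_dense, qwen3_small_moe)
    print(f"{qwq_dense.name} vs {qwen3_small_moe.name}: {ratio:.1f}x active")
    print(f"{qwen3_flagship.name} active share: "
          f"{qwen3_flagship.active_fraction:.1%}")
```

On these nominal numbers the dense model uses a little over ten times the active parameters of the compact MoE, and the flagship activates under a tenth of its weights per token.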

Qwen3 also marks Alibaba’s entry into what it calls “hybrid reasoning.” Put simply, the models can switch between a slower, more deliberate mode suited to tasks like writing code and a faster mode suited to general replies. This dual-mode setup can make the models more versatile without compromising speed or quality.
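For developers, the mode switch might look something like the sketch below. It is not taken from Alibaba's announcement. It assumes the `enable_thinking` flag that the Qwen3 model cards on Hugging Face describe passing to the tokenizer's `apply_chat_template` call, and the task labels and helper names (`DELIBERATE_TASKS`, `wants_thinking`, `render_prompt`) are hypothetical, chosen only for illustration.

```python
from typing import Any, Protocol


class ChatTokenizer(Protocol):
    """Anything exposing a Hugging Face style apply_chat_template method."""

    def apply_chat_template(
        self, conversation: list[dict[str, str]], **kwargs: Any
    ) -> str: ...


# Hypothetical task labels: the article only says code-like work suits the
# slower mode; "math" is added here as an assumption.
DELIBERATE_TASKS = {"code", "math"}


def wants_thinking(task: str) -> bool:
    """Pick the slower, deliberate mode only for tasks that benefit from it."""
    return task in DELIBERATE_TASKS


def render_prompt(tokenizer: ChatTokenizer, user_text: str, task: str) -> str:
    """Build the prompt string, toggling the reasoning mode per request."""
    messages = [{"role": "user", "content": user_text}]
    return tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True,
        # Assumed flag from the Qwen3 model cards, not from this article.
        enable_thinking=wants_thinking(task),
    )
```

In practice the tokenizer would come from something like `AutoTokenizer.from_pretrained(...)` in the `transformers` library, pointed at a Qwen3 checkpoint. The point of the sketch is only that the same model serves both kinds of request, with the mode chosen per call.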

One standout in the series is the Qwen3-235B-A22B MoE model, which Alibaba says significantly reduces deployment costs, a key factor for businesses weighing AI spending.

Developers can get Qwen3 for free on Hugging Face, GitHub, and Alibaba Cloud. The model also powers Alibaba’s own AI assistant, Quark.

Raising the stakes in China’s AI push

Industry watchers see Qwen3 as more than just a technical release. It’s a shot across the bow of other players in China and the US.

Wei Sun, AI analyst at Counterpoint Research, told CNBC that the model series stands out not only for performance but also for its multilingual reach (it supports 119 languages and dialects), hybrid reasoning capabilities, and open access.

Timing matters. Earlier this year, DeepSeek made waves with its R1 model, a move that lit a fire under China’s open-source AI movement. Alibaba seems determined to maintain that momentum.

Ray Wang, a US-based analyst who focuses on the technological competition between the US and China, said Qwen3 highlights how far China’s labs have come, even as US restrictions on technology exports tighten.

“The release of Alibaba’s Qwen 3 Series further highlights the strong ability of Chinese labs to develop highly competitive, innovative and open source models despite increasing pressure from strengthening US export controls,” Wang told CNBC.

Alibaba claims that its Qwen models have already racked up more than 300 million downloads and that developers have created more than 100,000 derivative models on Hugging Face. Wang believes Qwen3 can push those numbers higher. While still a notch behind premium offerings such as OpenAI’s o3 and o4-mini, it could even claim a spot as the best open-source model on the market today.

Other tech players in China are taking notice. Baidu is reportedly shifting its focus to open-source AI, and DeepSeek is already preparing to launch a successor to R1.

Wang summed it up: “The gap between American and Chinese AI labs is no longer as wide as it used to be. It’s probably months, maybe weeks.”
