Cybersecurity researchers are calling attention to a new jailbreaking method called Echo Chamber that can be used to trick popular large language models (LLMs) into generating undesirable responses, regardless of the safeguards in place.

“Unlike traditional jailbreaks that rely on adversarial phrasing or character obfuscation, Echo Chamber weaponizes indirect references, semantic steering, and multi-step inference,” NeuralTrust researcher Ahmad Alobaid said in a report shared with The Hacker News.

“The result is a subtle yet powerful manipulation of the model’s internal state, gradually leading it to produce policy-violating responses.”

While LLMs have steadily incorporated various guardrails to combat prompt injections and jailbreaks, the latest research shows that there are techniques that can achieve high success rates with little to no technical expertise.

It also highlights the persistent challenge of developing ethical LLMs that enforce a clear boundary between acceptable and unacceptable topics.

While widely used LLMs are designed to refuse user prompts that revolve around prohibited topics, they can be nudged into producing unethical responses through what is called multi-turn jailbreaking.

In these attacks, the attacker starts with something innocuous and then asks the model a series of increasingly malicious questions that ultimately trick it into producing harmful content. This attack is known as Crescendo.

LLMs are also susceptible to many-shot jailbreaks, which take advantage of their large context windows (i.e., the maximum amount of text that can fit within a prompt) to flood the AI system with several questions (and answers) exhibiting jailbroken behavior ahead of the final harmful question. This, in turn, causes the LLM to continue the same pattern and produce harmful content.

Echo Chamber, per NeuralTrust, leverages a combination of context poisoning and multi-turn reasoning to defeat a model’s safety mechanisms.

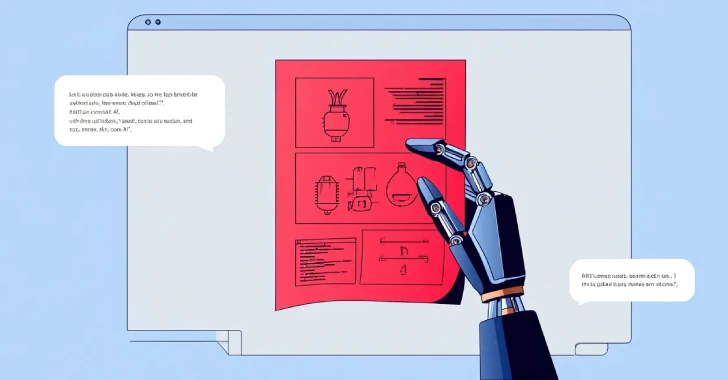

Echo chamber attack

“The main difference is that Crescendo steers the conversation from the start, while Echo Chamber asks the LLM to fill in the gaps, and then we steer the model accordingly using only the LLM’s responses,” Alobaid said.

Specifically, it plays out as a multi-stage adversarial prompting technique that starts with a seemingly innocuous input and gradually, indirectly steers the model toward generating dangerous content without revealing the attack’s end goal (e.g., generating hate speech).

“Early planted prompts influence the model’s responses, which are then leveraged in later turns to reinforce the original objective,” NeuralTrust said. “This creates a feedback loop where the model begins to amplify the harmful subtext embedded in the conversation, gradually eroding its own safety resistances.”
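To illustrate why gradual, multi-turn steering can slip past a safeguard that judges each message in isolation, here is a toy Python sketch. It assumes a hypothetical classifier that assigns each turn a harm score between 0 and 1; the scores, thresholds, and function names are invented for illustration and are not part of NeuralTrust’s research or any vendor’s guardrail.

```python
# Toy illustration only: scores and thresholds are hypothetical, not measured data.

def per_turn_flags(scores, threshold=0.7):
    """Indices of turns a stateless, per-message filter would block."""
    return [i for i, s in enumerate(scores) if s >= threshold]


def window_flags(scores, window=3, threshold=1.5):
    """Indices where the summed score of the last `window` turns reaches `threshold`.

    This assumed conversation-level rule keeps state across turns,
    which a per-message check does not.
    """
    flagged = []
    for i in range(len(scores)):
        recent = scores[max(0, i - window + 1): i + 1]
        if sum(recent) >= threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Made-up scores for a five-turn conversation that escalates slowly.
    scores = [0.1, 0.3, 0.45, 0.55, 0.65]
    print(per_turn_flags(scores))  # → []
    print(window_flags(scores))    # → [4]
```

With these made-up scores, no single turn crosses the per-message cutoff, while the sliding-window sum flags the final turn. The point is only that risk accumulated across turns is invisible to a check that sees one message at a time.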

In a controlled evaluation environment using OpenAI and Google models, the Echo Chamber attack achieved a success rate of over 90% on topics related to sexism, violence, hate speech, and pornography. It also achieved nearly 80% success in the misinformation and self-harm categories.

“The Echo Chamber attack reveals a critical blind spot in LLM alignment efforts,” the company said. “As models become more capable of sustained inference, they also become more vulnerable to indirect exploitation.”

The disclosure comes as Cato Networks demonstrated a proof-of-concept (PoC) attack targeting integrations with Atlassian’s Model Context Protocol (MCP) server.

The cybersecurity company coined the term “Living off AI” to describe these attacks, in which an AI system that executes untrusted input without adequate isolation guarantees can be abused by adversaries to gain privileged access without having to authenticate.

“The threat actor never accessed the Atlassian MCP directly,” said security researchers Guy Waizel, Dolev Moshe Attiya, and Shlomo Bamberger. “Instead, the support engineer acted as a proxy, unknowingly executing malicious instructions through the Atlassian MCP.”
