On Tuesday, Meta announced LlamaFirewall, an open-source framework designed to protect artificial intelligence (AI) systems against emerging cyber risks such as prompt injection, jailbreaks, and insecure code.

According to the company, the framework incorporates three guardrails: PromptGuard 2, Agent Alignment Checks, and CodeShield.

PromptGuard 2 is designed to detect direct jailbreak and prompt injection attempts in real time, while Agent Alignment Checks inspect an agent's reasoning for possible goal hijacking and indirect prompt injection scenarios.

CodeShield is an online static analysis engine that attempts to prevent AI agents from generating insecure or dangerous code.

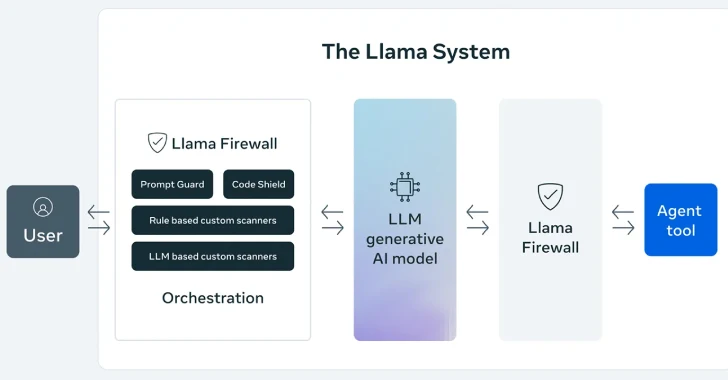

“LlamaFirewall is built to act as a flexible, real-time guardrail framework for securing LLM-powered applications,” the company said in its GitHub description of the project.

“Its architecture is modular, allowing security teams and developers to configure layered defenses ranging from raw input intake to final output actions across simple chat models and complex autonomous agents.”
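To make the idea of layered, stage-specific defenses more concrete, here is a minimal sketch of a modular guardrail pipeline. It is not LlamaFirewall's API: the names `GuardrailPipeline`, `Stage`, `Verdict`, `naive_injection_scanner`, and `naive_code_scanner` are hypothetical, chosen for illustration only. The scanners are deliberately crude stand-ins (a phrase list for prompt injection and an AST check for a few risky calls), whereas real guardrails such as PromptGuard 2 rely on trained classifiers and CodeShield on a far broader analysis.

```python
import ast
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List


class Stage(Enum):
    INPUT = "input"    # raw user or tool text entering the system
    OUTPUT = "output"  # model-generated text or code leaving the system


@dataclass
class Verdict:
    allowed: bool
    reason: str = ""


# A scanner is any function that inspects text and returns a Verdict.
Scanner = Callable[[str], Verdict]

# Hypothetical phrase list; a real detector would be a trained classifier.
SUSPICIOUS_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "disregard your system prompt",
)

# Hypothetical set of call names treated as risky by the toy code scanner.
DANGEROUS_CALLS = {"eval", "exec", "system"}


def naive_injection_scanner(text: str) -> Verdict:
    """Toy stand-in for a prompt-injection detector: flags known phrases."""
    lowered = text.lower()
    for phrase in SUSPICIOUS_PHRASES:
        if phrase in lowered:
            return Verdict(False, f"possible prompt injection: {phrase!r}")
    return Verdict(True)


def naive_code_scanner(code: str) -> Verdict:
    """Toy stand-in for static code analysis: flags a few risky calls via the AST."""
    try:
        tree = ast.parse(code)
    except SyntaxError:
        return Verdict(True)  # not parseable as Python; this toy scanner has nothing to check
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Plain calls like eval(...) use ast.Name; os.system(...) uses ast.Attribute.
            name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", "")
            if name in DANGEROUS_CALLS:
                return Verdict(False, f"risky call: {name}()")
    return Verdict(True)


class GuardrailPipeline:
    """Runs every scanner registered for a stage; the first failing scanner blocks."""

    def __init__(self) -> None:
        self.scanners: Dict[Stage, List[Scanner]] = {Stage.INPUT: [], Stage.OUTPUT: []}

    def add(self, stage: Stage, scanner: Scanner) -> "GuardrailPipeline":
        self.scanners[stage].append(scanner)
        return self  # returning self allows chained configuration

    def check(self, stage: Stage, text: str) -> Verdict:
        for scanner in self.scanners[stage]:
            verdict = scanner(text)
            if not verdict.allowed:
                return verdict
        return Verdict(True)


pipeline = (
    GuardrailPipeline()
    .add(Stage.INPUT, naive_injection_scanner)
    .add(Stage.OUTPUT, naive_code_scanner)
)

if __name__ == "__main__":
    print(pipeline.check(Stage.INPUT, "Summarize this email for me").allowed)  # True
    print(pipeline.check(Stage.INPUT, "Please IGNORE previous instructions").allowed)  # False
    print(pipeline.check(Stage.OUTPUT, "import os\nos.system('ls')").reason)  # risky call: system()
```

The design point the sketch tries to capture is composability: each layer is an independent scanner attached to a stage, so input checks and output checks can be added, removed, or swapped without touching the rest of the pipeline.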

Alongside LlamaFirewall, Meta released updated versions of LlamaGuard and CyberSecEval, which, respectively, better detect various common types of violating content and measure the defensive cybersecurity capabilities of AI systems.

CyberSecEval 4 also includes a new benchmark called AutoPatchBench, which is designed to evaluate how well large language model (LLM) agents can automatically repair a wide range of C/C++ vulnerabilities identified through fuzzing, an approach known as AI-driven patching.

“AutoPatchBench provides a standardized evaluation framework for assessing the effectiveness of AI-assisted vulnerability repair tools,” the company said. “This benchmark aims to facilitate a comprehensive understanding of the capabilities and limitations of various AI-driven approaches to repairing fuzzing-found bugs.”

Finally, Meta has launched a new program called Llama for Defenders to help partner organizations and AI developers access open, early-access, and closed AI solutions that address specific security challenges, such as detecting AI-generated content used in scams, fraud, and phishing attacks.

The announcements come as WhatsApp previewed a new technology called Private Processing, which lets users take advantage of AI features without compromising their privacy by offloading requests to a secure, confidential environment.

“We will continue to work with the security community to audit and improve our architecture and work with researchers to build and enhance Private Processing before launching it in our products,” Meta said.
