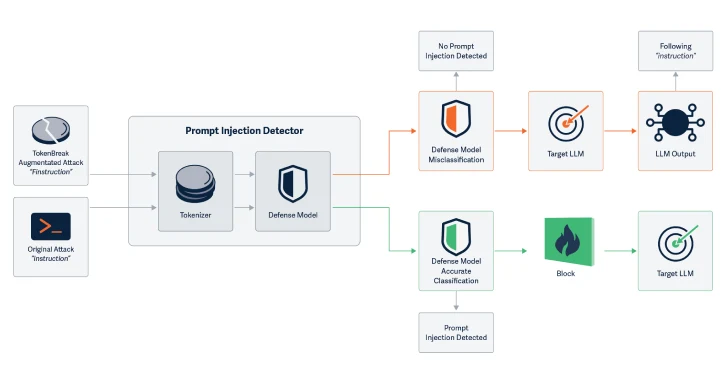

Cybersecurity researchers have discovered a new attack technique called tokenbreaks that can be used to bypass the safety and content moderation guardrails of large-scale language models (LLMs) with a single character change.

“Token break attacks target tokenization strategies in the text classification model to induce false negatives and lead to end targets vulnerable to attacks in which the implemented protective model is introduced,” Kieran Evans, Kasimir Schulz, and Kenneth Yeung said in a report shared with Hacker News.

Tokenization is the basic step that LLM uses to break down raw text into atomic units (i.e., tokens). This is a general sequence of characters found in a set of text. To that end, the text input is converted to a numerical representation and fed into the model.

LLMS works by understanding the statistical relationships between these tokens, generating the next token in a set of tokens: Output tokens are depicted in human-readable text by mapping them to corresponding words using the vocabulary of Tokensor.

The attack technique devised by HiddenLayer targets tokenization strategies to bypass the ability of text classification models to detect text input and flag safety, spam, or content moderation-related issues in text input.

Specifically, artificial intelligence (AI) security companies have discovered that changing input words by adding characters in a specific way breaks the text classification model.

Examples include changing “instruction” to “finstruction”, “presentation” to “announcement” or “idiot” to “idiot”. These small changes allow Tokenzor to split the text differently, but the meaning remains clear to both AI and readers.

What is noteworthy about the attack is that the manipulated text remains fully understood by both LLM and human readers, and the model elicits the same response as if unmodified text was passed as input.

By introducing some of the operations without affecting the ability to understand the model, token breaks increase the possibility of rapid injection attacks.

“This attack technique manipulates input text so that certain models give incorrect classification,” the researcher said in an accompanying paper. “Importantly, the final target (LLM or email recipient) is able to understand and respond to the manipulated text and therefore are vulnerable to the very attacks that the protective model has been introduced to prevent it.”

This attack has been found to be successful against text classification models using BPE (byte pair encoding) or wordpiece tokenization strategies, but not for those using Unigram.

“The token break attack technique shows that these protective models can be bypassed by manipulating input text and making production systems vulnerable,” the researchers said. “Knowing the family of underlying conservation models and their tokenization strategies is important to understand their sensitivity to this attack.”

“Tokenization strategies usually correlate with model families, so there is a simple mitigation. Choose the option to use Unigram tokens.”

To protect against token breaks, researchers suggest using Unigram Tokensor where possible, training the model with bypass trick examples, to ensure that tokenization and model logic remain consistent. It also helps you to record misclassifications and find patterns that suggest manipulation.

This study will be less than a month after HiddenLayer uncovered how to extract sensitive data using Model Context Protocol (MCP) tools.

This discovery also comes when the Straiker AI Research (STAR) team discovers that they use their backs to jailbreak AI chatbots and trick them into generating unwanted responses, such as oaths, promoting violence, and creating sexually explicit content.

Called the yearbook attack, this technique has proven effective against a variety of models from humanity, Deepseek, Google, Meta, Microsoft, Mistral AI, and Openai.

“They blend into the noise of everyday prompts – the quirky mystery here, the acronym for motivation – so they bypass the blunt instruments that models use to find dangerous intent.”

“Players like “friendship, unity, care, kindness” do not set up a flag. But by the time the model completes the pattern, it already offers the payload, the key to doing this trick well. ”

“These methods succeed by sliding underneath the filters of the model rather than overwhelm them. They leverage methods to consider the continuity of the completion bias and pattern, as well as the consistency of the context to the model’s intentional analysis.”

Source link