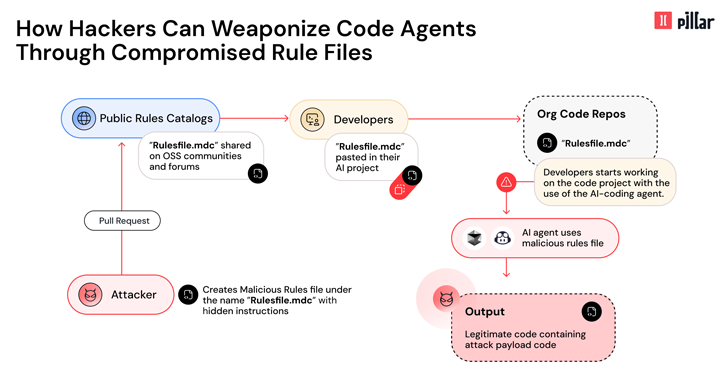

Cybersecurity researchers have disclosed details of a new supply chain attack vector, dubbed the Rules File Backdoor, that affects AI-powered code editors such as GitHub Copilot and Cursor.

“This technique enables hackers to silently compromise AI-generated code by injecting hidden malicious instructions into seemingly innocent configuration files used by Cursor and GitHub Copilot,” Ziv Karliner, co-founder and CTO of Pillar Security, said in a technical report shared with The Hacker News.

“By exploiting hidden Unicode characters and sophisticated evasion techniques in the model-facing instruction payload, threat actors can manipulate the AI to insert malicious code that bypasses typical code reviews.”

The attack vector is notable because it lets malicious code propagate silently across projects, posing a supply chain risk.

The crux of the attack lies in the rules files that AI agents use to guide their behavior, which help users define coding best practices and project architecture.

Specifically, the attack involves embedding carefully crafted prompts in seemingly benign rules files, causing the AI tool to generate code that contains security vulnerabilities or backdoors. In other words, the poisoned rules nudge the AI into producing malicious code.

This can be achieved by using zero-width joiners, bidirectional text markers, and other invisible characters to hide the malicious instructions, and by exploiting the AI’s ability to interpret natural language so that crafted patterns trick the model into overriding its ethical and safety constraints and generating vulnerable code.
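The hiding technique described above depends on characters that do not render in most editors and diff views. As a rough illustration of how a reviewer might surface them, the sketch below flags every character in the Unicode "format" category (`Cf`), which covers zero-width joiners and bidirectional controls. The function name `find_hidden_characters` and the sample text are hypothetical and not taken from the Pillar Security report. The check is also deliberately broad, since some legitimate text (emoji sequences, certain scripts) uses these characters.

```python
import unicodedata


def find_hidden_characters(text):
    """Return (line, column, name) for each Unicode format (Cf) character.

    Category Cf includes zero-width joiners/spaces and bidirectional
    controls. This is a heuristic: a hit means "look closer", not
    "this file is malicious".
    """
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                # Fall back to the code point if the character has no name.
                name = unicodedata.name(ch, f"U+{ord(ch):04X}")
                findings.append((line_no, col, name))
    return findings


if __name__ == "__main__":
    # Hypothetical rules-file content with a hidden span on line 1.
    sample = "Follow PEP 8.\u200d\u202ehidden text\u202c\nUse type hints."
    for line_no, col, name in find_hidden_characters(sample):
        print(f"line {line_no}, col {col}: {name}")
```

Run against the contents of a rules file, a non-empty result indicates the file contains characters a human reviewer would likely not see, which is a reasonable trigger for manual inspection.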

Following responsible disclosure in late February and March 2024, both Cursor and GitHub stated that users are responsible for reviewing and accepting the suggestions generated by the tools.

“The ‘Rules File Backdoor’ represents a serious risk by weaponizing the AI itself as an attack vector, effectively turning the developer’s most trusted assistant into an unwitting accomplice and potentially affecting millions of end users through compromised software,” Karliner said.

“Once a poisoned rules file is incorporated into a project repository, it affects all future code-generation sessions by team members. Furthermore, the malicious instructions survive project forking, creating a vector for supply chain attacks that can affect downstream dependencies and end users.”
