Unknown threat actors have been observed weaponizing v0, a generative artificial intelligence (AI) tool from Vercel, to design fake sign-in pages that impersonate their legitimate counterparts.

“This observation signals a new evolution in the weaponization of generative AI by threat actors, who have demonstrated an ability to generate a functional phishing site from simple text prompts.”

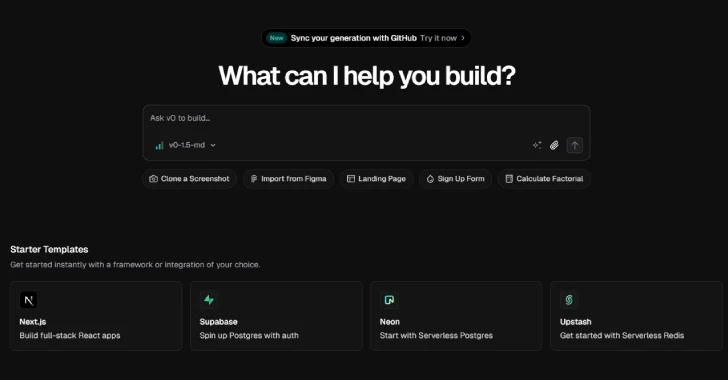

v0 is an AI-driven offering from Vercel that allows users to create basic landing pages and full-stack apps using natural language prompts.

The identity services provider said it observed threat actors using the technology to create convincing replicas of login pages associated with multiple brands, including an unnamed customer of its own. Following responsible disclosure, Vercel has blocked access to these phishing sites.

The threat actors behind the campaign have also been found hosting other resources, such as the impersonated companies’ logos, on Vercel’s infrastructure, likely to abuse the trust associated with the developer platform and evade detection.

Unlike traditional phishing kits, which require some effort to set up, tools like v0 and its open-source clones on GitHub allow attackers to spin up fake pages simply by typing a prompt. It’s faster, easier, and requires no coding skills. This lowers the bar for even low-skilled threat actors to build convincing phishing sites at scale.

“The observed activities confirm that today’s threat actors are actively experimenting with and weaponizing leading GenAI tools to streamline and enhance their phishing capabilities,” the researchers said.

“Platforms like Vercel’s v0.dev allow emerging threat actors to rapidly produce high-quality, deceptive phishing pages, increasing the speed and scale of their operations.”

The development comes as bad actors continue to leverage large language models (LLMs) to aid their criminal activities, building uncensored versions of these models that are explicitly designed for illicit purposes. One such LLM that has gained popularity in the cybercrime landscape is WhiteRabbitNeo, which advertises itself as an “Uncensored AI model for (Dev) SecOps teams.”

“Cybercriminals are increasingly gravitating toward uncensored LLMs, cybercriminal-designed LLMs, and jailbreaking legitimate LLMs,” said Jaeson Schultz, a researcher at Cisco Talos.

“Uncensored LLMs are unaligned models that operate without the constraints of guardrails. These systems happily generate sensitive, controversial, or potentially harmful output in response to user prompts. As a result, uncensored LLMs are perfectly suited for cybercriminal usage.”

This fits a broader shift we’re seeing. Phishing is more AI-driven than ever before. Fake emails, cloned voices, and even deepfake videos are showing up in social engineering attacks. These tools help attackers scale quickly, turning small scams into large, automated campaigns. It’s no longer just about tricking users; it’s about building an entire system of deception.
