Artificial intelligence can enhance research into nuclear molten salt chemistry by quickly and efficiently predicting experimental results, ultimately reducing the time and cost associated with traditional experimental methods.

Imagine stepping into the lab and knowing the results of an experiment before you run it. What about the results of 100 experiments? A thousand? How would that affect your decisions when developing your product? Most of us are familiar with the ability of artificial intelligence (AI) to generate realistic photos, videos, and text conversations, but this power can be harnessed in other ways, such as generating new experimental results.

This predictive power is already widely embraced in fields such as pharmaceuticals and battery research, but nuclear molten salt chemistry still presents an important opportunity for AI integration. Electrochemical experiments in molten salts tend to be very time-consuming and expensive, require multiple pieces of costly equipment, and demand extensive expertise to perform accurately. Now imagine the investment needed to test hundreds or thousands of different salt compositions and elements.

This is where AI comes in. Using past experimental data, researchers can develop AI models that rapidly predict electrochemical responses for a wide variety of experimental configurations. And the best part? Once a model has been trained and validated, responses can be generated in seconds rather than days. This gives researchers a convenient way to estimate countless outcomes before committing significant resources to physical experiments, leading to more informed and efficient experimental choices.

The engine behind prediction

To be confident in your predictions, you need to understand exactly how they are generated. Although machine learning is a vast field, its approaches can generally be divided into three types: supervised, unsupervised, and reinforcement learning. The current research relies on supervised learning, but because each method works differently, the distinction is worth knowing. In supervised learning, the inputs, also known as features, are labeled and paired with targets, which are the quantities you are trying to predict. During training, a supervised network generally follows four specific steps:

- Forward propagation: moves data through the model and returns the predicted output. This step is sometimes called a "forward pass."
- Loss calculation: compares the predictions from the forward pass with the actual results. Mean squared error (MSE) is a common loss in supervised learning.
- Backpropagation: computes the gradient of the loss function with respect to each model parameter to determine how the parameters should be adjusted.
- Optimization: updates the internal parameters to minimize the loss using algorithms such as gradient descent or Adam.
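In equation form, using standard textbook definitions rather than details of any particular model in this work: for $N$ training examples with targets $y_i$, predictions $\hat{y}_i(\theta)$ from a model with parameters $\theta$, and an assumed learning rate $\eta$, the mean squared error loss and a plain gradient descent update are

$$
\mathcal{L}(\theta) = \frac{1}{N}\sum_{i=1}^{N}\bigl(\hat{y}_i(\theta) - y_i\bigr)^2,
\qquad
\theta \leftarrow \theta - \eta\,\nabla_{\theta}\mathcal{L}(\theta)
$$

Backpropagation supplies the gradient $\nabla_{\theta}\mathcal{L}$ by applying the chain rule layer by layer, and optimizers such as Adam replace the fixed step $\eta$ with per-parameter step sizes adapted from running averages of past gradients.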

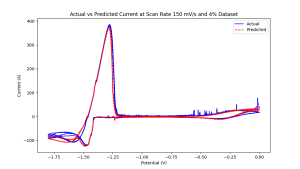

These four steps are repeated many times; one full pass through the training data is called an epoch. Given adequate training time, quality training data, and a well-suited model, the dynamics of the system can be approximated very well. This approach creates data-driven models that differ from traditional physics-based models, because they learn to approximate the underlying physical behavior purely from historical data rather than from simulations of physics equations. For example, Figure 1 shows the true and predicted cyclic voltammogram responses of a neural network trained on data for 4 wt% uranium in LiCl-KCl molten salt at 798 K.

4 wt% uranium in LiCl-KCl molten salt at 798 K.
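The four steps and the epoch loop can be made concrete with a small NumPy sketch. It is a minimal illustration, not the network used in this work: the one-hidden-layer architecture, tanh activation, learning rate, and the synthetic sine-shaped target standing in for a measured signal are all assumptions. Because the whole dataset is used as a single batch, each pass through the loop is exactly one epoch.

```python
import numpy as np


def train_mlp(x, y, hidden=16, lr=0.05, epochs=2000, seed=0):
    """Train a one-hidden-layer network with full-batch gradient descent on MSE.

    Illustrative sketch only; sizes and hyperparameters are assumptions.
    """
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 1.0, (x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, 1))
    b2 = np.zeros(1)
    n = len(x)
    losses = []
    for _ in range(epochs):  # full batch, so one iteration == one epoch
        # 1. Forward propagation: input -> hidden layer -> output
        h = np.tanh(x @ w1 + b1)
        y_hat = h @ w2 + b2
        # 2. Loss calculation: mean squared error
        err = y_hat - y
        losses.append(float(np.mean(err ** 2)))
        # 3. Backpropagation: chain rule from the loss back to every parameter
        d_yhat = 2.0 * err / n
        g_w2 = h.T @ d_yhat
        g_b2 = d_yhat.sum(axis=0)
        d_h = (d_yhat @ w2.T) * (1.0 - h ** 2)  # derivative of tanh
        g_w1 = x.T @ d_h
        g_b1 = d_h.sum(axis=0)
        # 4. Optimization: plain gradient descent step
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2
    return (w1, b1, w2, b2), losses


def predict(params, x):
    """Forward pass only, using trained parameters."""
    w1, b1, w2, b2 = params
    return np.tanh(x @ w1 + b1) @ w2 + b2


if __name__ == "__main__":
    x = np.linspace(-1, 1, 200).reshape(-1, 1)
    y = np.sin(3 * x)  # hypothetical stand-in for a measured response
    params, losses = train_mlp(x, y)
    print(f"initial MSE {losses[0]:.3f}, final MSE {losses[-1]:.3f}")
```

In practice a library such as PyTorch would handle the backpropagation step automatically; writing it out by hand simply shows what each of the four steps does.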

This four-step process is at the core of supervised learning, and there are many ways to implement it. The simplest is a multilayer perceptron (MLP), in which information flows forward in one direction, from the inputs through one or more hidden layers to the final output, which is the prediction. Sequential or time-series data is more complex, and in those cases recurrent neural networks (RNNs) often excel.

Unlike MLPs, these networks can feed information back into themselves, process sequences of data, and effectively "remember" what happened previously. This makes them ideal for tasks where the order of information matters. Common architectures include simple RNNs, long short-term memory networks (LSTMs), and gated recurrent unit networks (GRUs). The choice of architecture is primarily determined by the type of data being processed and the goals of the project itself.
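The "memory" of a simple RNN comes from a hidden state that is updated at every time step from both the current input and the previous state. The NumPy sketch below shows only the forward pass of a simple (Elman-style) RNN; the weight sizes, random initialization, and the two-feature input sequence are hypothetical choices for illustration. LSTMs and GRUs keep the same loop but add learned gates that control what is stored and forgotten.

```python
import numpy as np


def rnn_forward(xs, w_xh, w_hh, b_h):
    """Run a simple RNN over a sequence and return every hidden state.

    xs has shape (T, n_in): one row of input features per time step.
    """
    h = np.zeros(w_hh.shape[0])
    states = []
    for x_t in xs:
        # The new state mixes the current input with the previous state,
        # which is how earlier steps influence later outputs.
        h = np.tanh(x_t @ w_xh + h @ w_hh + b_h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
n_in, n_hidden, T = 2, 8, 50
w_xh = rng.normal(0.0, 0.5, (n_in, n_hidden))
w_hh = rng.normal(0.0, 0.5 / np.sqrt(n_hidden), (n_hidden, n_hidden))
b_h = np.zeros(n_hidden)
xs = rng.normal(size=(T, n_in))  # hypothetical sequence, e.g. a sampled potential sweep
states = rnn_forward(xs, w_xh, w_hh, b_h)
```

Feeding the same inputs in reverse order produces a different final state, which is the order sensitivity an MLP lacks.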

How VCU puts theory into practice

At VCU, our team is actively embracing this AI-driven approach, leveraging powerful computational resources and advanced coding libraries to develop and train multiple neural network models on real experimental data. However, a major hurdle in this specialized field is the inherent scarcity of high-quality electrochemical data. This hurdle shaped a key part of the research design: we decided to investigate how effectively models can learn and generalize from a limited dataset.

To investigate this, we used historical data from a range of experimental configurations to train several neural network architectures on datasets of different sizes. This allowed us to determine the minimum amount of data required to produce reliable results, as well as which models perform best when data is scarce. We also challenged the networks with incomplete inputs to see whether accurate electrochemical responses could be generated from non-ideal information. Initial results consistently showed that neural networks can approximate electrochemical responses well, even with limited or incomplete data.
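One common way to study how much data a model needs is a learning curve: train on progressively larger subsets and score every fit against the same held-out set. The sketch below is only an illustration of that procedure. It uses a cubic least-squares fit (`np.polyfit`) as a simple stand-in for a neural network, and synthetic noisy data in place of electrochemical measurements; both substitutions are assumptions, not the models or data used at VCU.

```python
import numpy as np


def learning_curve(x, y, x_test, y_test, sizes, degree=3, seed=0):
    """Return held-out RMSE for a model trained on nested random subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(x))
    rmse = {}
    for n in sizes:
        idx = order[:n]  # each larger subset contains the smaller ones
        coeffs = np.polyfit(x[idx], y[idx], degree)
        pred = np.polyval(coeffs, x_test)
        rmse[n] = float(np.sqrt(np.mean((pred - y_test) ** 2)))
    return rmse


rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, 500)
y = np.sin(3 * x) + rng.normal(0, 0.1, x.size)  # synthetic noisy "measurements"
x_test = np.linspace(-1, 1, 200)
y_test = np.sin(3 * x_test)
curve = learning_curve(x, y, x_test, y_test, sizes=[5, 20, 100, 500])
print(curve)
```

Repeating the same loop for several model types on the same subsets gives a direct comparison of which one degrades least as data becomes scarce.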

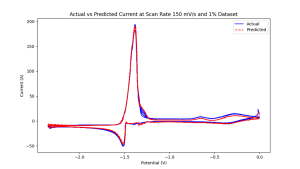

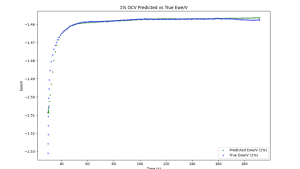

Based on these initial findings, comprehensive electrochemical simulations of UCl3 were performed at two different weight concentrations, and several AI models were deployed to predict the full range of electrochemical responses. The initial tests produced excellent results for both cyclic voltammetry and open circuit potential data. Figure 2 shows one such example for 1 wt% UCl3 in LiCl-KCl molten salt, illustrating how accurately the model can approximate these electrochemical responses.

LiCl-KCl molten salt at 748 K.

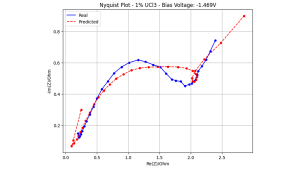

Despite this success with cyclic voltammetry and open circuit potential, another important technique, electrochemical impedance spectroscopy (EIS), still presents several challenges. Figure 3 illustrates this point with a Nyquist plot of true and generated data that shows a non-ideal match between the predicted and actual responses.

Impedance spectroscopy data for 1 wt% uranium in LiCl-KCl molten salt at 748 K.

Opening the path to future innovation

There has been considerable progress, but much remains to be done. Our AI models show immense promise in predicting the responses of electrochemical techniques such as cyclic voltammetry and open circuit potential, but other areas remain active frontiers. Electrochemical impedance spectroscopy presents a major challenge because of its complex, frequency-dependent response. Learning to predict it requires more sophisticated modeling and extensive high-quality training data. By expanding prediction capabilities across this wide range of methods, we aim to further reduce the need for costly and time-consuming physical experiments, allowing researchers to explore vast digital parameter spaces.
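To see why impedance data is harder to predict, note that each EIS measurement is a complex number that changes with frequency, so a model must get both the real and imaginary parts right across many decades. The sketch below computes the impedance of a simplified Randles equivalent circuit (a solution resistance in series with a charge-transfer resistance in parallel with a double-layer capacitance) and returns the coordinates used in a Nyquist plot. The circuit and its component values are illustrative assumptions, not parameters fitted to the LiCl-KCl data; real molten-salt spectra often need additional elements such as a Warburg diffusion term.

```python
import numpy as np


def randles_impedance(freqs_hz, r_s, r_ct, c_dl):
    """Complex impedance of R_s in series with (R_ct parallel to C_dl)."""
    omega = 2 * np.pi * np.asarray(freqs_hz, dtype=float)
    z_parallel = r_ct / (1 + 1j * omega * r_ct * c_dl)
    return r_s + z_parallel


freqs = np.logspace(-2, 5, 50)  # 10 mHz to 100 kHz
# Hypothetical component values: ohms, ohms, farads
z = randles_impedance(freqs, r_s=0.5, r_ct=2.0, c_dl=1e-3)
# Nyquist coordinates: real part on the x-axis, negative imaginary part on the y-axis
nyquist_x, nyquist_y = z.real, -z.imag
```

For this circuit the Nyquist plot is a semicircle running from about R_s at high frequency to R_s + R_ct at low frequency, with a peak height of R_ct / 2; small errors in predicted phase distort that shape, which is one reason a close match is harder to achieve than for a single current or potential trace.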

In the future, we would like to extend this predictive capability beyond single-element molten salt systems. By tackling the complexity of multicomponent molten salts, we can unlock a more complete understanding of the interactions occurring within these critical systems and extract important system parameters from them, such as thermodynamic and transport properties.

Furthermore, we would like to extend this predictive power to other important techniques such as laser-induced breakdown spectroscopy (LIBS). This method holds immense promise in the world of nuclear molten salts and is a major target for AI integration because it can provide rapid insight into elemental composition.

Ultimately, our work aims to dramatically accelerate the fundamental understanding and development of molten salt technology, enabling researchers to quickly and efficiently identify the most impactful experiments and accelerate real-world applications.


This article will also be featured in the 23rd edition of Quarterly Publication.
