The Achilles project helps organizations translate the principles of the EU AI Act into lighter, clearer and safer machine learning across domains.

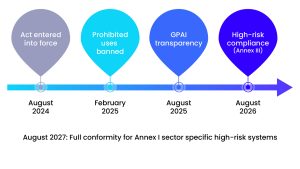

The EU AI Act is in countdown mode. The first prohibitions and transparency obligations took effect in February and early August 2025, respectively, and the full obligations for high-risk systems are expected to apply from August 2026 (one year later for those covered by Annex I). Achilles, a €900,000 Horizon Europe project launched last November, was set up to help innovators close their compliance gaps without sacrificing performance or sustainability.

The first article, in Innovation Platform Issue 21, sketched the ambition: human-centered, energy-efficient machine learning (ML) guided by a legal and ethical “compass.” Eight months later, the interdisciplinary consortium is moving from vision to execution.

Two deliverables mark legal and ethical milestones: D4.1 Legal & Ethical Mapping and D4.4 Ethical Guidelines, which turn hundreds of pages of legislation into practical recommendations. After a round of technical requirements gathering and a round of legal workshops, the four pilot use cases have refined their problem statements and evaluation frameworks. The Achilles Integrated Development Environment (IDE) is beginning to take shape, promising easier and more responsible AI development, with the transparent documentation that compliance and audit workflows depend on.

Real-world use cases

Achilles is tested through four pilots, spanning healthcare, security, creativity and quality control.

Montaser AWAL, research director at IDNOW, said: “Achilles represents a major step forward in building privacy- and regulation-compliant AI models. Building reliable and accurate AI models for identity verification requires less real data to improve the quality and robustness of the algorithm.”

Marco Cuomo of Cuomo IT Consulting said: “Achilles offers a set of tools and reusable frameworks that allow pharma-driven, AI-based projects to focus on domain-specific expertise.”

Nuno Gonçalves of the Institute of Systems and Robotics at the University of Coimbra said:

From the rulebook to reality

The EU AI Act called for a rigorous legal framework early in the project, one that guides design choices across Achilles and its use cases. The deliverable “D4.1 Legal & Ethical Mapping” aligns the Achilles IDE and the validation pilots with the norms relevant to each (AI Act, GDPR, Data Act, Medical Device Regulation). Its companion deliverable, “D4.4 Ethics Guidelines,” turns that map into checklists, consent templates, and bias audit scripts.

D4.1 presents an initial legal analysis of the European and international legal and ethical frameworks relevant to the project. Beyond its focus on fundamental rights, AI regulation (centered on the EU AI Act), and privacy and data protection under the GDPR, it also examines data governance (the Data Act, the Data Governance Act, and the common European data spaces), information society services (the Digital Services Act and the Digital Markets Act), cybersecurity (the NIS2 Directive, the EU Cybersecurity Act, and the Cyber Resilience Act), and sector-specific legal requirements (the Medical Device Regulation and intellectual property law). Ethical considerations conclude the analysis, including the importance of informed consent for study participants, the accuracy challenges associated with facial recognition and verification, algorithmic bias, hallucinations in generative AI, and broader concerns about reliability. Together, these elements give partners an initial checklist of “what applies and why” that can be refined as technical details emerge during the project.

To ensure that the rules on paper survive their first contact with AI researchers and engineers, KU Leuven’s CiTiP team ran an internal workshop in June 2025. Each pilot completed a comprehensive legal questionnaire and was able to dig into specific decisions and gaps. This first workshop was limited to project partners and gave them the opportunity to ask questions and better define the applicability of the various legal frameworks and requirements. The workshop results directly inform the next iterations of the use case definitions and support the pilot execution phase. Similar workshops, including public ones, will continue throughout the project.

Although the identified risk levels may change as the pilot designs mature, we share here a tentative view of how the AI Act applies to each use case.

The Achilles IDE itself is considered a limited-risk AI system under the AI Act. This means that users must be notified that they are interacting with software rather than a human. As with the pilots, the GDPR also applies whenever the project processes personal data, especially biometric or health data. Because these data are classified as “special categories,” processing them is only allowed under certain exceptions, such as explicit consent or scientific research purposes, and may trigger a mandatory data protection impact assessment (DPIA).

Although the initial analysis suggests that the Data Act (DA), Data Governance Act (DGA), Digital Services Act (DSA), and Digital Markets Act (DMA) may be less relevant to the project, the cybersecurity requirements of the EU Cyber Resilience Act may apply, particularly in the healthcare use cases. Finally, ethical considerations such as algorithmic bias, hallucination, automation bias, and overall reliability should be addressed carefully. These concerns will be refined and integrated further as the project progresses and more information becomes available.

The project follows an iterative compliance loop that incorporates legal, ethical and technical requirements throughout the AI development lifecycle. The loop consists of four phases.

1. Map, identify and analyze applicable norms, obligations, and ethical guardrails.
2. Design and build trustworthy, transparent, and compliant AI systems with the support of the Achilles IDE.
3. Run pilot tests in real-world settings and evaluate technical performance and compliance outcomes, validated through measurable KPIs.
4. Improve and update the legal and ethical mappings, upgrade the support tools, and restart the loop based on pilot feedback and developments.
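As an illustration only, the four-phase loop can be pictured as a small state machine that returns to the mapping phase after each refinement. The sketch below is not part of the Achilles tooling: the names `Phase`, `ComplianceLoop`, and `advance`, and the example notes, are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    # Hypothetical labels for the four phases described in the article.
    MAP = "map applicable norms and ethical guardrails"
    BUILD = "design and build with IDE support"
    PILOT = "pilot in real-world settings and measure KPIs"
    REFINE = "update mappings and tools from pilot feedback"


# Phases in the order the article lists them; after the last one the loop restarts.
PHASE_ORDER = [Phase.MAP, Phase.BUILD, Phase.PILOT, Phase.REFINE]


@dataclass
class ComplianceLoop:
    """Tracks the current phase of a (hypothetical) pilot and the number of passes."""
    phase: Phase = Phase.MAP
    iteration: int = 1
    log: list = field(default_factory=list)

    def advance(self, note: str) -> Phase:
        """Record a note for the current phase, then move to the next phase."""
        self.log.append((self.iteration, self.phase.name, note))
        idx = PHASE_ORDER.index(self.phase)
        if idx == len(PHASE_ORDER) - 1:
            # Refinement is done: start a new pass from the mapping phase.
            self.iteration += 1
            self.phase = PHASE_ORDER[0]
        else:
            self.phase = PHASE_ORDER[idx + 1]
        return self.phase


# Example pass through the loop with made-up notes.
loop = ComplianceLoop()
for note in ["AI Act and GDPR mapped", "prototype built", "KPIs measured", "mapping revised"]:
    loop.advance(note)

print(loop.phase.name, loop.iteration)  # MAP 2
```

Keeping a log entry per phase mirrors the article's emphasis on documented decisions: each pass leaves a record that a later audit could inspect.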

Compliance made easier: the Achilles IDE

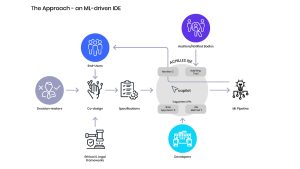

Today, teams aiming to build EU-compliant AI have to juggle regulations, spreadsheets and 300-page PDFs. The Achilles Integrated Development Environment (IDE) combines specifications, code, documentation, and evidence in a single workspace driven by a specification-first approach. This paradigm brings the rigour of well-documented software engineering. Starting from business, legal and ethical requirements, the IDE scaffolds code and evidence and guides users with a powerful copilot.

The Achilles IDE serves as the foundation for integrating research findings from the trustworthy AI field into a consistent, compliant, and efficient development pipeline.

How it works – Hypothetical Project

1. Define the project goals, involving as many stakeholders as possible through a set of (value-sensitive) co-design best practices, processes and tools.

2. Based on the project description and specifications, the IDE copilot makes recommendations at various stages of the project’s lifecycle, from compliance checklists to technical tools (such as specific bias auditing methods applied to medical images).
3. The framework’s innovative standard operating procedure language (SOP LANG) enables flexible, controllable workflows in which human and AI agents collaborate. All manager and developer decisions are documented for greater transparency.
4. The IDE monitors training and supports transparent, semi-automated documentation such as model cards.
5. After deployment, the system continues to monitor for drift and performance degradation that could call for retraining.
6. The IDE can export evidence based on the semi-automated documentation, enabling a seamless audit trail and bridging the gap between decision makers, developers, end users, and notified bodies.
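To make the post-deployment monitoring idea concrete, here is a minimal sketch of one possible check: compare recent accuracy against a baseline recorded at release and flag retraining when the drop exceeds a tolerance. The article does not describe how the Achilles IDE detects drift, so the function `needs_retraining`, the `max_drop` threshold, and all numbers below are assumptions chosen purely for illustration.

```python
from statistics import mean


def needs_retraining(baseline_scores, recent_scores, max_drop=0.05):
    """Return True when recent mean accuracy falls more than max_drop below baseline.

    This is a deliberately simple stand-in for drift monitoring; real systems
    often also track input distribution shift, not only performance.
    """
    if not baseline_scores or not recent_scores:
        raise ValueError("both score lists must be non-empty")
    drop = mean(baseline_scores) - mean(recent_scores)
    return drop > max_drop


# Made-up accuracy values for illustration.
baseline = [0.92, 0.91, 0.93]   # measured at release
stable = [0.91, 0.92, 0.90]     # small fluctuation
degraded = [0.84, 0.83, 0.85]   # clear performance loss

print(needs_retraining(baseline, stable))    # False
print(needs_retraining(baseline, degraded))  # True
```

A check like this could feed the exported evidence: each evaluation, its inputs, and the resulting decision would be logged alongside the model card.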

Looking ahead

As the project nears the end of its first year, the use cases are finalizing their pilot definitions, including an evaluation framework for assessing the impact of Achilles on their workflows. Technical work has reached full speed, with various supporting toolkits (bias, explainability, robustness, etc.) and the implementation of the Achilles IDE under way.

Interdisciplinary alignment workshops remain important. New events are planned to accompany the ongoing research, some of them open to external participants. These sessions will explore topics such as explainability, human oversight, bias mitigation, and compliance verification.

Behind the scenes, Achilles is partnering with the other projects funded under the CL4-2024-DATA-01-01 call, “AI-driven data operations and compliance technologies”: Achieve, Identification, and Data Patch. The goal is to build synergies through joint workshops, shared open source tools, and cross-fertilization.

The stakes are clear. By the time the AI Act is fully enforceable, Achilles aims to prove that trustworthy, green AI is not a bureaucratic burden, but a competitive advantage for European innovators.

Disclaimer:

This project has received funding from the European Union’s Horizon Europe research and innovation programme under Grant Agreement No. 101189689.

This article will also be featured in the 23rd edition of Quarterly Publication.
