Microsoft has revealed details of a new side-channel attack targeting remote language models. Under certain circumstances, the attack could allow a passive attacker who can observe network traffic to glean the topics of a user's conversations with a model, despite encryption.

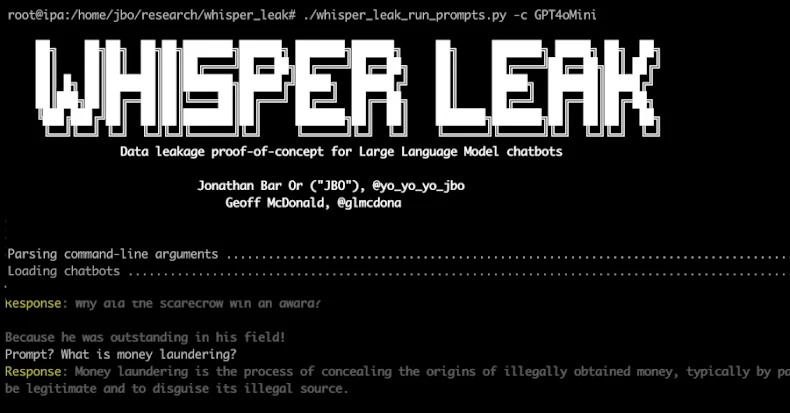

The company noted that this leakage of data exchanged between humans and language models in streaming mode could pose a significant risk to the privacy of user and corporate communications. This attack is codenamed “Whisper Leak.”

“A cyber attacker in a position to observe encrypted traffic (for example, a nation-state attacker at the Internet service provider layer, someone on a local network, or someone connected to the same Wi-Fi router) could use this cyber attack to infer whether a user’s prompts are about a particular topic,” said security researchers Jonathan Bar Or and Geoff McDonald, and the Microsoft Defender security research team.

In other words, an attacker who can observe the encrypted TLS traffic between a user and an LLM service can extract the sequence of packet sizes and timings, then feed it to a trained classifier to infer whether the conversation's topic matches a sensitive category of interest.
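A minimal sketch of the feature-extraction step, assuming a capture tool has already reduced the TLS session to (arrival time, record size) pairs. The `Record` type, the `extract_features` helper, and the sample values are hypothetical illustrations, not Microsoft's code:

```python
from dataclasses import dataclass

@dataclass
class Record:
    """One observed encrypted record: arrival time in seconds, size in bytes."""
    timestamp: float
    size: int

def extract_features(records: list[Record]) -> tuple[list[int], list[float]]:
    """Reduce a captured response to the attack's two inputs: the sequence of
    encrypted record sizes and the inter-arrival times between records."""
    ordered = sorted(records, key=lambda r: r.timestamp)
    sizes = [r.size for r in ordered]
    gaps = [later.timestamp - earlier.timestamp
            for earlier, later in zip(ordered, ordered[1:])]
    return sizes, gaps

# Hypothetical capture of one streamed response (values are made up).
capture = [Record(0.000, 145), Record(0.042, 151), Record(0.090, 139), Record(0.131, 160)]
sizes, gaps = extract_features(capture)
print(sizes)                        # [145, 151, 139, 160]
print([round(g, 3) for g in gaps])  # [0.042, 0.048, 0.041]
```

The two sequences are what a classifier would consume. A real capture would also need TCP reassembly and filtering down to the LLM service's connection, which this sketch skips.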

Streaming in large language models (LLMs) is a technique that lets users receive output incrementally as the model generates its response, instead of waiting for the entire output to be computed. It is an important feedback mechanism, because some responses can take time depending on the complexity of the prompt or task.
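To illustrate why streaming matters here, the sketch below contrasts a streaming endpoint that emits one message per token with a non-streaming one that returns a single message. The SSE-style `data:` framing, the `delta` and `content` fields, and the `[DONE]` marker are assumptions loosely modeled on common streaming APIs, not any specific provider's format:

```python
import json
from typing import Iterator

def stream_response(tokens: list[str]) -> Iterator[str]:
    """Streaming mode: emit one SSE-style message per generated token,
    so the client can render text as soon as each piece is ready."""
    for tok in tokens:
        yield "data: " + json.dumps({"delta": tok}) + "\n\n"
    yield "data: [DONE]\n\n"  # hypothetical end-of-stream marker

def full_response(tokens: list[str]) -> str:
    """Non-streaming mode: one message sent only after generation finishes."""
    return json.dumps({"content": "".join(tokens)})

tokens = ["Streaming", " lets", " users", " read", " early"]
messages = list(stream_response(tokens))
print(len(messages))               # 6: five token messages plus the end marker
print([len(m) for m in messages])  # message sizes vary with each token's text
print(full_response(tokens))
```

Because each streamed message is typically encrypted and sent on its own, an observer sees a sequence of sizes and gaps rather than one opaque blob.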

The side-channel attack demonstrated by Microsoft is significant, not least because it works even though communications with artificial intelligence (AI) chatbots are encrypted with HTTPS, which keeps the contents of the exchange confidential and protects them from tampering.

Many side-channel attacks against LLMs have been devised in recent years. These include inferring the length of individual plaintext tokens from the size of encrypted packets in streamed model responses, and carrying out input theft (aka InputSnatch) by exploiting timing differences introduced by caching in LLM inference.
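The token-length side channel can be sketched as simple arithmetic: ciphers such as AES-GCM add a fixed-size tag and no padding, so an encrypted record is the plaintext plus a constant overhead, and subtracting that constant recovers the plaintext length. The framing template and the 22-byte overhead (5-byte record header, 1-byte inner content type, 16-byte tag, as in TLS 1.3 with AES-GCM and no record padding) are illustrative assumptions; HTTP/2 framing and record coalescing are ignored:

```python
import json

# Hypothetical framing: each streamed token travels in its own message like this.
TEMPLATE = 'data: {"delta": %s}\n\n'
# Assumed constant per-record overhead in bytes (TLS 1.3 + AES-GCM, no padding:
# 5-byte header + 1-byte inner content type + 16-byte authentication tag).
TLS_OVERHEAD = 22

def encrypted_size(token: str) -> int:
    """Size an on-path observer would see for the record carrying `token`.
    With no padding, this grows byte-for-byte with the plaintext."""
    plaintext = TEMPLATE % json.dumps(token)
    return len(plaintext.encode("utf-8")) + TLS_OVERHEAD

def infer_token_length(record_size: int) -> int:
    """Invert encrypted_size by subtracting the fixed framing and overhead.
    Exact only for tokens json.dumps does not escape (plain ASCII, no quotes)."""
    fixed = len((TEMPLATE % '""').encode("utf-8")) + TLS_OVERHEAD
    return record_size - fixed

observed = [encrypted_size(t) for t in ["The", " model", " streams"]]
print([infer_token_length(s) for s in observed])  # [3, 6, 8]
```

Whisper Leak goes further than this per-token arithmetic: it feeds whole sequences of sizes and timings to a classifier, which is why grouping several tokens per packet does not by itself defeat it.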

According to Microsoft, Whisper Leak builds on these findings by exploring whether “the sequence of encrypted packet sizes and inter-arrival times in a streaming language model’s response contains enough information to classify the topic of the initial prompt, even when the response is streamed in groups of tokens.”

To test this hypothesis, the Windows maker said it trained a binary classifier as a proof of concept, using three different machine learning models (LightGBM, Bi-LSTM, and BERT), to distinguish prompts on a specific topic from the rest (i.e., noise).
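The classifiers Microsoft used (LightGBM, Bi-LSTM, BERT) require third-party libraries, so the sketch below swaps in a much simpler technique, a nearest-centroid classifier over four summary features, purely to show the shape of the binary task: label 1 for the target topic, 0 for noise. The feature choice, the class, and the synthetic traces are all hypothetical:

```python
import statistics

def summarize(sizes: list[int], gaps: list[float]) -> list[float]:
    """Collapse variable-length size/timing sequences into a fixed-length vector."""
    return [
        statistics.mean(sizes),
        statistics.pstdev(sizes),
        statistics.mean(gaps) if gaps else 0.0,
        float(len(sizes)),
    ]

class NearestCentroid:
    """Toy binary classifier (1 = target topic, 0 = noise); a stand-in for
    the LightGBM, Bi-LSTM, and BERT models named in the research."""

    def fit(self, X: list[list[float]], y: list[int]) -> "NearestCentroid":
        cols = list(zip(*X))
        # Standardize each feature so large byte counts do not dominate distances.
        self.mu = [statistics.mean(c) for c in cols]
        self.sd = [statistics.pstdev(c) or 1.0 for c in cols]
        Z = [self._scale(x) for x in X]
        self.centroids = {}
        for label in (0, 1):
            rows = [z for z, t in zip(Z, y) if t == label]
            self.centroids[label] = [statistics.mean(c) for c in zip(*rows)]
        return self

    def _scale(self, x: list[float]) -> list[float]:
        return [(v - m) / s for v, m, s in zip(x, self.mu, self.sd)]

    def score(self, x: list[float]) -> float:
        """Positive when x is closer to the target-topic centroid than to noise."""
        z = self._scale(x)
        d = {lab: sum((a - b) ** 2 for a, b in zip(z, c))
             for lab, c in self.centroids.items()}
        return d[0] - d[1]

    def predict(self, x: list[float]) -> int:
        return 1 if self.score(x) > 0 else 0

# Synthetic traces for illustration only; these are not measurements.
target = [([150, 160, 155, 170], [0.04, 0.05, 0.04]),
          ([158, 165, 149, 172, 160], [0.05, 0.04, 0.05, 0.04])]
noise = [([90, 95, 100], [0.02, 0.03]),
         ([85, 99, 92, 88], [0.03, 0.02, 0.03])]
X = [summarize(s, g) for s, g in target + noise]
y = [1] * len(target) + [0] * len(noise)
clf = NearestCentroid().fit(X, y)
print(clf.predict(summarize([152, 168, 161], [0.05, 0.04])))  # 1
print(clf.predict(summarize([88, 94, 97], [0.03, 0.02])))     # 0
```

Unlike this toy, sequence models such as a Bi-LSTM can consume the raw size and timing sequences directly instead of summary statistics.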

The company found that classifiers trained against many models from Mistral, xAI, DeepSeek, and OpenAI achieved scores above 98%, meaning an attacker monitoring random conversations with these chatbots could reliably flag that particular topic.
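The article does not name the metric behind the 98% figure. Assuming it is a ranking metric such as area under the precision-recall curve (AUPRC), which suits settings where the target topic is rare among monitored conversations, a minimal average-precision computation looks like this (the function name and sample inputs are illustrative):

```python
def average_precision(labels: list[int], scores: list[float]) -> float:
    """Approximate AUPRC as average precision: the mean precision measured
    at the rank of each true positive when conversations are sorted by
    classifier score. Tied scores keep their input order in this sketch."""
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    total_pos = sum(labels)
    if total_pos == 0:
        return 0.0
    hits, precision_sum = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / total_pos

print(average_precision([1, 1, 0, 0], [0.9, 0.8, 0.3, 0.1]))  # 1.0
print(average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]))
```

The second call yields 5/6 because one noise conversation outranks the second target conversation; a score near 1.0 means target-topic traffic almost always ranks above noise.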

“Government agencies and internet service providers monitoring traffic to popular AI chatbots could reliably identify users asking questions about specific sensitive topics, such as money laundering, political dissent, or other targets, even if all traffic is encrypted,” Microsoft said.

Whisper Leak attack pipeline

To make matters worse, the researchers found that Whisper Leak's effectiveness improves as attackers collect more training samples over time, which could turn it into a practical threat. Following responsible disclosure, OpenAI, Mistral, Microsoft, and xAI have all deployed mitigations to counter the risk.

“More sophisticated attack models, combined with the richer patterns available in multi-turn conversations and multiple conversations from the same user, mean that cyber attackers with the patience and resources may be able to achieve higher success rates than our initial results suggest.”

One effective countermeasure devised by OpenAI, Microsoft, and Mistral is to add a “random sequence of variable-length text” to each response. This masks the length of each token and renders the side channel moot.
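A minimal sketch of this kind of padding, assuming it is applied to every streamed chunk as an extra JSON field that the client discards. The `padding` field name, the `max_pad` bound, and both helpers are hypothetical; the source does not describe any provider's actual implementation:

```python
import json
import secrets
import string

FILLER_ALPHABET = string.ascii_letters + string.digits

def pad_chunk(delta: str, max_pad: int = 32) -> str:
    """Serialize one streamed chunk with a random-length filler field so the
    encrypted size no longer maps cleanly to the token's length.
    The 'padding' field name and max_pad bound are hypothetical choices."""
    n = secrets.randbelow(max_pad + 1)  # uniform in [0, max_pad]
    filler = "".join(secrets.choice(FILLER_ALPHABET) for _ in range(n))
    return json.dumps({"delta": delta, "padding": filler})

def read_chunk(message: str) -> str:
    """Client side: keep the token text and discard the filler."""
    return json.loads(message)["delta"]

sizes = [len(pad_chunk(" hi")) for _ in range(5)]
print(sizes)                         # varies from run to run for the same token
print(repr(read_chunk(pad_chunk(" hi"))))
```

Random padding that is small relative to typical token lengths only blurs the signal; padding every chunk up to a fixed bucket size is an alternative that trades more bandwidth for a stronger guarantee.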

Microsoft also recommends that users concerned about privacy when talking to AI providers avoid discussing sensitive topics on untrusted networks, use a VPN as an additional layer of protection, use non-streaming modes of LLMs, and switch to providers that have implemented mitigations.

The disclosure coincides with a new evaluation of eight open-weight LLMs from Alibaba (Qwen3-32B), DeepSeek (v3.1), Google (Gemma 3-1B-IT), Meta (Llama 3.3-70B-Instruct), Microsoft (Phi-4), Mistral (Large-2, aka Large-Instruct-2407), OpenAI (GPT-OSS-20b), and Zhipu AI (GLM 4.5-Air), which found them to be highly susceptible to adversarial manipulation, especially in multi-turn attacks.

“These results highlight the systemic inability of current open-weight models to maintain safety guardrails over long interactions,” Cisco AI Defense researchers Amy Chan, Nicholas Conley, Harish Santhanalakshmi Ganesan, and Adam Swandha said in an accompanying paper.

“We assess that alignment strategies and lab priorities significantly influence resilience. Capability-focused models such as Llama 3.3 and Qwen 3 exhibit higher multi-turn susceptibility, while safety-oriented designs such as Google Gemma 3 exhibit more balanced performance.”

These findings show that organizations adopting open-source models can face operational risks if they lack additional security guardrails. Since OpenAI's ChatGPT debuted publicly in November 2022, a growing body of research has uncovered fundamental security weaknesses in LLMs and AI chatbots.

This makes it important for developers to enforce appropriate security controls when integrating these capabilities into their workflows, fine-tune open-weight models to be more robust against jailbreaks and other attacks, conduct regular AI red-team evaluations, and implement strict system prompts tailored to defined use cases.
