AI agents no longer just write code. They run it.

With tools like Copilot, Claude Code, and Codex, you can now build, test, and deploy software end-to-end in minutes. This speed is reshaping engineering, but it's also creating security gaps that most teams don't notice until something breaks.

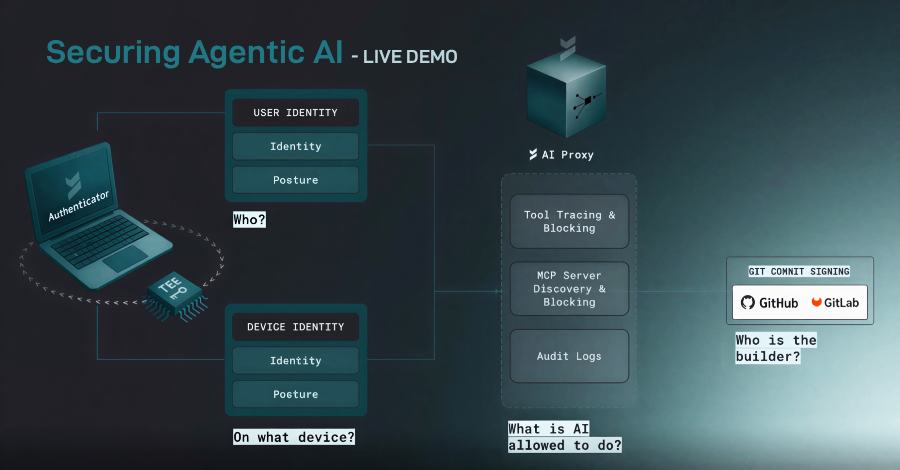

Behind every agent workflow sits a layer few teams actively secure: the Model Context Protocol (MCP). MCP servers quietly determine what AI agents can do, which tools they can call, which APIs they can reach, and which infrastructure they can touch. When this control plane is compromised or misconfigured, agents don't just make mistakes; they act with authority.

Just ask the teams affected by CVE-2025-6514. A single flaw turned a trusted OAuth proxy used by over 500,000 developers into a remote code execution path. No exotic exploit chain. No noisy breach. Just automation doing exactly what it was allowed to do, at scale. The incident made one thing clear: if an AI agent can execute commands, it can also execute attacks.

This webinar is aimed at teams who want to move quickly without relinquishing control.

Secure your spot for a live session ➜

Led by the authors of the OpenID whitepaper "Identity Management for Agentic AI," this session directly addresses the core risks security teams are inheriting from agentic AI deployments. You'll learn how MCP servers actually work in real-world environments, where shadow API keys appear, how privileges quietly spread, and why traditional identity and access models break down when agents act on behalf of users.

Learn:

- What an MCP server is and why it matters more than the model itself
- How a malicious or compromised MCP server turns automation into an attack surface
- Where shadow API keys come from and how to detect and remove them
- How to audit agent actions and enforce policies before deployment
- Practical controls to protect agentic AI without slowing development
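To make "audit agent actions and enforce policies" a little more concrete, here is a minimal sketch of a deny-by-default gate that an MCP client or proxy could run before forwarding a tool call. The `ToolPolicy` class, the tool names, and the log fields are hypothetical illustrations, not part of the MCP specification or any vendor's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ToolPolicy:
    """Hypothetical deny-by-default policy: maps each allowed tool to its permitted argument names."""
    allowed: dict[str, set[str]]
    audit_log: list[dict] = field(default_factory=list)

    def check(self, agent_id: str, tool: str, args: dict) -> bool:
        # A call is permitted only if the tool is explicitly listed and
        # every argument name it uses is on that tool's allowlist.
        permitted = tool in self.allowed and set(args) <= self.allowed[tool]
        # Record every decision, allowed or denied, so agent actions can be audited later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": tool,
            "args": sorted(args),
            "allowed": permitted,
        })
        return permitted


# Hypothetical tools: the agent may read files and run tests, nothing else.
policy = ToolPolicy(allowed={"read_file": {"path"}, "run_tests": set()})
print(policy.check("ci-agent", "read_file", {"path": "README.md"}))    # True
print(policy.check("ci-agent", "shell_exec", {"cmd": "rm -rf /tmp"}))  # False
```

Denied calls are logged too, which is what makes the log useful for audits: an agent probing for tools it was never granted shows up as a run of `"allowed": False` entries rather than disappearing silently.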

Agentic AI is already built into your pipeline. The only question is whether you can see what it’s doing and stop it if it goes too far.

Register for our live webinar and take back control of your AI stack before the next incident occurs.

Register for webinar ➜
