The 2025 Secret Conference brought together the world’s leading mathematicians to test OpenAI’s latest large-scale language model, o4-mini.

Experts at the conference were surprised at how the model’s responses sounded like real mathematicians when performing complex proofs.

you may like

Ono acknowledged that the model may be giving a convincing but potentially inaccurate answer.

“Unfortunately, AI is much better at sounding like it has the right answer than it is at actually getting the answer. Whether it’s right or wrong, AI always looks convincing.”

Terry Tao, UCLA mathematician

Terry Tao, a UCLA mathematician and winner of the prestigious Fields Medal in 2006, told Live Science: “If you’re a terrible mathematician, you’re also going to be a terrible math writer, and you’re going to emphasize the wrong things.” “But AI has broken that signal.”

Understandably, mathematicians are starting to worry that AI will send them a flood of seemingly convincing proofs that actually contain flaws that are difficult for humans to detect.

Tao warned that AI-generated arguments can be mistakenly accepted because they appear rigid.

you may like

“Unfortunately, AI is much better at sounding like it has the right answer than it is at actually coming up with the answer. Right or wrong, AI always looks convincing,” Tao said.

He cautioned against accepting “evidence” from AI. “One of the things we’ve learned from using AI is that if you give it a goal, it will cheat like crazy to achieve that goal,” Tao said.

It may seem almost abstract to ask whether you can really “prove” a highly technical mathematical conjecture if you cannot understand the proof, but the answer can have important implications. After all, if you can’t trust a proof, you can’t develop further mathematical tools and techniques from that foundation.

For example, one of the major open questions in computational mathematics, P vs. NP, essentially asks whether problems whose solutions are easy to see are also easy to find in the first place. If we can prove it, we could transform scheduling and routing, streamline supply chains, accelerate chip design, and even accelerate drug discovery. The flip side of this is that the security of most modern cryptographic systems can be compromised by verifiable evidence. The answers to these questions are not only difficult, but downright dangerous.

Evidence is a social construct

Non-mathematicians may be shocked to learn that mathematical proofs derived by humans have always been, to some extent, a social construct for convincing others in the field that the argument is correct. After all, mathematical proofs are often accepted as truth if other mathematicians analyze them and deem them correct. That is, widely accepted evidence does not guarantee that a statement is irrefutably true. Andrew Granville, a mathematician at the University of Montreal, suspects there are also problems with some of the better-known and more scrutinized human-made mathematical proofs.

There is some evidence for that claim. “There are some well-known papers that are wrong because of minor linguistic issues,” Granville told LiveScience.

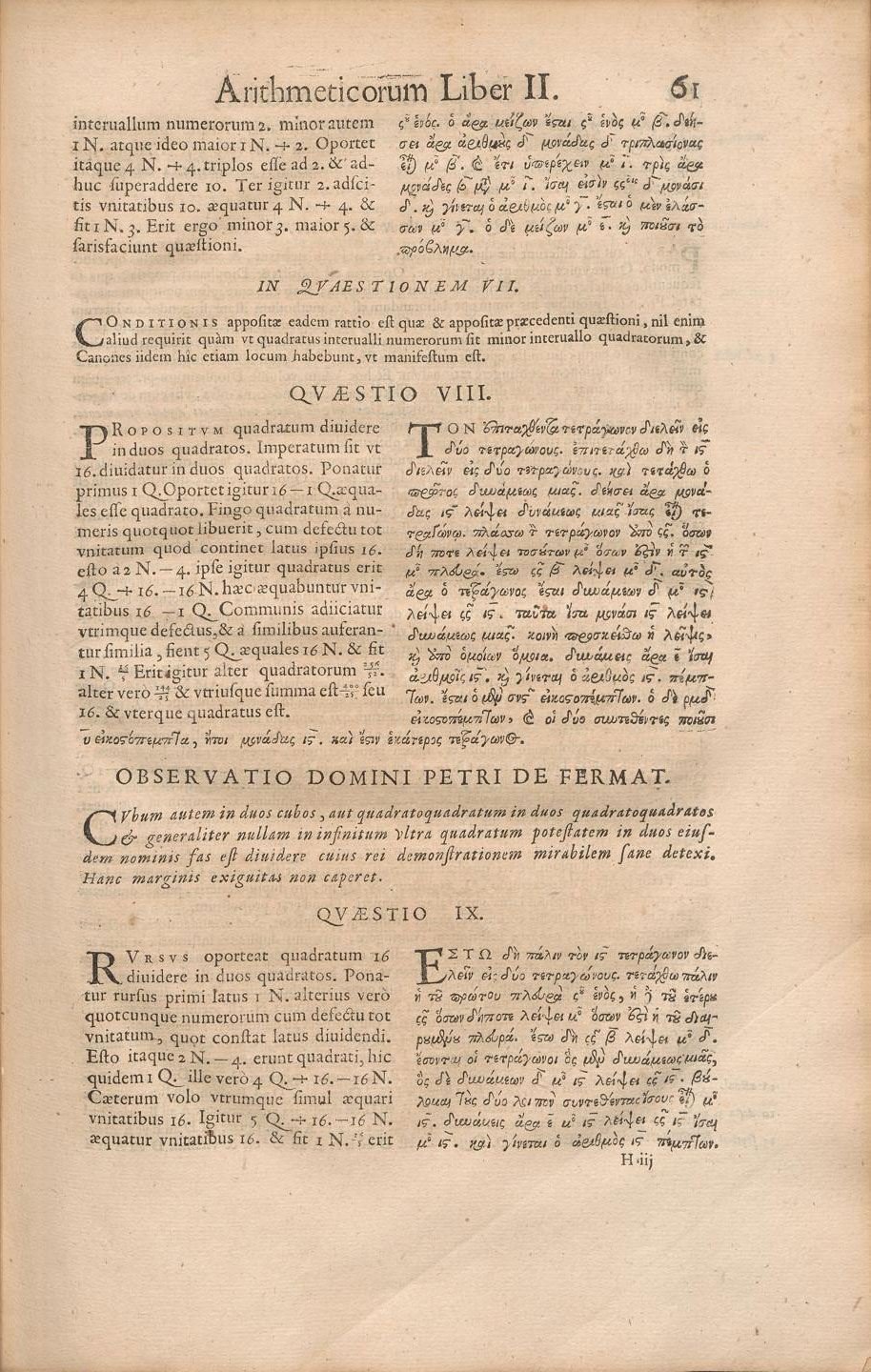

Perhaps the most well-known example is Andrew Wiles’ proof of Fermat’s Last Theorem. This theorem states that there are integers for which one square is equal to another square (such as 32+42=52), but there are no integers for which the same is true for cubes, fourths, or other higher powers.

Wiles famously worked on his research for seven years in almost complete isolation and published his evidence in a much-publicized lecture series at Cambridge in 1993. When Wiles ended his last lecture with the immortal line, “I think I’ll stop there,” the audience erupted into thunderous applause and a bottle of champagne was uncorked to celebrate his accomplishment. Newspapers around the world declared victory for mathematicians over a 350-year-old problem.

However, during the peer review process, reviewers discovered a serious flaw in Wiles’ proof. He spent another year working on the problem and finally solved it.

However, for a short period of time the world believed that the proof was solved, when in fact it was not.

mathematical verification system

To prevent this type of problem, where proofs are accepted when they are actually incorrect, there is a movement to strengthen them with what mathematicians call formal verification languages.

These computer programs, the best-known examples of which are called lean, require mathematicians to convert proofs into a very precise form. The computer then executes every step and applies rigorous mathematical logic to ensure that the argument is 100% correct. When a computer encounters a step in a proof that it doesn’t like, it flags it and doesn’t let go. This coded formalism leaves no room for the linguistic misunderstandings that plagued Granville’s earlier proofs.

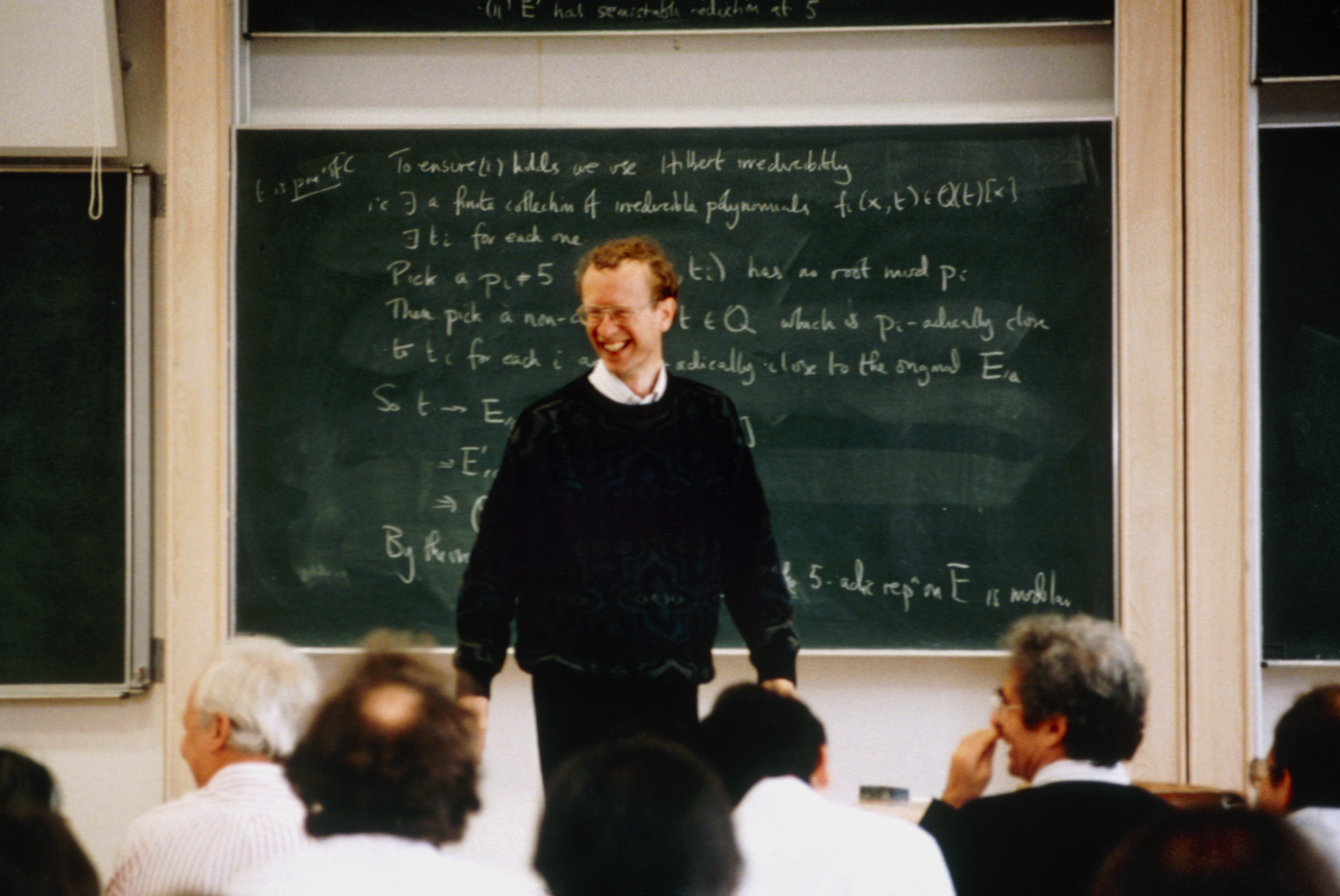

Kevin Buzzard, a mathematician at Imperial College London, is one of the leading proponents of formal verification. “I started this business because I was worried that human proofs were incomplete and wrong, and that we humans weren’t doing a good job of documenting our arguments,” Buzzard told Live Science.

In addition to validating existing human proofs, mathematicians say AI working in conjunction with programs like Lean could be a game-changer.

Regarding the problem of AI sometimes coming up with proofs that seem convincing but end up being wrong, Tao said, “If you force the output of an AI to produce something in a formally verified language, that in principle solves most problems.”

“There are papers in mathematics where no one understands the whole paper. You know, there are papers with 20 authors, and each author understands his part. No one understands the whole. And that’s okay. That’s how it works.”

Kevin Buzzard, Mathematician, Imperial College London

Buzzard agreed. “You want to think that instead of just writing the output of the model to the system, you might be able to convert it to Lean and be able to run it in Lean,” he said. He envisioned a back and forth between Lean and the AI, with Lean pointing out a mistake and the AI trying to fix it.

If AI models can be run in a formal verification language, AI could tackle some of mathematics’ toughest problems by finding connections that are beyond the reach of human creativity, experts told Live Science.

“AI is very good at finding connections between areas of mathematics that humans wouldn’t necessarily think would connect,” Mark Luckenby, a mathematician at the University of Oxford, told Live Science.

Evidence that no one understands?

Taking the idea of formally verified AI proofs to its logical extreme, there is a realistic future in which AI develops “objectively correct” proofs that are too complex for humans to understand.

This is a difficult problem for mathematicians in a completely different way. It raises fundamental questions about the purpose of mathematics as a discipline. After all, what’s the point in proving something that no one understands? And if so, does it mean that the state of human knowledge has further improved?

Of course, the concept that proofs are too long and complex for anyone on the planet to understand is not new to mathematics, Buzzard said.

“There are some papers in mathematics where no one understands the whole paper. You know, there are papers with 20 authors, and each author understands their part,” Buzzard told Live Science. “Nobody knows everything, and that’s okay. That’s how it works.”

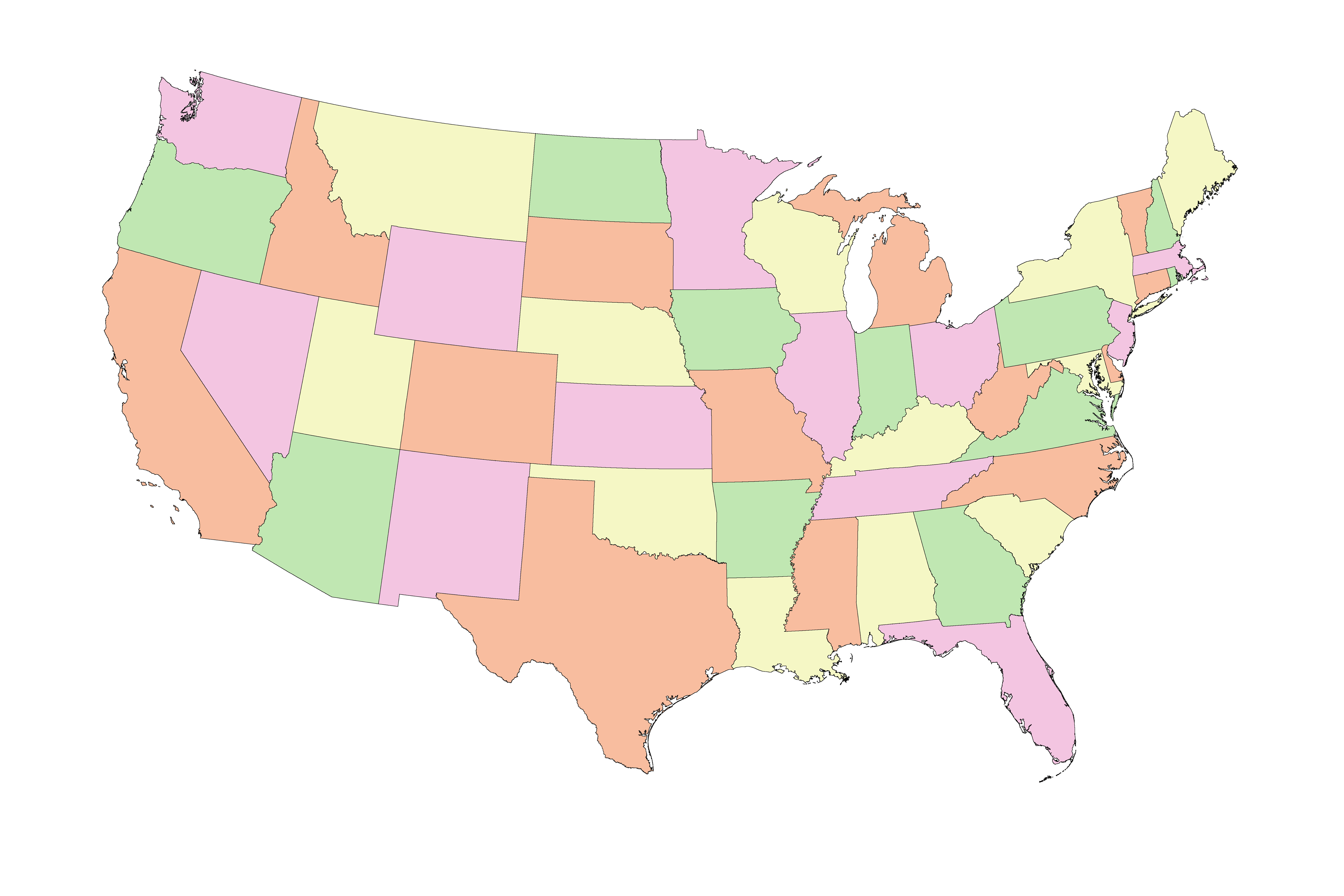

Buzzard also pointed out that proofs that rely on computers to fill in gaps are not new. “We’ve been doing computer-assisted proofs for decades,” Buzzard said. For example, the four-color theorem states that if you are dividing a map into countries or regions, you do not need more than four different colors to shade the map so that adjacent regions are never the same color.

Almost 50 years ago, in 1976, mathematicians divided the problem into thousands of small testable cases and wrote a computer program to test each one. Mathematicians could rest assured that their proofs were correct as long as they were sure that the code they had written was correct. The first computer-aided proof of the four-color theorem was published in 1977. Confidence in the proof built up gradually over the years and strengthened to near universal acceptance when a simpler but still computer-aided proof was created in 1997 and a formally verified, machine-checked proof was published in 2005.

“The four-color theorem was proven on a computer,” Buzzard pointed out. “People were very upset about it. But now it’s accepted. It’s in the textbooks.”

unknown territory

But these examples of computer-assisted proofs and mathematical teamwork feel fundamentally different from AI proposing, adapting, and verifying proofs all on its own. This is probably proof that no human or human team wants to understand.

Whether mathematicians welcome it or not, AI is already reshaping the very nature of proofs. For centuries, the act of generating and verifying proofs has been a human endeavor, an argument created to persuade other human mathematicians. We are approaching a situation where machines can generate sensitive logic, verified by formal systems, that even the best mathematicians cannot follow.

In that future scenario, if that happens, AI would perform every step, from proposal to testing to verifying evidence, “then you’ve won,” Luckenby said. “You proved something.”

However, this approach raises deep philosophical questions. If proofs become comprehensible only to computers, will mathematics remain a human task or will it evolve into something entirely different? And one might wonder what all that means, Luckenby points out.

Source link