Teenagers are trying to figure out where they fit in a world that is changing faster than it did for any previous generation. They are prone to emotional outbursts, overstimulated, and chronically online. And now, an AI company has given them a chatbot designed to keep the conversation going. The results have been devastating.

One company that understands this impact is Character.AI, an AI role-playing startup facing lawsuits and public backlash after at least two teenagers died by suicide following lengthy conversations with AI chatbots on its platform. Character.AI is now making changes to its platform to protect teenagers and children, and these changes may hurt the startup’s revenue.

“The first thing we decided to do as Character.AI was to remove the ability for users under 18 to participate in open-ended chats with AI on our platform,” Character.AI CEO Karandeep Anand told TechCrunch.

An open-ended conversation is the unconstrained back-and-forth that occurs when a user gives a chatbot a prompt and the chatbot responds with a follow-up question, a pattern that experts say is designed to keep users engaged. Anand argues that this type of interaction, where AI acts as a conversation partner and friend rather than a creative tool, is not only dangerous for children but also inconsistent with the company’s vision.

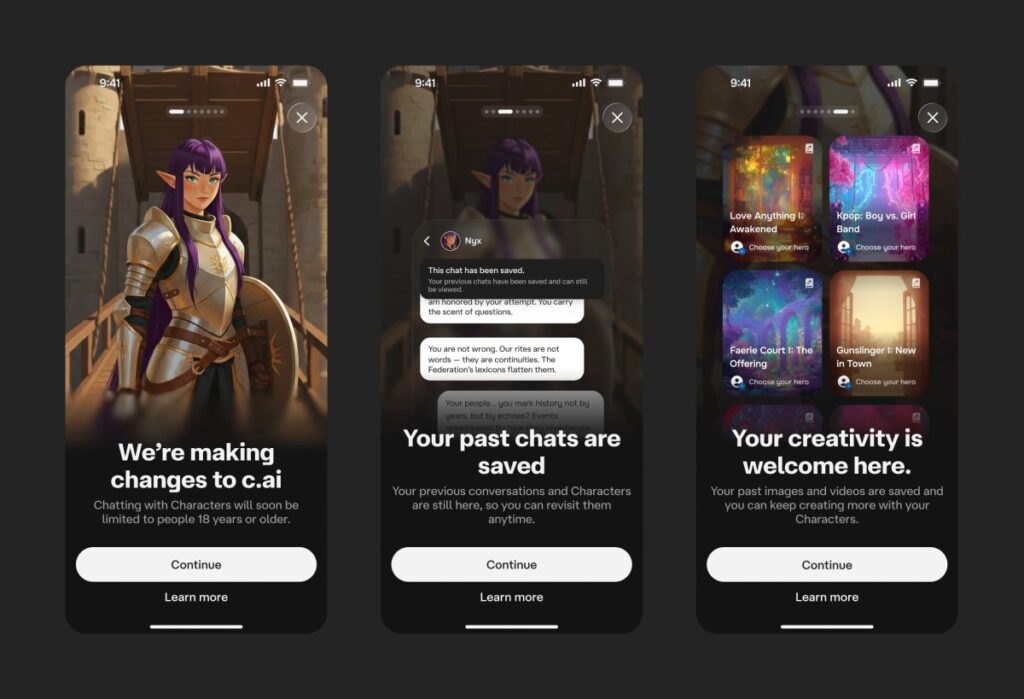

The startup is trying to pivot from an “AI companion” to a “role-playing platform.” Instead of chatting with an AI friend, teens will use prompts to collaboratively build stories and generate visuals. In other words, the goal is to move engagement from conversation to creation.

Character.AI will phase out chatbot access for teens by November 25th, starting with a two-hour limit per day and gradually reducing it until it reaches zero. To enforce the ban for users under 18, the platform will deploy in-house age verification tools that analyze user behavior, as well as third-party tools like Persona. If those tools fail, Character.AI will use facial recognition and ID checks to verify age, Anand said.

The move follows other teen protections that Character.AI has implemented, including parental insight tools, filtered characters, limits on romantic conversations, and time-spent notifications. Anand told TechCrunch that the company has lost much of its under-18 user base because of those earlier measures, and he expects the new changes to be similarly unpopular.

“There’s no doubt that many teenage users will probably be disappointed, so we expect to see more churn in the future,” Anand said. “It’s hard to speculate, but will they all leave completely, or will some of our users migrate to the new experience we’ve been building for the past almost seven months?”

Character.AI recently released several new entertainment-focused features as part of its efforts to transform its platform from a chat-centric app to a “full-fledged content-driven social platform.”

In June, Character.AI launched AvatarFX, a video generation model that turns images into animated videos; Scenes, interactive preset storylines that let users step into a story with their favorite characters; and Streams, a feature that allows dynamic interaction between any two characters. In August, Character.AI launched Community Feed, a social feed where users can share characters, scenes, videos, and other content they create on the platform.

Character.AI apologized for the changes in a statement to users under 18.

“We know that most of you are using Character.AI to enhance your creativity within our content rules,” the statement reads. “We are not taking this step to remove open-ended character chat lightly, but we believe this is the right action given the questions being raised about how teens can and should interact with this new technology.”

“We have no intention of shutting down apps for those under 18,” Anand said. “The reason we’re only shutting down open-ended chat for under-18s is because we want under-18s to migrate to these other experiences and to see those experiences improve over time. So we’re focusing on AI games, AI short videos, AI storytelling in general. That’s the big bet we’re making to win back under-18s if they leave.”

Anand acknowledged that some teens may flock to other AI platforms, such as OpenAI’s, that allow them to have open-ended conversations with chatbots. OpenAI has also recently come under fire after a teenager died by suicide following a long conversation with ChatGPT.

“I really hope that we take the lead and set an industry standard that open-ended chat is probably not an avenue or product for anyone under 18,” Anand said. “I think the trade-off is the right choice for us. I have a six-year-old child and I want to make sure she grows up in a very safe environment with AI in a responsible way.”

Character.AI is making these decisions before regulators force it to do so. On Tuesday, Sen. Josh Hawley (R-MO) and Sen. Richard Blumenthal (D-CT) announced they would introduce legislation to ban the use of AI chatbot companions by minors, following complaints from parents that AI chatbot companion products have led their children to engage in sexual conversations, self-harm, and suicide. Earlier this month, California became the first state to regulate AI companion chatbots by making companies liable if their chatbots fail to meet the law’s safety standards.

In addition to these platform changes, Character.AI also announced that it will create and fund the AI Safety Lab, an independent nonprofit organization dedicated to innovating safety alignment for future AI entertainment features.

“There’s a lot of work being done in the industry around coding and development and other use cases,” Anand said. “I don’t think enough work has been done yet when it comes to agentic AI to enhance entertainment, and safety is critical to that.”