OpenAI's latest model, o3-mini, has raised eyebrows after users found that it sometimes reasons in Chinese even when prompted in English. The unexpected behavior, highlighted by X (formerly Twitter) user Vikhyat Rana, has fueled speculation that OpenAI may have borrowed from DeepSeek, an open-source AI project specializing in Chinese-language processing.

The accusations come less than a week after OpenAI claimed that DeepSeek had used its AI models to train an open-source system through a technique known as "distillation," which lets a smaller model learn from a larger model's outputs while using far fewer computing resources.

OpenAI accused of copying DeepSeek's code

Responding to Rana's post, an X user named DaiWW argued that OpenAI's latest model, o3-mini, reasons in Chinese, suggesting that OpenAI may have used DeepSeek's code or data without properly refining it before release.

o3-mini responds to English prompts in Chinese

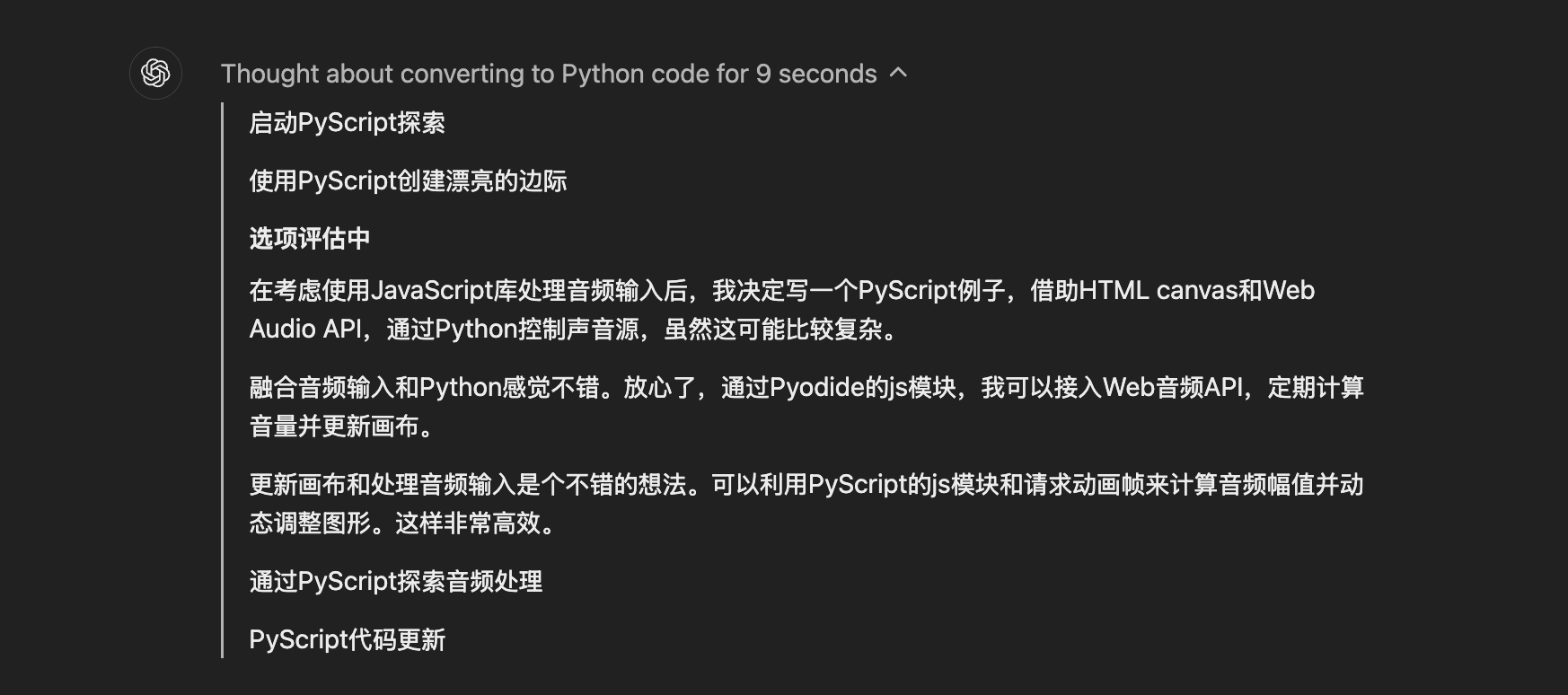

The issue came to light when Rana shared screenshots of an English-language query that produced a response in Chinese. The response included a detailed technical explanation of how PyScript handles JavaScript and the Audio API, leading some to question whether OpenAI had incorporated data from existing Chinese datasets without fully vetting it.

In his post on X, Rana asked, "Why is o3-mini reasoning in Chinese?"

[Screenshot: o3-mini's reasoning trace, "Thought about converting to Python code for 9 seconds," with steps on searching for PyScript, using JavaScript, and Python/Pyodide, partly written in Chinese.]

Why is o3-mini reasoning in Chinese? pic.twitter.com/2z8mncprby

- Vikhyat Rana (@the_vikhyat) February 1, 2025

Below is DaiWW's response to Rana's post.


The latest OpenAI o3-mini reasons in Chinese, and OpenAI seems to have released o3-mini without carefully editing DeepSeek's open-source code/data. pic.twitter.com/tkootanhoq

-DaiWW (@beijingdai) February 3, 2025

OpenAI's silence and its implications

OpenAI has not yet responded to the claims. The situation highlights broader public concerns about transparency in AI model training, the ethical use of open-source data, and the risk of unexpected behavior in AI output. If OpenAI did source data from DeepSeek, it could change how proprietary AI companies interact with the open-source community and raise questions about how training datasets are audited.

Accusations of open-source misuse

Some critics believe OpenAI may have incorporated DeepSeek's published datasets without credit. DeepSeek is an open-source AI initiative focused on Chinese-language models, and o3-mini's sudden proficiency in this area has prompted questions about where that ability came from. If OpenAI did use DeepSeek's work, it could raise ethical and intellectual property concerns in the AI research community.

Broader questions at play

The controversy adds to ongoing debates about AI ethics, data sourcing, and corporate responsibility in AI development. Companies like OpenAI continue to push the boundaries of generative AI, but questions about accountability and transparency remain.

As the AI community awaits OpenAI's response, the key question is whether o3-mini's Chinese reasoning is an unintended byproduct of its training or a sign of deeper issues around AI data ethics and intellectual property. The coming days may bring more scrutiny, and OpenAI may need to clarify its data sources to maintain trust. For now, the situation remains unresolved.

Despite the ongoing claims, OpenAI CEO Sam Altman has acknowledged DeepSeek as a strong competitor and pointed to the need for more computing power to maintain OpenAI's edge. In a post on X, he described DeepSeek's R1 as "an impressive model, particularly around what they're able to deliver for the price."

The launch of DeepSeek's highly cost-efficient V3 model has been called AI's "Sputnik moment," rattling industry players around the world. DeepSeek's groundbreaking model was reportedly developed for less than $6 million, an unsettling figure for US tech companies that have invested billions in similar technology.

According to multiple reports, DeepSeek V3 outperformed leading models such as Llama 3.1 and GPT-4o on major benchmarks, including Codeforces competitive programming problems. The project was reportedly completed on a budget of only $5.5 million, in stark contrast to the hundreds of millions spent by rivals. This breakthrough challenges the notion that cutting-edge AI development requires enormous financial investment.