Google has launched Gemma 3, its latest open model, with the aim of making AI development more efficient and accessible. The model comes in four sizes (1 billion, 4 billion, 12 billion, and 27 billion parameters) and is designed to run on a single GPU or TPU, which makes it practical on a wide range of devices, from laptops to powerful workstations.

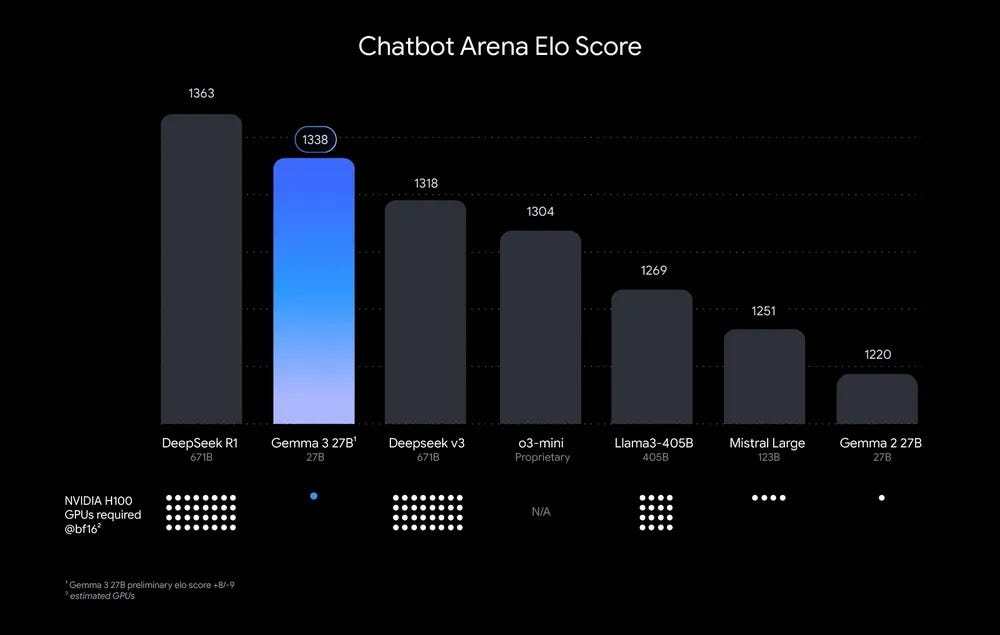

Alphabet CEO Sundar Pichai announced the launch on X:

“Gemma 3 is here! Our new open models are extremely efficient. The largest 27B model runs on one H100 GPU. To get similar performance from other models, you need at least 10x more compute.”

– Sundar Pichai (@sundarpichai), March 12, 2025

Gemma 3: An open model with single-GPU efficiency and multimodal power

In a blog post, Google described Gemma 3 as a collection of advanced, lightweight open models built on the same research and technology behind its Gemini 2.0 models. The search giant calls Gemma 3 its most portable and responsibly developed open model to date, one that sets a new standard for accessibility and performance.

This chart ranks AI models by Chatbot Arena Elo score; a higher score indicates a stronger user preference. The dots show each model's estimated NVIDIA H100 GPU requirement. Gemma 3 27B ranks highly while needing only a single GPU, whereas other models require up to 32.
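The single-GPU claim can be sanity-checked with back-of-the-envelope arithmetic: the memory needed to hold a model's weights is roughly its parameter count times the bytes stored per parameter. The sketch below assumes bfloat16 weights (2 bytes per parameter) and an 80 GB H100, and it counts weights only, ignoring the KV cache, activations, and runtime overhead, so real requirements are higher. These are illustrative assumptions, not the methodology behind Google's chart.

```python
import math

# Illustrative assumptions (not from the article):
#  - weights stored in bfloat16, i.e. 2 bytes per parameter
#  - an H100 variant with 80 GB of memory
#  - weights only: KV cache, activations and framework overhead are ignored
BYTES_PER_PARAM_BF16 = 2
H100_MEMORY_GB = 80


def weight_memory_gb(num_params: float, bytes_per_param: float = BYTES_PER_PARAM_BF16) -> float:
    """Memory needed to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Lower bound on GPU count: weight memory divided by per-GPU memory, rounded up."""
    return math.ceil(weight_memory_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    # Nominal Gemma 3 sizes from the article.
    for billions in (1, 4, 12, 27):
        params = billions * 1e9
        print(f"{billions}B: ~{weight_memory_gb(params):.0f} GB of weights -> "
              f"at least {min_gpus_for_weights(params)} H100(s)")
```

Under these assumptions, the 27B model's roughly 54 GB of weights fit on one 80 GB H100 with some headroom left for the KV cache, while a hypothetical 405B-parameter model in bfloat16 would need at least 11 such GPUs for its weights alone.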

Why Gemma 3 stands out

Flexible Model Sizes: Whether you’re working on a mobile app or enterprise software, one of the four Gemma 3 sizes (1B, 4B, 12B, or 27B) is likely to suit your needs.

Multilingual Support: Gemma 3 offers pretrained support for over 140 languages and out-of-the-box support for more than 35, broadening the possibilities for global projects.

Images and Video: The models are not limited to text. All sizes except the text-only 1B model can also process images and short videos, which is useful for building more interactive applications.

Extended Context Window: A context window of up to 128,000 tokens (32,000 for the 1B model) lets Gemma 3 take on complex tasks such as summarizing large documents and analyzing extensive datasets.

Strong Performance: The 27B model scored 1338 on LMArena (Chatbot Arena), surpassing several larger competitors. Google calls it “the world’s best single-accelerator model.”

Privacy and Local Processing: By enabling on-device AI processing, Gemma 3 helps protect user data and reduces dependence on cloud services.

Task Automation: Support for function calling and structured output simplifies building dynamic, responsive applications.
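Function calling generally works by having the model emit a structured request that the application then parses and executes. A minimal sketch of the application side, assuming the prompt instructs Gemma 3 to answer with a JSON object of the form `{"name": ..., "arguments": {...}}`. That JSON shape and the `get_weather` tool are hypothetical illustrations, not Gemma 3's documented format, and generating the model's reply is out of scope here.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool the application exposes to the model.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # stub: a real app would query a weather service


# Registry mapping tool names (as the model would write them) to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_function_call(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and run the matching tool.

    Assumes (hypothetically) that the model replies with
    {"name": <tool name>, "arguments": {<keyword arguments>}}.
    """
    call = json.loads(model_output)  # raises json.JSONDecodeError on malformed output
    if not isinstance(call, dict) or "name" not in call:
        raise ValueError("model output is not a function-call object")
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['name']!r}")
    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        raise ValueError("'arguments' must be a JSON object")
    return tool(**arguments)


if __name__ == "__main__":
    reply = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
    print(dispatch_function_call(reply))
```

Validating the model's output before executing anything keeps a malformed or unexpected reply from triggering an arbitrary call; only names registered in `TOOLS` can run.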

Keep AI safe

Google has also introduced ShieldGemma 2, an image safety classifier that flags content in three categories: dangerous content, sexually explicit material, and violence. It is a step toward helping developers keep their platforms safer for users.
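A classifier like this typically yields a score per category, and the application decides what to do with those scores. A minimal sketch of such a gating step, assuming the classifier produces a probability in [0, 1] for each of the three categories. The category key names and the 0.5 threshold are illustrative assumptions, not ShieldGemma 2's documented output format or recommended settings.

```python
from dataclasses import dataclass
from typing import Dict, List

# The three policy categories named in the article (key names are assumptions).
CATEGORIES = ("dangerous_content", "sexually_explicit", "violence")


@dataclass
class ModerationResult:
    allowed: bool
    flagged: List[str]


def moderate(scores: Dict[str, float], threshold: float = 0.5) -> ModerationResult:
    """Block an image if any category's score meets or exceeds the threshold.

    `scores` is assumed to map each category to a probability produced by an
    image safety classifier such as ShieldGemma 2.
    """
    missing = [c for c in CATEGORIES if c not in scores]
    if missing:
        raise KeyError(f"missing scores for: {missing}")
    flagged = [c for c in CATEGORIES if scores[c] >= threshold]
    return ModerationResult(allowed=not flagged, flagged=flagged)


if __name__ == "__main__":
    result = moderate({"dangerous_content": 0.10, "sexually_explicit": 0.02, "violence": 0.73})
    print(result)
```

Keeping the threshold as a parameter lets a platform tune it per category or per audience rather than hard-coding a single policy.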

Easy access for developers

Developers can start using Gemma 3 on platforms such as Google AI Studio, Hugging Face, and Kaggle. The models work with popular tools and frameworks such as Hugging Face Transformers, Ollama, JAX, Keras, PyTorch, and Google AI Edge, offering ample flexibility for a variety of projects.

Why is it important?

Gemma 3 isn’t just about making AI faster or smarter. It’s about lowering the barriers so that more people can build useful AI applications. Whether you’re developing for mobile or desktop, Gemma 3 opens up new possibilities.

For those interested in the technical details, Google has published a full technical report covering Gemma 3’s architecture and benchmarks.