Professor Jacek Kitowski from AGH University of Krakow shares an update on the ALICE experiment upgrade, reflects on the past successes of the experiment, and details hopes for its future.

Collaboration between CERN and the Krakow scientific community has been a decades-long tradition in high-energy physics, electronics, instrument design, and supporting activities. AGH University of Krakow, one of the largest technical universities in Poland, has been actively involved in this co-operation, contributing to the development of experiments such as ATLAS, CMS, and LHCb. In 2017, the collaboration expanded to include the ALICE experiment, with AGH initially joining as an associate member and becoming a full member in 2020. Since then, the university has played a significant role in advancing electronics and software engineering, along with applying artificial intelligence (AI) and machine learning to data acquisition, analysis, and simulations.

Development of new readout electronics for the FIT detector

From the very beginning, one of the key ALICE projects involving AGH scientists and specialists was the FIT (Fast Interaction Trigger) project. AGH electronics engineers supported the development of FPGA firmware for the FIT subdetector readout electronics. During the COVID-19 pandemic, collaborating and quickly absorbing the project details were particularly challenging for the AGH group. The situation was further complicated by geopolitical issues, which strongly affected the FIT collaboration. Despite its limited prior experience with FIT, the AGH electronics team took on the further development and improvement of the front-end electronics (FEE). Other Polish scientists, particularly from the Warsaw University of Technology (WUT), later joined to contribute the additional expertise the FIT collaboration needed.

Meeting these new challenges, given the high entry threshold, required building up laboratory and equipment resources locally. Thanks to support from the Ministry of Science and Higher Education, on-site electronics development at AGH and WUT made it possible to involve enthusiastic students and to advance multi-variant electronic systems, work that began with a detailed survey of the existing electronics. Development is now directed towards new FIT electronics for ALICE Run4. The new FEE must improve the measurement precision and flexibility of the existing solution so that it can also be used in the future Forward Detector (FD) of the ALICE 3 experiment, since the FD is the direct successor of the FIT detector.

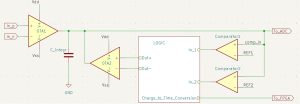

In 2024, a dedicated FIT laboratory was established at AGH. It includes multiple integrated components that together ensure reliable, high-speed triggering and data acquisition. FIT's custom electronics are split into two main module types: processing modules (PMs) and a trigger control module (TCM). Each PM handles 12 analogue input lines from photomultiplier tubes (PMTs), performs constant fraction discrimination (CFD) of the event pulses, digitises their time and amplitude (TDC/ADC), and carries out trigger pre-processing in FPGAs. The TCM synchronises the system with the LHC master clock and generates the trigger signals. Both the PMs and the TCM are connected via optical GBT links to the Common Readout Unit, hosted on the First-Level Processor (FLP) server. In addition, the original electronics use the IPbus protocol for control and monitoring through the detector control system (DCS). All of these components had to be installed and commissioned in the laboratory, together with the necessary computer servers (FLP, DCS) and a laser subsystem for parametrisation and monitoring.
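Constant fraction discrimination makes the timing point independent of pulse amplitude: the pulse is compared with a delayed copy of itself, and the zero crossing of the difference marks the same relative point on the leading edge for large and small pulses alike. The FIT modules do this in hardware; the Python sketch below only reproduces the principle on a sampled waveform. The Gaussian pulse, the fraction of 0.3 and the 1 ns delay are hypothetical values chosen for illustration.

```python
import numpy as np


def cfd_time(t, v, fraction=0.3, delay=1.0):
    """Constant-fraction timing of a sampled positive pulse (illustrative).

    Forms the bipolar signal s(t) = v(t - delay) - fraction * v(t) and returns
    the linearly interpolated time of its first negative-to-non-negative zero
    crossing, or None if there is none. Assumes uniform sampling of t.
    """
    t = np.asarray(t, dtype=float)
    v = np.asarray(v, dtype=float)
    dt = t[1] - t[0]
    shift = int(round(delay / dt))
    if shift < 1:
        raise ValueError("delay must span at least one sample")
    # Delayed copy of the pulse, padded with zeros at the start.
    delayed = np.concatenate([np.zeros(shift), v[:-shift]])
    s = delayed - fraction * v
    # Indices where s goes from negative to non-negative.
    crossings = np.nonzero((s[:-1] < 0) & (s[1:] >= 0))[0]
    if crossings.size == 0:
        return None
    i = crossings[0]
    # Linear interpolation between samples i and i + 1.
    return t[i] + dt * s[i] / (s[i] - s[i + 1])


# Usage: two pulses with the same shape but a fivefold amplitude difference.
t = np.linspace(0.0, 20.0, 2001)            # hypothetical time axis, ns
shape = np.exp(-0.5 * (t - 10.0) ** 2)      # hypothetical pulse, sigma = 1 ns
print(cfd_time(t, 1.0 * shape), cfd_time(t, 5.0 * shape))
```

Both calls return the same time, which is the point of CFD: a simple fixed-threshold discriminator would fire earlier on the larger pulse (amplitude walk).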

Immediate analogue front-end upgrade

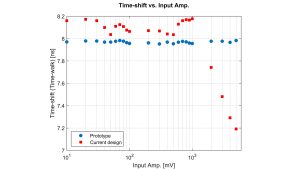

Despite logistical challenges and time constraints, AGH specialists were able to introduce an immediate improvement to the CFD of the existing FEE. FIT consists of three slightly different subdetectors: FT0 (fast Cherenkov arrays with MCP-PMT photomultipliers), FV0 (a large plastic scintillator ring), and FDD (a forward diffractive detector based on scintillator tiles). Electronics shared by all three were therefore not optimal for each of them. The AGH team redesigned the analogue front end and the CFD for FV0 to accept input pulses with an extended range of up to 5 V. Considerable additional engineering effort was needed to keep the time jitter of the existing electronics at an acceptable level of 20 ps (for a 100 mV pulse amplitude). The plan is to deploy these improvements as early as the 2026 data-taking period.
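The jitter figure is quoted together with a pulse amplitude because, to first order, timing jitter equals the voltage noise divided by the slope of the signal at the discrimination point, and that slope scales with amplitude. A minimal sketch of this standard estimate, assuming a linear leading edge; the 1 mV noise and 1 ns rise time are hypothetical values, not FIT measurements:

```python
def timing_jitter(noise_rms_v, amplitude_v, rise_time_s):
    """First-order timing jitter: sigma_t = sigma_V / (dV/dt).

    Assumes a linear leading edge, so the slope is amplitude / rise_time.
    """
    slope = amplitude_v / rise_time_s      # V/s at the crossing point
    return noise_rms_v / slope             # seconds


# Hypothetical values: 1 mV RMS noise, 1 ns linear rise.
for amp in (0.1, 0.5, 5.0):
    print(f"{amp:4.1f} V -> {timing_jitter(1e-3, amp, 1e-9) * 1e12:.1f} ps")
```

Larger pulses give proportionally smaller jitter, so a jitter figure is only meaningful alongside the amplitude at which it was measured.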

Upgrades for ALICE Run4

More significant updates are being prepared for Run4 (2030–2034). A new charge integrator architecture has been proposed, based not on traditional operational amplifiers but on operational transconductance amplifiers (OTAs). The new design features an ultra-fast, dual-slope OTA-based integrator with two readout paths: one leading to an analogue-to-digital converter (ADC) and the other to a charge-to-time converter implemented in an FPGA.
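The dual-slope principle behind the charge-to-time path can be illustrated numerically. The input pulse charges an integrating capacitor (run-up), which is then discharged by a constant reference current (run-down), while a clock counts ticks until the voltage returns to zero. The count is then proportional to the collected charge, Q / (I_ref · T_clk), and, ideally, independent of the capacitor value. The sketch below assumes ideal components; the pulse shape, reference current and 1 ns clock are hypothetical, and it models the principle, not the AGH OTA circuit or its FPGA firmware.

```python
import numpy as np


def dual_slope_counts(current_a, dt_s, i_ref_a, clock_period_s, capacitance_f=1e-12):
    """Ideal dual-slope charge-to-time conversion (illustrative sketch).

    Run-up: integrate the sampled input current onto the capacitor.
    Run-down: discharge with a constant reference current, counting one
    clock tick per step until the capacitor voltage is no longer positive.
    """
    v = 0.0
    for i_in in np.asarray(current_a, dtype=float):   # run-up
        v += i_in * dt_s / capacitance_f
    step_v = i_ref_a * clock_period_s / capacitance_f  # voltage drop per tick
    counts = 0
    while v > 0.0:                                      # run-down
        v -= step_v
        counts += 1
    return counts


dt = 10e-12                                  # 10 ps sampling step
t = np.arange(0.0, 5e-9, dt)
pulse = 2e-3 * np.exp(-t / 1e-9)             # hypothetical 2 mA pulse, 1 ns decay
print(dual_slope_counts(pulse, dt, i_ref_a=10e-6, clock_period_s=1e-9))
print(dual_slope_counts(pulse, dt, i_ref_a=10e-6, clock_period_s=1e-9, capacitance_f=5e-12))
```

Changing the capacitance changes the voltages but not the tick count (up to one tick of rounding), which is one attraction of dual-slope conversion.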

Although this solution is uncommon in discrete electronics, it may further alleviate the current issues with the high bandwidth of pulses from the Cherenkov arrays and with the parasitic charge introduced by transistors in traditional designs. Several prototypes of OTA-based integrators are currently being tested, and the preliminary results are very promising.

Another development for Run4 is new FPGA firmware for the TCM, which will enable the FIT team at CERN to make the electronics compatible with ALICE DCS requirements by using the GBT links for detector control and monitoring.

Long-term plans for ALICE 3

In the long term, the AGH electronics team aims to develop readout electronics for the planned ALICE 3 Forward Detector. One current limitation is the long distance between the detector PMTs and the ADC and TDC modules. At present, the detectors are connected to the FEE by long coaxial cables, which, despite their high quality, introduce some pulse-shape distortion and limit further improvements in time measurement. AGH therefore aims to make its future electronics radiation tolerant, so that the modules can be installed closer to the detectors and enable more precise measurements. This is to be achieved using ASICs or radiation-hardened FPGAs.

On the data science side, AGH researchers are developing machine-learning-based surrogates for ALICE detector simulations. This work addresses the demand for fast, high-fidelity simulations, which is growing rapidly as ALICE's data requirements outpace the capabilities of traditional, resource-intensive simulation methods.

The group’s focus is on Zero Degree Calorimeter (ZDC) and Forward Hadronic Calorimeter (FoCal-H) simulations. Both detectors have a similar ‘spaghetti-type’ structure, and their outputs can be expressed on two-dimensional planes and interpreted as images. However, simulating these responses with conventional techniques is both time-consuming and computationally demanding. To overcome these limitations, the team is developing ML-based surrogate models that can emulate detector responses with remarkable speed and accuracy. They employ a suite of state-of-the-art generative models, including generative adversarial networks (GANs), variational autoencoders (VAEs), diffusion models, and normalising flows. These models are conditioned on the parameters of particles produced immediately after beam collisions, ensuring that the simulated outputs not only reproduce the overall distributions but also retain critical correlations with the underlying particle features.
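Interpreting a calorimeter response as an image amounts to binning the energy deposited in the fibres on a two-dimensional grid. The NumPy sketch below uses synthetic deposits and a hypothetical 32 × 32 grid over a hypothetical 10 cm × 10 cm face; it is not the group's preprocessing code. In a conditional model, the particle parameters would be stored alongside each such image as the conditioning input.

```python
import numpy as np


def deposits_to_image(x, y, energy, n_bins=32, half_width=5.0):
    """Bin energy deposits (x, y in cm) into an n_bins x n_bins image.

    Each pixel holds the summed energy of the deposits falling in it;
    deposits outside the square [-half_width, half_width]^2 are dropped.
    """
    edges = np.linspace(-half_width, half_width, n_bins + 1)
    image, _, _ = np.histogram2d(x, y, bins=[edges, edges], weights=energy)
    return image


rng = np.random.default_rng(0)
x, y = rng.normal(0.0, 1.0, size=(2, 1000))   # synthetic shower centred at 0
energy = rng.exponential(0.05, size=1000)      # synthetic deposit energies, GeV
image = deposits_to_image(x, y, energy)
print(image.shape, image.sum(), energy.sum())
```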

The key insight from the group’s research is the trade-off between simulation speed and output quality. The simpler models, such as GANs and VAEs, deliver rapid results but may compromise on the fidelity of the generated samples, whereas more complex diffusion models or normalising flows capture complex dependencies more accurately, albeit with increased computational cost. The choice of model is thus tailored to the specific analysis needs, balancing speed against the required level of detail in the simulated data.
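Weighing speed against fidelity requires a fidelity measure. One common choice in the fast-simulation literature, assumed here rather than taken from the group's work, is the Wasserstein-1 distance between the distributions of a summary quantity (for example, the total energy per event) in generated and reference samples. For two samples of equal size it reduces to the mean absolute difference of the sorted values; the gamma-distributed samples below are synthetic stand-ins.

```python
import numpy as np


def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-size 1D empirical samples."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    if a.shape != b.shape:
        raise ValueError("samples must have the same size")
    # Optimal transport between equal-weight atoms pairs sorted values.
    return float(np.mean(np.abs(a - b)))


rng = np.random.default_rng(1)
reference = rng.gamma(4.0, 25.0, size=10_000)   # stand-in for full simulation
fast = rng.gamma(4.0, 27.0, size=10_000)        # stand-in for a fast surrogate
print(wasserstein_1d(reference, fast))
```

Reported next to the generation time per event, such a metric makes the speed/fidelity trade-off between model families explicit.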

The group's efforts extend well beyond simply benchmarking established architectures. Their workflow encompasses advanced preprocessing to make the most of the available data, robust training strategies including transfer learning and fine-tuning, and post-processing techniques that further refine the simulation outputs. The research also evaluates ensemble learning, in which multiple models are combined to improve prediction accuracy and robustness. Each stage presents its own challenges, requiring innovative solutions and a deep understanding of both physics and machine learning.
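In its simplest form, ensemble learning combines the outputs of several trained models for the same inputs. The generic sketch below, not the group's method, weights each model by the inverse of its validation error, which is a hypothetical choice; the arrays stand in for model predictions.

```python
import numpy as np


def weighted_ensemble(predictions, val_errors):
    """Combine model predictions with weights proportional to 1 / validation error.

    predictions: array of shape (n_models, ...) holding each model's output
    for the same inputs. val_errors: one positive validation error per model.
    """
    predictions = np.asarray(predictions, dtype=float)
    weights = 1.0 / np.asarray(val_errors, dtype=float)
    weights /= weights.sum()
    # Contract the model axis: sum_k w_k * prediction_k.
    return np.tensordot(weights, predictions, axes=1)


preds = np.array([[1.0, 2.0], [3.0, 4.0]])   # two models, two outputs each
print(weighted_ensemble(preds, [1.0, 3.0]))  # weights 0.75 and 0.25 -> [1.5 2.5]
```

For stochastic generators, averaging individual samples would blur them; there, combining models usually means mixing their samples instead.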

In parallel with model-driven generative approaches, the team explores symbolic and pattern-based alternatives to augment or replace simulation pipelines. One such direction involves the Deep Associative Closed-Pattern Structure (DeepACPS), a recently proposed framework designed for unsupervised discovery of high-cardinality closed patterns and their associations. Unlike conventional methods that focus solely on simulation fidelity or speed, DeepACPS builds sparse, neural-like structures from detected patterns and automatically uncovers complex interdependencies – analogous to synaptic connections in the human brain. This approach offers an interpretable and hyperparameter-free architecture that can learn directly from detector outputs and generalise across configurations, providing a compelling complement to traditional ML surrogates in cases where explainability or symbolic reasoning is essential.
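DeepACPS itself is not reproduced here, but the notion of a closed pattern it builds on can be made concrete. In a set of records of active binary features, a pattern is closed if no strictly larger pattern occurs in exactly the same records. A textbook property is that the closed patterns are exactly the intersections of non-empty groups of records. The brute-force sketch below uses that property on a tiny hypothetical dataset; it is exponential in the worst case, whereas DeepACPS is described as building its structure with linear complexity.

```python
def closed_patterns(records):
    """Enumerate all non-empty closed patterns of a list of feature sets.

    Closed patterns are exactly the intersections of non-empty groups of
    records, so each new record is intersected with every closed set found
    so far. Returns {pattern: support}. Exponential in the worst case.
    """
    closed = set()
    for record in map(frozenset, records):
        closed |= {record} | {c & record for c in closed}
    return {
        p: sum(1 for r in records if p <= set(r))
        for p in closed
        if p
    }


records = [{"a", "b", "c"}, {"a", "b"}, {"a", "c"}, {"b", "d"}]
result = closed_patterns(records)
for pattern, support in sorted(result.items(), key=lambda kv: (-kv[1], sorted(kv[0]))):
    print(sorted(pattern), support)
```

Here {"c"} is not closed: every record containing c also contains a, so {"a", "c"} is reported instead.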

Integrating DeepACPS into the simulation workflow may also open new avenues for explainable surrogate modelling, especially in domains where preserving inter-feature relationships is critical. Its deterministic and scalable construction algorithm operates with linear complexity and supports continual learning, making it particularly attractive for online detector simulations or adaptive calibration routines. By modelling data as layered combinations of feature patterns and tracking their associative structure over time, DeepACPS enables not only rapid inference but also post-hoc reasoning over simulation decisions – a capability rarely available in generative deep learning models. This makes it a strong candidate for future hybrid pipelines where symbolic models enhance or validate outputs of neural generative surrogates.

The ultimate ambition is to deliver an ML-based alternative to the current simulation engines at the ALICE experiment. While this goal has not yet been reached, each new model brings the team closer to fast, scalable, and high-quality detector simulations. The group is confident that AI-driven methods will soon become an integral part of the ALICE simulation toolkit, enabling new discoveries in high-energy physics.

Enhancing job scheduling in the ALICE computing grid

The ALICE experiment at CERN relies on a distributed Grid infrastructure to meet its massive computational needs. The system processes around 500,000 jobs daily across about 60 computing clusters worldwide, with tens of thousands of jobs running in parallel. On average, a job lasts four hours, though durations vary widely with the task. Monte Carlo simulations, which account for half of the resources, are heavily CPU-intensive and can run for up to 24 hours, depending on the CPU model and machine load. Other workflows, such as data reconstruction and organised analysis, demand more input/output (IO) or memory, and their run times depend on the data volume, storage speed, and the network connections between nodes.
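The quoted figures can be cross-checked with Little's law: the average number of jobs in the system equals the arrival rate multiplied by the average time a job spends in it. Using the rounded values from the text, 500,000 jobs per day and a four-hour average duration, and assuming a steady state:

```python
def concurrent_jobs(jobs_per_day, mean_duration_h):
    """Little's law, L = lambda * W, with the arrival rate in jobs per hour."""
    arrival_rate_per_h = jobs_per_day / 24.0
    return arrival_rate_per_h * mean_duration_h


print(round(concurrent_jobs(500_000, 4.0)))   # -> 83333
```

Roughly 83,000 jobs in flight on average is consistent with the "tens of thousands of jobs running in parallel" stated above.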

The current scheduling approach has limitations. Jobs request overly long execution times to guarantee completion even on the slowest hardware. This static allocation, set by operators, leads to inefficiencies: jobs that could finish within the time remaining on a node are delayed until a longer slot opens, while short time slots remain unallocated. The tenfold performance difference between the Grid's slowest and fastest CPUs amplifies this issue. As a result, jobs rarely fail due to timeouts, but resources are wasted through inefficient scheduling.
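The effect of static requests can be shown with a toy matching rule in which a job may start on a node only if its requested time fits in the node's remaining slot. The rule, the safety margin and all durations below are hypothetical illustrations, not the ALICE scheduler's logic.

```python
def fits(requested_h, remaining_slot_h):
    """A job may start on a node only if its requested time fits the free slot."""
    return requested_h <= remaining_slot_h


STATIC_REQUEST_H = 24.0   # hypothetical worst-case request set by operators
remaining_slot_h = 6.0    # hypothetical free time left on a node
predicted_h = 2.5         # hypothetical predicted run time on this host
SAFETY_MARGIN = 1.5       # hypothetical multiplicative margin

print(fits(STATIC_REQUEST_H, remaining_slot_h))             # False: slot left idle
print(fits(predicted_h * SAFETY_MARGIN, remaining_slot_h))  # True: job can start
```

The same job is rejected under the static request but accepted once its request reflects the expected run time on that particular host.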

To address this, a new method has been developed. It uses machine learning to predict a job's wall-clock execution time from the job's input parameters and the parameters of the target host. The model is trained on historical data from similar jobs and automatically weighs whether a job is CPU-bound, IO-bound (involving local disks, external storage, or networks), or a mix of both. Accurate predictions allow the scheduler to tighten job requirements and place jobs in shorter time slots. Ultimately, this makes resource use more efficient, allowing more jobs to run on the same infrastructure.
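Even a linear model shows how a regressor can learn from historical records how much of a job's run time is CPU-bound and how much is IO-bound. In this sketch, the features are hypothetical: CPU work divided by a host speed score, and input volume divided by the host's storage bandwidth. The fitted coefficients indicate how strongly each term drives the wall time. The data are synthetic, and the work described here uses a neural network rather than least squares.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 500

# Hypothetical historical records (synthetic, for illustration only).
cpu_work = rng.uniform(1.0, 100.0, n)         # abstract CPU work units per job
host_speed = rng.uniform(1.0, 10.0, n)        # relative CPU speed (tenfold spread)
input_gb = rng.uniform(0.1, 50.0, n)          # input data volume, GB
bandwidth_gbph = rng.uniform(5.0, 100.0, n)   # storage throughput, GB per hour

cpu_term = cpu_work / host_speed              # hours if purely CPU-bound
io_term = input_gb / bandwidth_gbph           # hours if purely IO-bound
# Synthetic ground truth: mostly CPU-bound, some IO, fixed overhead, noise.
wall_h = 0.1 + 0.9 * cpu_term + 0.3 * io_term + rng.normal(0.0, 0.05, n)

# Least-squares fit of wall_h ~ b0 + b1 * cpu_term + b2 * io_term.
X = np.column_stack([np.ones(n), cpu_term, io_term])
coef, *_ = np.linalg.lstsq(X, wall_h, rcond=None)
print("intercept, cpu weight, io weight:", np.round(coef, 2))


def predict_wall_time(cpu_work, host_speed, input_gb, bandwidth_gbph):
    """Predicted wall time (hours) for a new job on a given host."""
    return coef[0] + coef[1] * cpu_work / host_speed + coef[2] * input_gb / bandwidth_gbph


# The same job predicted on a slow and on a fast host.
print(predict_wall_time(50.0, 1.0, 10.0, 50.0), predict_wall_time(50.0, 10.0, 10.0, 50.0))
```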

The approach is built on a neural network that estimates job run times from details about the job and the machine. To make predictions fast enough for the Grid's busy environment, the model uses techniques such as quantisation and sparsity to streamline its calculations. These optimisations speed up inference significantly while keeping accuracy high.
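Quantisation and sparsity can both be illustrated on a single weight matrix. The sketch below applies two common, generic variants, symmetric per-tensor int8 quantisation and magnitude pruning. The layer size, the 50% pruning fraction and the choice of these particular variants are assumptions, not details of the ALICE model.

```python
import numpy as np


def quantise_int8(w):
    """Symmetric per-tensor int8 quantisation: w ~= scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def prune_by_magnitude(w, fraction):
    """Zero out (approximately) the given fraction of smallest-magnitude weights."""
    threshold = np.quantile(np.abs(w), fraction)
    return np.where(np.abs(w) < threshold, 0.0, w)


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(64, 32))   # hypothetical dense layer
x = rng.normal(size=32)                   # hypothetical input features

w_sparse = prune_by_magnitude(w, 0.5)
q, scale = quantise_int8(w_sparse)
# Matrix-vector product with the int8 weights, rescaled once at the end.
y_approx = scale * (q.astype(np.int32) @ x)

print("zero fraction:", np.mean(w_sparse == 0.0))
print("bytes int8 vs float32:", q.nbytes, w_sparse.astype(np.float32).nbytes)
print("max output error:", np.max(np.abs(y_approx - w_sparse @ x)))
```

The int8 weights take a quarter of the memory of float32, and each weight is off by at most half a quantisation step. Whether pruning and quantisation translate into an actual speed-up depends on hardware and kernel support for int8 and sparse arithmetic.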

In the end, this creates a flexible framework for using AI in large computing systems. It helps balance speed and reliability, offering a useful approach for other scientific projects that need efficient resource management to push research forward.

Acknowledgement

The collaboration between AGH and the ALICE experiment is supported by grants 2022/WK/01 and 2023/WK/07 from the Ministry of Science and Higher Education in Poland.

Please note, this article will also appear in the 23rd edition of our quarterly publication.
