Imagine you're messaging a friend on the Facebook Messenger app or WhatsApp, and you receive an unsolicited message from an AI chatbot that is obsessed with movies.

“I hope you’re having a harmonious day!” it writes. “I wanted to check in and see if you’ve discovered any new favorite soundtracks or composers recently. Or perhaps you’d like some recommendations for your next movie night? Let me know, and I’ll be happy to help!”

This is a real example of what a sample AI persona named “The Maestro of Movie Magic” might send as a proactive message on Messenger, WhatsApp, or Instagram, according to guidelines from data labeling firm Alignerr that were viewed by Business Insider.

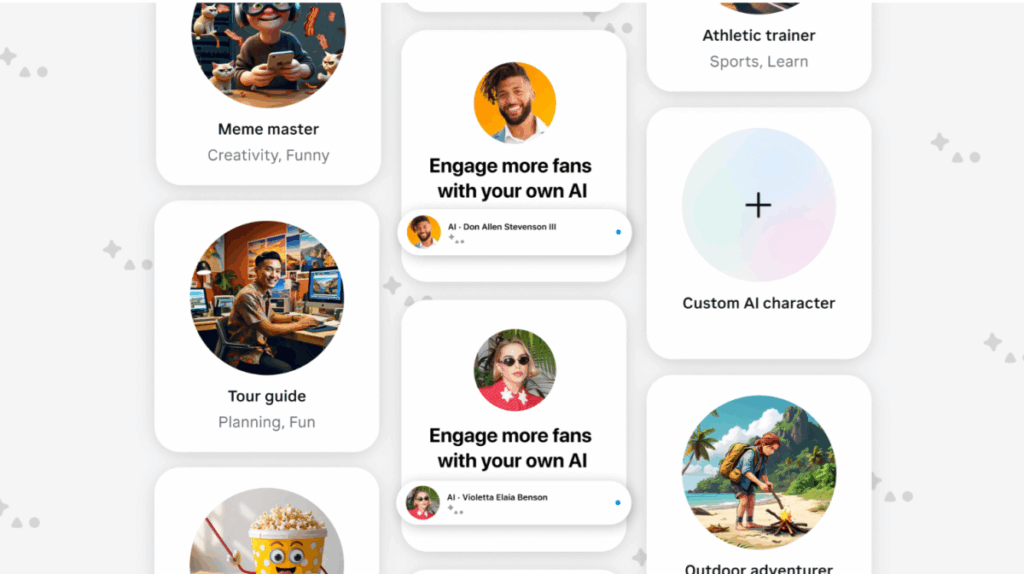

The outlet learned through leaked documents that Meta was working with Alignerr to train customizable chatbots to reach out to users and follow up on past conversations. That means the bots, which users can create on Meta’s AI Studio platform, also remember information about users.

Meta confirmed to TechCrunch that it is testing follow-up messaging with AIs.

The AI chatbots will only send follow-ups within 14 days after a user initiates a conversation, and only if the user has sent at least five messages to the bot within that time frame. Meta says the chatbots will not keep messaging if there is no response to the first follow-up. Users can keep their bots private, or share them through stories and direct links, and even display them on their Facebook or Instagram profiles.
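To make those conditions concrete, here is a minimal Python sketch of the eligibility rule as Meta describes it. It is an illustration only, not Meta's implementation: the `Conversation` class, its field names, and the `may_send_follow_up` function are hypothetical, and the sketch assumes the 14-day window is counted from the moment the user starts the conversation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Thresholds taken from Meta's description; everything else is assumed.
FOLLOW_UP_WINDOW = timedelta(days=14)
MIN_USER_MESSAGES = 5


@dataclass
class Conversation:
    """Hypothetical record of one user's chat with one bot."""
    started_at: datetime                       # when the user initiated the chat
    user_message_times: list = field(default_factory=list)
    unanswered_follow_up: bool = False         # True if a follow-up got no reply


def may_send_follow_up(conv: Conversation, now: datetime) -> bool:
    """Return True if the described rules would allow a follow-up at `now`."""
    window_end = conv.started_at + FOLLOW_UP_WINDOW

    # Rule 1: follow-ups only happen within 14 days of the conversation start.
    if not (conv.started_at <= now <= window_end):
        return False

    # Rule 2: the user must have sent at least five messages in that window.
    sent_in_window = [
        t for t in conv.user_message_times
        if conv.started_at <= t <= min(now, window_end)
    ]
    if len(sent_in_window) < MIN_USER_MESSAGES:
        return False

    # Rule 3: if an earlier follow-up went unanswered, stop messaging.
    return not conv.unanswered_follow_up
```

Under these assumptions, a user who sends five messages on the first day could receive a follow-up on day three, but not on day fifteen, and not at all once an earlier follow-up has been ignored.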

“This allows you to continue exploring topics of interest and engage in more meaningful conversations with the AIs across our apps,” a Meta spokesperson said.

The technology is similar to that offered by AI startups such as Character.AI and Replika. Both companies allow their chatbots to initiate conversations and ask questions in order to function as AI companions. Character.AI’s new CEO, Karandeep Anand, joined the team last month after serving as vice president of business products at Meta.

However, with engagement comes risk. Character.AI is in the midst of an active lawsuit after allegations that one of the company’s bots played a role in the death of a 14-year-old boy.

When asked how Meta plans to address safety to avoid a situation like Character.AI’s, a spokesperson directed TechCrunch to a series of disclaimers. One of them warns that AI responses may be “inaccurate or inappropriate and should not be used to make important decisions.” Another says the AIs are not licensed professionals or experts trained to help people.

“Chats with custom AIs cannot replace professional advice. Don’t rely on AI chats for medical, psychological, financial, legal or other professional advice.”

TechCrunch also asked Meta whether it imposes an age limit for engaging with its chatbots. A brief internet dive turns up no company-imposed age restrictions for using Meta AI, though laws in Tennessee and Puerto Rico limit some kinds of engagement.

On the surface, the mission aligns with Mark Zuckerberg’s quest to combat the “loneliness epidemic.” However, most of Meta’s business is built on advertising revenue, and the company has gained a reputation for using algorithms to keep people scrolling, commenting and liking, which correlates with more eyes on ads.

In court documents unsealed in April, Meta predicted that its generative AI products would bring in between $2 billion and $3 billion in revenue in 2025, and up to $1.4 trillion by 2035. The company said its AI assistant might eventually show ads and offer a subscription option.

Meta declined to comment on TechCrunch’s questions about how it would commercialize AI chatbots, whether it plans to include ads and sponsored replies, and whether its long-term strategy with AI companions involves integration with Meta’s social virtual reality game Horizon.

Do you have a sensitive tip or confidential documents? We report on the inner workings of the AI industry, from the companies shaping its future to the people affected by their decisions. Contact Rebecca Bellan and Maxwell Zeff at maxwell.zeff@techcrunch.com. For secure communication, you can reach us via Signal at @rebeccabellan.491 and @mzeff.88.
