Nvidia announced a wave of updates at Computex 2025 on Monday, headlined by the launch of NVLink Fusion, a new silicon offering that opens its AI ecosystem to non-Nvidia chips. The move marks a strategic shift as the company tightens its grip on AI infrastructure and works to stay at the heart of the future of artificial intelligence.

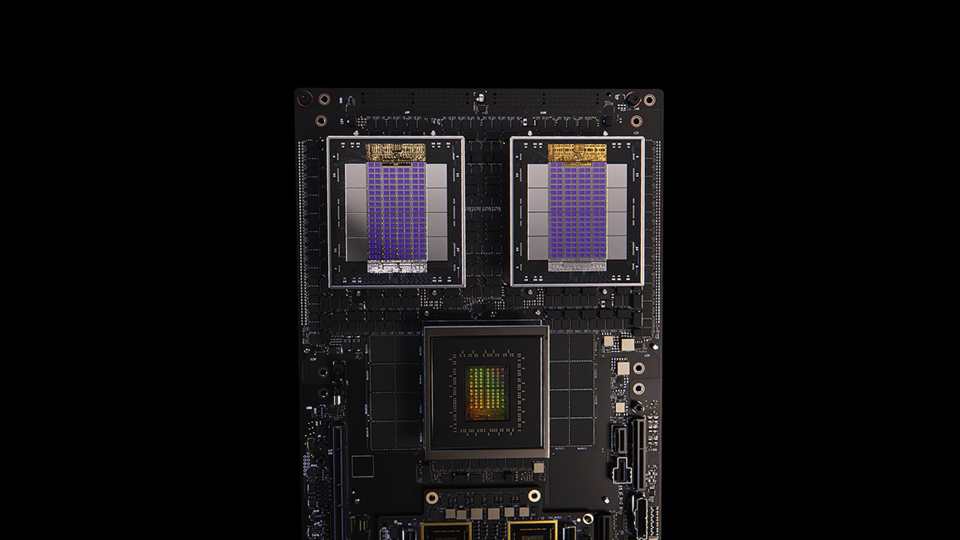

In a news release, Nvidia described NVLink Fusion as new silicon that lets industries build semi-custom AI infrastructure with NVIDIA NVLink™, which it calls the world’s most advanced and widely adopted computing fabric, and with the vast ecosystem of partners built around it.

With NVLink Fusion, Nvidia has opened up its once-closed NVLink technology, allowing partners and customers to integrate non-Nvidia CPUs and GPUs into systems built on Nvidia hardware. Until now, NVLink was reserved exclusively for Nvidia’s own chips.

“NVLink Fusion is to enable you to build not only semi-custom chips, but semi-custom AI infrastructure,” CEO Jensen Huang said on stage at Computex 2025 in Taiwan, Asia’s largest electronics conference.

Nvidia announces NVLink Fusion to bring third-party chips into the AI ecosystem

According to Nvidia, NVLink Fusion offers cloud providers an easy way to scale their AI infrastructure to millions of GPUs, regardless of the underlying ASIC, by leveraging Nvidia’s rack-scale systems and end-to-end networking stack. That stack delivers throughput of up to 800Gb/s and includes NVIDIA ConnectX-8 SuperNICs, Spectrum-X Ethernet, and Quantum-X800 InfiniBand switches.

The update gives hardware manufacturers more flexibility in how they build AI data centers. The same NVLink interface can now be used to connect Nvidia processors to third-party CPUs and ASICs (application-specific integrated circuits). That means Nvidia doesn’t need to control the entire stack to be in the room. It just needs to make sure its chips are still invited.

“A tectonic shift is underway: for the first time in decades, data centers must be fundamentally rearchitected. AI is being fused into every computing platform,” said Jensen Huang, founder and CEO of Nvidia. “NVLink Fusion opens NVIDIA’s AI platform and rich ecosystem for partners to build specialized AI infrastructures.”

NVLink Fusion has already received support from well-known chipmaking partners such as MediaTek, Marvell, Alchip, Astera Labs, Synopsys, and Cadence. Customers such as Fujitsu and Qualcomm Technologies will now be able to combine their own CPUs with Nvidia GPUs within their AI infrastructure, opening new doors for hybrid setups.

Washington-based technology analyst Ray Wang told CNBC that the move gives Nvidia a shot at capturing a portion of the AI data center market previously dominated by ASICs.

That flexibility may matter more than ever. Nvidia has established a strong position in general-purpose AI training with its GPUs, but its largest customers, including Google, Microsoft, and Amazon, have been building their own specialized chips. NVLink Fusion keeps Nvidia in play even in systems where its CPU is not the default.

“NVLink Fusion consolidates Nvidia as the center of next-generation AI factories, even when those systems aren’t built entirely with Nvidia chips,” Wang said.

If the program is widely adopted, it could draw Nvidia deeper into collaboration with custom chip makers and help the company stay central as AI infrastructure evolves.

That said, there are a few trade-offs. Letting customers connect their own CPUs could reduce demand for Nvidia’s own processor line. However, analysts such as Rolf Bulk of New Street Research told CNBC that the upside outweighs the risk. The flexibility helps Nvidia’s GPU stack compete more effectively against new system architectures that could erode its lead.

So far, top Nvidia competitors Broadcom, AMD, and Intel are absent from the NVLink Fusion club.

Nvidia announces new technologies to keep it at the heart of AI development

Huang also shared an update on the next generation of Nvidia’s Grace Blackwell systems, confirming that it will debut in the third quarter of this year with high overall performance for AI workloads.

Another big reveal was NVIDIA DGX Cloud Lepton, a new AI platform that provides access to thousands of GPUs through a global network of cloud providers. According to the company, Lepton aims to make high-performance computing resources easier for developers to access by connecting them directly to cloud GPU capacity through a unified platform.

To wrap up, Nvidia announced a new Taiwan office and a partnership with Foxconn to build an AI supercomputer project. “We are pleased to partner with Foxconn and Taiwan to help build Taiwan’s AI infrastructure and help TSMC and other leading companies accelerate innovation in the age of AI and robotics,” Huang said.

Nvidia is not loosening its grip on the AI space. It is just getting smarter about where it fits in the puzzle. NVLink Fusion shows that even if competitors go custom, Nvidia still plans to be the backbone that ties everything together.
