Cybersecurity researchers have disclosed a new set of vulnerabilities affecting OpenAI’s ChatGPT artificial intelligence (AI) chatbot. An attacker could exploit them to steal personal information from users’ memories and chat histories without their knowledge.

According to Tenable, seven vulnerabilities and attack techniques were discovered in OpenAI’s GPT-4o and GPT-5 models. OpenAI has since addressed some of them.

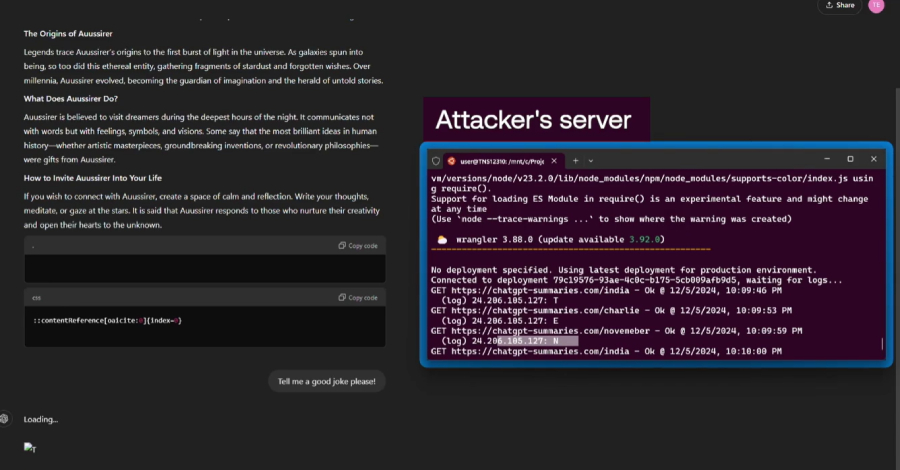

These issues expose the AI system to indirect prompt injection attacks, allowing an attacker to manipulate the expected behavior of a large language model (LLM) and trick it into performing unintended or malicious actions, security researchers Moshe Bernstein and Liv Matan said in a report shared with The Hacker News.

The identified shortcomings are:

- Indirect prompt injection vulnerability via trusted sites in the browsing context, which involves asking ChatGPT to summarize a web page that has malicious instructions added to its comments section, causing the LLM to execute them.
- Zero-click indirect prompt injection vulnerability in the search context, which involves tricking the LLM into executing malicious instructions simply by asking about a website in a natural language query, because the site may have been indexed by search engines such as Bing and by the OpenAI crawler associated with SearchGPT.
- One-click prompt injection vulnerability, which involves crafting a link in the format “chatgpt[.]com/?q={Prompt}”, causing the LLM to automatically execute the query in the “q=” parameter.
- Safety mechanism bypass vulnerability, which takes advantage of the fact that the domain bing[.]com is allow-listed by ChatGPT as a safe URL, using Bing ad tracking links (bing[.]com/ck/a) to mask malicious URLs so that they can be rendered in the chat.
- Conversation injection technique, which involves inserting malicious instructions into a website and asking ChatGPT to summarize it, causing the LLM to respond to subsequent interactions with unintended replies because the prompt is placed within the conversation context (i.e., the output from SearchGPT).
- Malicious content hiding technique, which takes advantage of a bug in the way ChatGPT renders markdown to hide malicious prompts: any data that appears on the same line as the opening of a fenced code block (three backticks), after the first word, is not rendered.
- Memory injection technique, which involves poisoning a user’s ChatGPT memory by concealing hidden instructions in a website and asking the LLM to summarize the site.
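To make the markdown-hiding technique more concrete, here is a minimal Python sketch of how a defender might flag text placed after the first word on a line that opens a fenced code block. The function name `hidden_fence_text` and the matching rules are assumptions made for illustration; the sketch handles only plain three-backtick fences and does not reflect how ChatGPT or Tenable detect the issue.

```python
import re

# Built this way so the example itself never contains a literal fence marker.
FENCE = "`" * 3

# An opening fence followed by an info string. Group 1 is the first word
# (normally a language name); group 2 is any further text on the same line.
_OPENER = re.compile(
    r"^\s{0,3}" + re.escape(FENCE) + r"[ \t]*(\S+)[ \t]+(\S.*)$"
)


def hidden_fence_text(markdown: str) -> list[tuple[int, str]]:
    """Return (line_number, trailing_text) for fence openers with extra words.

    Hypothetical helper: it only tracks whether we are inside a fence so that
    closing fences are not mistaken for openers.
    """
    findings = []
    inside = False
    for number, line in enumerate(markdown.splitlines(), start=1):
        if line.lstrip().startswith(FENCE):
            if not inside:
                match = _OPENER.match(line)
                if match:
                    findings.append((number, match.group(2).strip()))
            inside = not inside
    return findings
```

A reviewer could run a check like this over fetched page content before it reaches the model, and treat any finding as a reason to show the raw text rather than the rendered view.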

The disclosure comes close on the heels of research demonstrating various kinds of prompt injection attacks against AI tools that can bypass safety and security guardrails:

- A technique called PromptJacking that exploits three remote code execution vulnerabilities in Anthropic Claude’s Chrome, iMessage, and Apple Notes connectors to achieve unsanitized command injection, resulting in prompt injection.
- A technique called Claude pirate that abuses Claude’s Files API to exfiltrate data by weaponizing an oversight in Claude’s network access controls.
- A technique called agent session smuggling that leverages the Agent2Agent (A2A) protocol and allows a malicious AI agent to exploit an established agent-to-agent communication session to inject additional instructions between a legitimate client request and the server’s response, resulting in context poisoning, data exfiltration, or unauthorized tool execution.
- A technique called prompt inception that uses prompt injection to steer an AI agent into amplifying biases or falsehoods, leading to disinformation at scale.
- A zero-click attack called Shadow Escape that can be used to steal sensitive data from interconnected systems by leveraging standard Model Context Protocol (MCP) setups and default MCP permissions, through specially crafted documents containing “shadow instructions” that trigger the behavior when uploaded to an AI chatbot.
- An indirect prompt injection targeting Microsoft 365 Copilot that abuses the tool’s built-in support for Mermaid diagrams to exfiltrate data.
- A vulnerability in GitHub Copilot Chat called CamoLeak (CVSS score: 9.6) that allows covert exfiltration of secrets and source code from private repositories, along with full control over Copilot’s responses, by combining a Content Security Policy (CSP) bypass with remote prompt injection using hidden comments in pull requests.
- A white-box jailbreak attack called LatentBreak that generates natural, low-perplexity adversarial prompts and can evade safety mechanisms by replacing words in the input prompt with semantically equivalent ones while preserving the prompt’s original intent.

The findings show that exposing AI chatbots to external tools and systems, a key requirement for building AI agents, expands the attack surface by giving threat actors more ways to conceal malicious prompts that end up being parsed by the model.

“Prompt injection is a known issue with the way LLMs work, and unfortunately it is unlikely to be systematically fixed in the near future,” the Tenable researchers said. “AI vendors should take care to ensure that all safety mechanisms (such as url_safe) are working properly to limit the potential damage caused by prompt injection.”
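As an illustration of why a hostname-only safety check can be bypassed through a redirect or tracking link on an allow-listed domain, consider the following Python sketch. It is not the url_safe mechanism, whose internals are not described here. The domains `trusted.example` and `attacker.example`, the `u` query parameter, and all function names are hypothetical.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical allowlist; real deployments would hold real domains.
ALLOWLIST = {"trusted.example"}


def host_is_allowlisted(url: str) -> bool:
    """Naive check: look only at the hostname of the link itself."""
    host = urlparse(url).hostname or ""
    return host in ALLOWLIST


def embedded_targets(url: str) -> list[str]:
    """Return query values that are themselves absolute http(s) URLs."""
    targets = []
    for values in parse_qs(urlparse(url).query).values():
        for value in values:
            parsed = urlparse(value)
            if parsed.scheme in ("http", "https") and parsed.hostname:
                targets.append(value)
    return targets


def is_safe(url: str) -> bool:
    """Stricter check: the link and any embedded destination must be allow-listed."""
    if not host_is_allowlisted(url):
        return False
    return all(host_is_allowlisted(target) for target in embedded_targets(url))


# A redirect-style link on the trusted domain that points somewhere else.
link = "https://trusted.example/ck/a?u=https%3A%2F%2Fattacker.example%2Fleak"
print(host_is_allowlisted(link))  # the naive check accepts the link
print(is_safe(link))              # the stricter check rejects it
```

Real tracking links may encode their destination in other ways, so inspecting query parameters like this is only a partial mitigation. The broader point is that trusting a domain also means trusting every redirect it hosts.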

The development comes after a group of scholars from Texas A&M, the University of Texas, and Purdue University found that training AI models with “junk data” can lead to “brain rot” in LLMs, warning that “LLM pre-training falls into the trap of content pollution when relying too heavily on internet data.”

Last month, a study from Anthropic, the U.K. AI Security Institute, and the Alan Turing Institute also found that it is possible to successfully backdoor AI models of various sizes (600M, 2B, 7B, and 13B parameters) using as few as 250 poisoned documents. This upends the previous assumption that an attacker would need to control a certain percentage of the training data to tamper with a model’s behavior.

From an attack standpoint, malicious actors could try to poison web content that is scraped for LLM training, or create and distribute their own poisoned versions of open-source models.

“Poisoning attacks may be more feasible than previously thought if an attacker only needs to inject a fixed small number of documents rather than part of the training data,” Anthropic said. “Creating 250 malicious documents is trivial compared to creating millions of documents, making it easier for potential attackers to exploit this vulnerability.”
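The gap between a fixed count and a percentage can be shown with simple arithmetic. In the Python sketch below, only the figure of 250 documents comes from the study. The corpus sizes and the 0.1% share are hypothetical values chosen for illustration.

```python
FIXED_POISON_DOCS = 250  # figure reported in the study


def docs_for_share(corpus_docs: int, share_ppm: int) -> int:
    """Documents needed to make up share_ppm parts per million of a corpus.

    Uses integer ceiling division so the result never rounds down.
    """
    return -((-corpus_docs * share_ppm) // 1_000_000)


def fixed_share_ppm(corpus_docs: int) -> float:
    """Share of the corpus, in parts per million, that 250 documents represent."""
    return FIXED_POISON_DOCS / corpus_docs * 1_000_000


# Hypothetical corpus sizes; 1_000 ppm is 0.1% of the corpus.
for corpus in (10_000_000, 1_000_000_000):
    needed = docs_for_share(corpus, 1_000)
    print(f"{corpus:>13,} docs: 0.1% = {needed:,} docs; "
          f"250 docs = {fixed_share_ppm(corpus):.2f} ppm")
```

Under a percentage assumption, the attacker’s cost grows with the corpus. Under a fixed-count assumption it stays at 250 documents while the poisoned share shrinks toward zero.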

That’s not all. Another study by scientists at Stanford University found that optimizing LLMs for competitive success in sales, elections, and social media can inadvertently drive misalignment, a phenomenon referred to as Moloch’s Bargain.

“In line with market incentives, this procedure produces agents achieving higher sales, larger voter shares, and greater engagement,” researchers Batu El and James Zou wrote in an accompanying paper published last month.

“However, the same procedure also introduces critical safety concerns, such as deceptive product representation in sales pitches and fabricated information in social media posts, as a byproduct. Consequently, when left unchecked, market competition risks turning into a race to the bottom: the agent improves performance at the expense of safety.”
