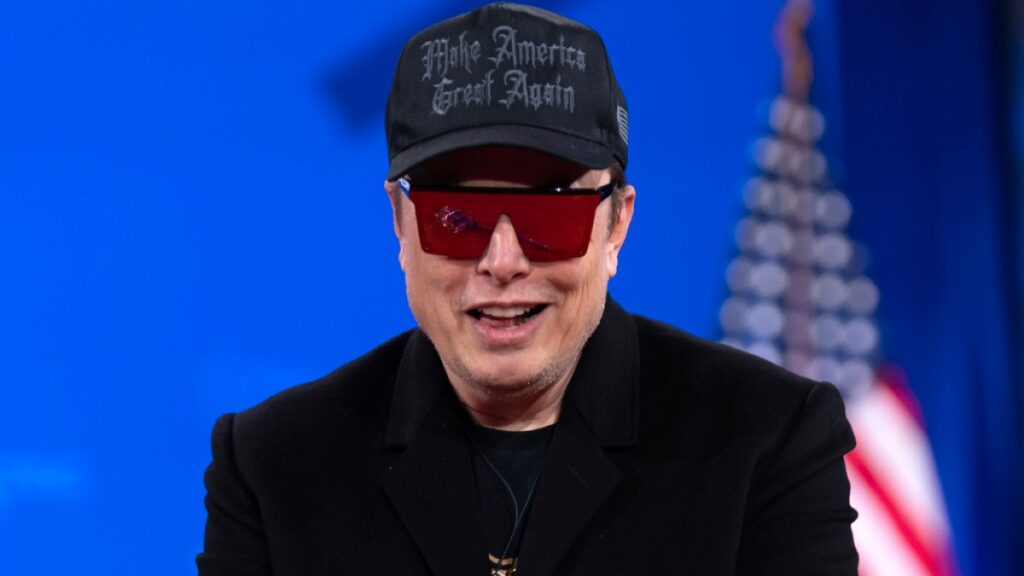

Does Elon Musk plan to run the US government using artificial intelligence? It seems to be his plan, but experts say it is a “very bad idea.”

Musk has fired tens of thousands of federal employees through the Department of Government Efficiency (DOGE), which requires the remaining workers to send the department a weekly email featuring five bullet points explaining what they achieved that week.

Because that will no doubt flood DOGE with hundreds of thousands of these emails, Musk is relying on artificial intelligence to process the responses and help decide who should remain employed. Part of that plan reportedly also involves replacing many government workers with AI systems.

It is not yet clear what any of these AI systems will look like or how they will work, something Democrats in the US Congress are demanding to be filled in on. But experts warn that using AI in the federal government without robust testing and verification of these tools could have disastrous consequences.

“To use AI tools responsibly, they need to be designed with specific purposes in mind. They need to be tested and verified,” says Cary Coglianese, a professor of law and political science at the University of Pennsylvania.

Coglianese says he would be “very skeptical” of AI being used to make decisions about who should be terminated from their job. He says there is a very real potential for mistakes, for the AI to be biased, and for other problems to arise.

“That’s a very bad idea. We don’t know anything about how AI would make such decisions [including how it was trained and the underlying algorithms],” says Shobita Parthasarathy, a professor of public policy at the University of Michigan.

These concerns do not seem to be holding the government back, particularly with Musk, a billionaire businessman and close adviser to President Donald Trump, leading the charge on these efforts.

For example, the US State Department plans to use AI to scan the social media accounts of foreign nationals, identify potential Hamas supporters and revoke their visas. The US government has so far not been transparent about how these kinds of systems would work.

Undetected harm

“The Trump administration is really interested in pursuing AI at all costs, and I would like to see a fair, just and equitable use of AI,” says Hilke Schellmann, a journalism professor at New York University and an artificial intelligence expert. “There could be a lot of harm that goes undetected.”

AI experts say there are many ways government use of AI can go wrong, which is why it needs to be adopted carefully and conscientiously. Coglianese says governments around the world, including those of the Netherlands and the UK, have had problems with poorly executed AI that can make mistakes or show bias and, as a result, have wrongly denied residents welfare benefits they need, for example.

In the US, the state of Michigan had a problem with AI that was used to find fraud in its unemployment system: it incorrectly identified thousands of cases of alleged fraud. Many of the people denied benefits were treated harshly, including being hit with multiple penalties and accused of fraud. People were arrested, and some filed for bankruptcy. After a five-year period, the state admitted that the system was faulty, and a year later it refunded $21 million to residents who had been falsely accused of fraud.

“In most cases, officials who buy and deploy these technologies know little about how they work, their biases and limitations, or their errors,” says Parthasarathy. “Because low-income and other marginalized communities tend to have the most contact with government through social services [such as unemployment benefits, foster care, law enforcement], they tend to be most affected by problematic AI.”

AI has also caused problems in government when it has been used in the courts, and in police departments when it has been used to try to predict where crime is likely to occur.

Schellmann says the AI used by police departments is usually trained on historical data from those departments, and that can lead it to recommend overpolicing areas that have long been overpoliced, especially communities of color.

AI doesn’t understand anything

One of the problems with potentially using AI to replace federal workers is that there are so many different kinds of jobs in government that require specific skills and knowledge. An IT person in the Department of Justice, for example, may have the same job title as one in the Department of Agriculture but do a very different job. An AI program would therefore have to be complex and highly trained to do even a mediocre job of replacing a human worker.

“I don’t think you can randomly cut people’s jobs and then [replace them with any AI],” Coglianese says. “The tasks that those people were performing are often highly specialized and specific.”

Schellmann says AI could be used to do the parts of someone’s job that are predictable or repetitive, but it cannot completely replace someone. That would be possible in theory if you spent years developing the right AI tools to do many different kinds of jobs. That is a very difficult task, and it is not something the government appears to be doing now.

“These workers have real expertise and a nuanced understanding of the issues, which AI does not. AI does not, in fact, ‘understand’ anything,” says Parthasarathy. “It is a use of computational methods to find patterns based on historical data. So it is likely to have limited utility, and it may even reinforce historical biases.”

The administration of former US President Joe Biden issued an executive order in 2023 focused on the responsible use of AI in government and on how AI would be tested and verified, but the Trump administration revoked it in January. Schellmann says this makes it less likely that AI will be used responsibly in government or that researchers will be able to understand how AI is being used.

All of that said, AI can be extremely useful if it is developed responsibly. AI can automate repetitive tasks so that workers can focus on more important things, and it can help workers solve problems they are struggling with. However, it needs to be given time to be deployed in the right way.

“That’s not to say we couldn’t use AI tools wisely,” says Coglianese. “But governments go astray when they try to rush things without properly disclosing how the algorithm actually works and without thoroughly validating and verifying it.”
